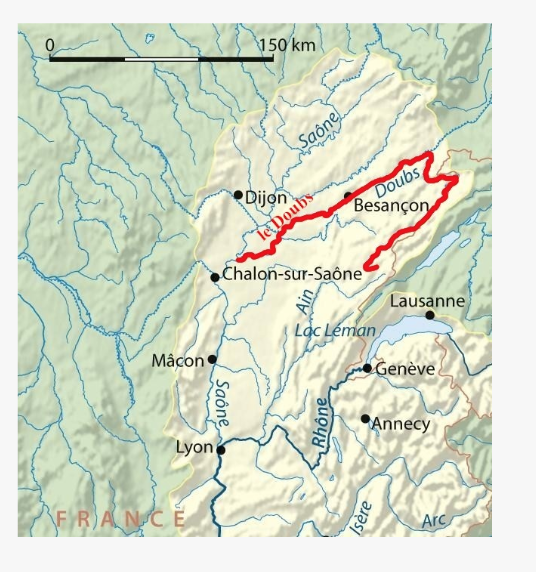

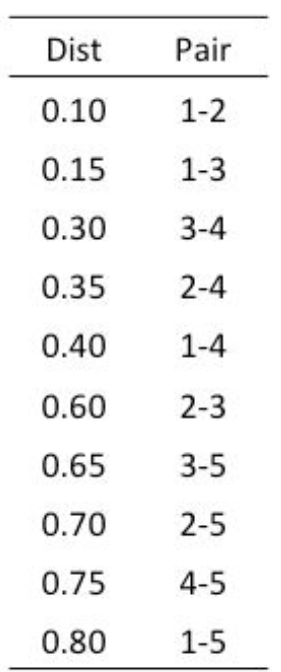

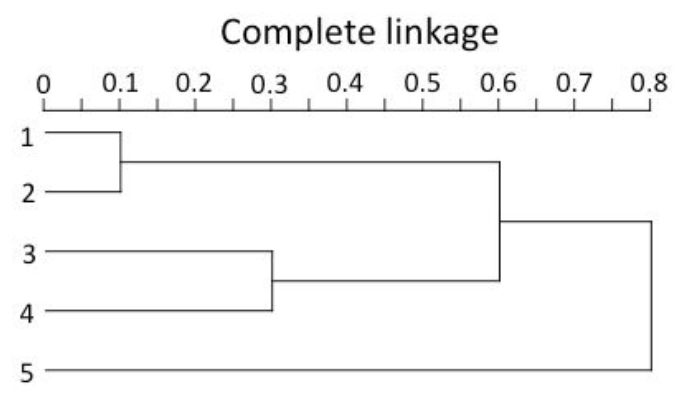

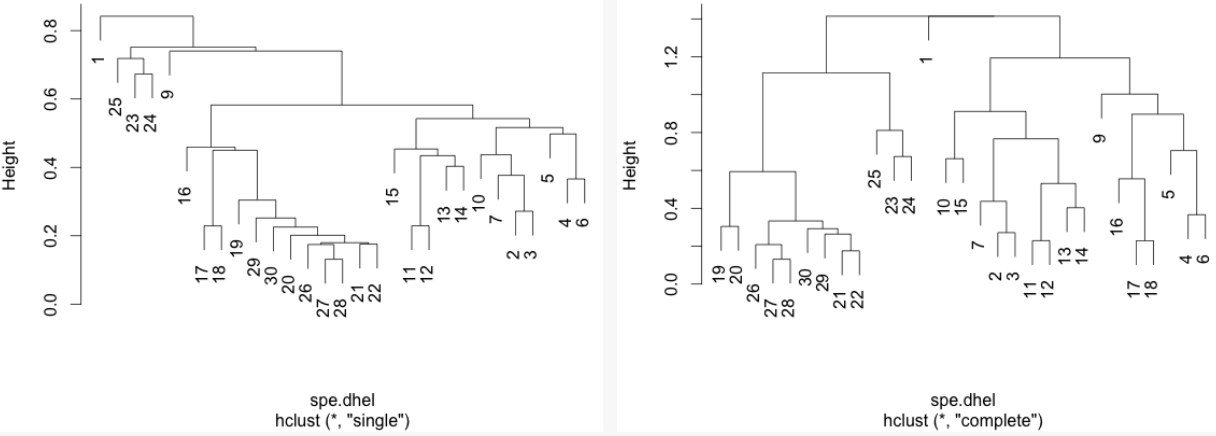

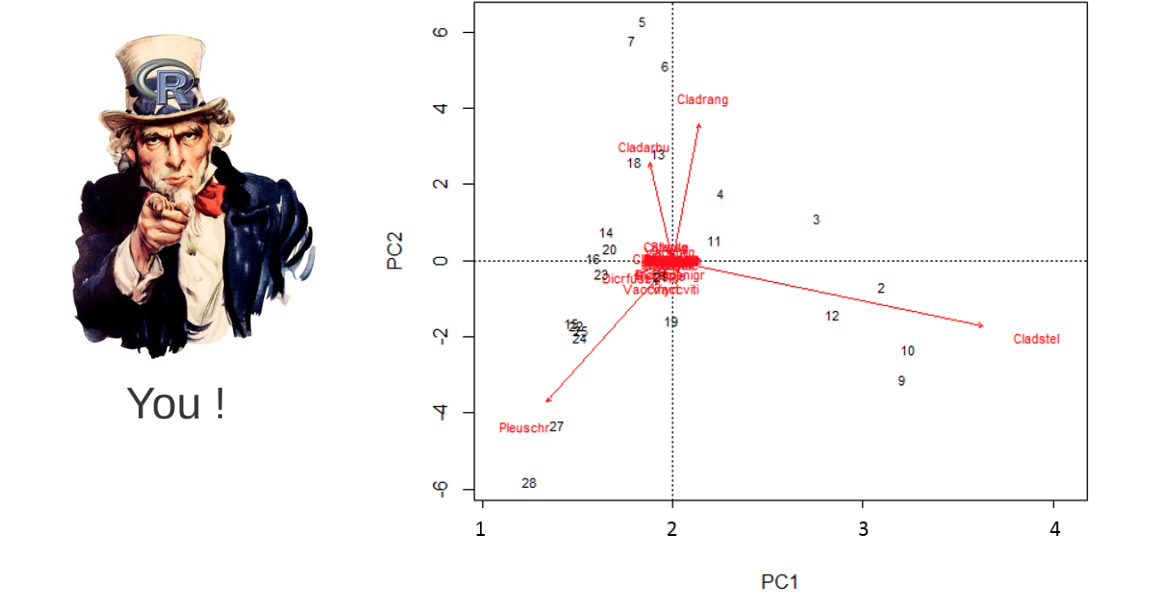

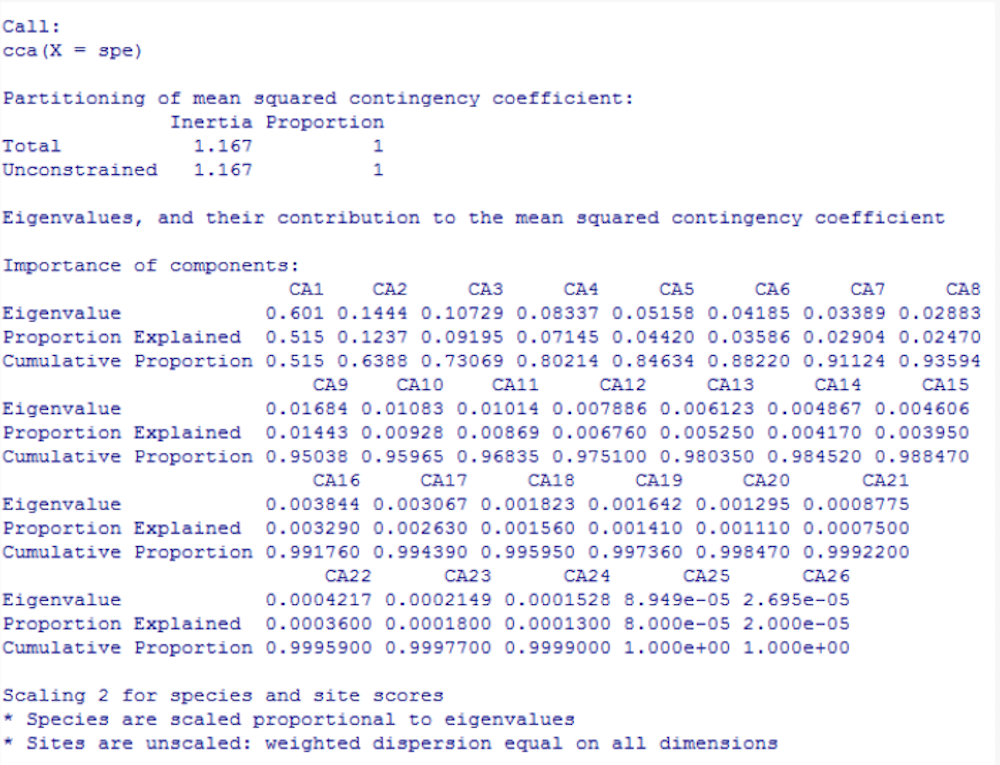

class: center, middle, inverse, title-slide .title[ # Workshop 9: Multivariate Analyses ] .subtitle[ ## QCBS R Workshop Series ] .author[ ### Québec Centre for Biodiversity Science ] --- class: inverse, center, middle # About this workshop [](https://r.qcbs.ca/workshop09/pres-en/workshop09-pres-en.html) [](https://r.qcbs.ca/workshop09/book-en/workshop09-script-en.R) [](https://r.qcbs.ca/workshop09/book-en/index.html) [](https://r.qcbs.ca/workshops/r-workshop-09/) [](https://github.com/QCBSRworkshops/workshop09) --- <p style="font-size:75%"> .center[ **QCBS members who contributed to this workshop** by modifying and improving its content as part of the <br> *Le*arning *a*nd *D*evelopment *A*ward ] .pull-left[ .right[ **2022** - **2021** - **2020** Pedro Henrique P. Braga Katherine Hébert Mi Lin Linley Sherin <br> **2019** - **2018** - **2017** Gabriel Muñoz Marie Hélène-Brice Pedro Henrique P. Braga ] ] .pull-right[ .left[ **2016** - **2015** - **2014** Bérenger Bourgeois Xavier Giroux-Bougard Amanda Winegardner Emmanuelle Chrétien Monica Granados ] ] </p> <br> .center[ __If you would like to contribute too__, visit [r.qcbs.ca/contributing](https://r.qcbs.ca/contributing/) <br> and don't hesitate to [get in touch](mailto:qcbs.csbq.r@gmail.com) with us! ] --- # Required material This workshop requires the latest [RStudio](https://rstudio.com/products/rstudio/download/#download) and [R](https://cran.rstudio.com/) versions. .pull-left[ You must also use these packages: * [ape](https://cran.r-project.org/package=ape) * [ade4](https://cran.r-project.org/package=ade4) * [codep](https://cran.r-project.org/package=codep) * [gclus](https://cran.r-project.org/package=gclus) * [vegan](https://cran.r-project.org/package=vegan) * [GGally](https://cran.r-project.org/package=GGally) * [PlaneGeometry](https://cran.r-project.org/package=PlaneGeometry) * [remotes](https://cran.r-project.org/package=remotes) ] .pull-right[ To install them from CRAN, run: ```r install.packages(c("ape", "ade4", "codep", "gclus", "vegan", "GGally", "PlaneGeometry", "remotes")) ``` ] <br> .pull-left2[ Throughout this workshop, there will be a series of **challenges** that you can recognize by this Rubik's cube. **During these challenges, do not hesitate to collaborate!** ] .pull-right2[ .center[  ] ] --- # Learning objectives 1. Learn the basics of multivariate analysis to reveal patterns in community composition data 2. Use `R` to perform an unconstrained ordination 3. Learn about similarity and dissimilarity coefficients and transformations to perform multivariate analysis 4. Use `R` to create dendrograms 5. Learn the following methods: * Clustering analysis * Principal Component Analysis (PCA) * Principal Coordinate Analysis (PCoA) * Non-Metric MultiDimensional Scaling (NMDS) --- class: inverse, center, middle # 1. Preamble --- # Recap: Univariate analyses We have learned a multitude of analyses that allowed us to interpret ecological data while depicting the effects of _one or multiple_ variables in _one_ response variable. -- .pull-left[ We can recall the: 1. General Linear Models 1. `lm()`; 2. `anova()`; 3. `t.test()`; 4. `lmer()`. 2. Generalized Linear Models 1. `glm()` and `glmer()` with several `family()` link functions. 3. Generalized Additive Models 1. `gam()`. ] -- .pull-right[ These models allowed us to ask questions such as: 1. _What are the effects of precipitation and temperature on species richness?_ 2. _How does the abundance of microbes change between hosts?_ 3. _Do co-occurring fish become more aggressive after being induced to fear?_ ] --- # Multivariate statistics However, one may be interested in making inferences from ecological data containing _more than one_ outcome or dependent variable. This interest may be driven by hypothesis testing and modelling, but also be entirely exploratory. -- For instance, our research question might be: 1. How does the _bacterial composition_ on maple leaves change along the elevational gradient? 2. What is the _composition dissimilarity_ of bat communities? 3. How _closely-related_ are local spider communities in terms of their _composition_? ??? -- In all these cases, the outcome is composed of several variables, _e.g._ usually a sample-by-species or sample-by-environment matrix. -- <br> We will now dive into **multivariate statistics**, a tool set that will allow us to address questions requiring the simultaneous observation or analysis of more than one outcome variable. --- # Background on multivariate methods 1. Association (or dis-similarity) measures and matrices 2. Classification (or cluster) analysis 3. Unconstrained ordination 4. Constrained (or canonical) ordination -- <br> .center[But, before that, we must recall the basics of **matrix algebra**.] --- # Matrix algebra: a *very brief* summary Matrix algebra is well-suited for ecology, because most (if not all) _data sets_ we work with are in a _matrix_ format. -- .pull-left[ Ecological data tables are obtained as object-observations or sampling units, and are often recorded as this: <br> | Objects | `\(y_1\)` | `\(y_2\)` | `\(\dots\)` | `\(y_n\)` | | :-------------: |:-------------:| :-----:|:-----:|:-----:| | `\(x_1\)` | `\(y_{1,1}\)` | `\(y_{1,2}\)` | `\(\dots\)` | `\(y_{1,n}\)` | | `\(x_2\)` | `\(y_{2,1}\)` | `\(y_{2,2}\)` | `\(\dots\)` | `\(y_{2,n}\)` | | `\(\vdots\)` | `\(\vdots\)` | `\(\vdots\)` | `\(\ddots\)` | `\(\vdots\)` | | `\(x_m\)` | `\(y_{m,1}\)` | `\(y_{m,2}\)` | `\(\dots\)` | `\(y_{m,n}\)` | <br> where `\(x_m\)` is the sampling unit `\(m\)`; and `\(y_n\)` is the ecological descripor that can be, for example, species present in a sampling unit, locality, or a chemical variable. ] -- .pull-right[ The same ecological data table can be represented in _matrix notation_ like this: `$$Y = [y_{m,n}] = \begin{bmatrix} y_{1,1} & y_{1,2} & \cdots & y_{1,n} \\ y_{2,1} & y_{2,2} & \cdots & y_{2,n} \\ \vdots & \vdots & \ddots & \vdots \\ y_{m,1} & y_{m,2} & \cdots & y_{m,n} \end{bmatrix}$$` where lowercase letters indicate _elements_, and the subscript letters indicate the _position of these elements_ in the matrix (and in the table!). ] --- # Matrix algebra: a *very brief* summary Matrix algebra is well-suited for ecology, because most (if not all) _data sets_ we work with, are in a _matrix_ format. .pull-left[ Moreover, any subset of a matrix can be recognized! <br> .center[_a row matrix_] `$$\begin{bmatrix} y_{1,1} & y_{1,2} & \cdots & y_{1,n} \\ \end{bmatrix}$$` .center[_a column matrix_] `$$\begin{bmatrix} y_{1,1} \\ y_{2,1} \\ \vdots \\ y_{m,1} \end{bmatrix}$$` ] .pull-right[ The same ecological data table can be represented in _matrix notation_ like this: `$$Y = [y_{m,n}] = \begin{bmatrix} y_{1,1} & y_{1,2} & \cdots & y_{1,n} \\ y_{2,1} & y_{2,2} & \cdots & y_{2,n} \\ \vdots & \vdots & \ddots & \vdots \\ y_{m,1} & y_{m,2} & \cdots & y_{m,n} \end{bmatrix}$$` where lowercase letters indicate _elements_, and the subscript letters indicate the _position of these elements_ in the matrix (and in the table!). ] --- # Matrix algebra: a *very brief* summary ###### Association matrices Two important matrices can be derived from the ecological data matrix: the _**association matrix among objects**_ and the _**association matrix among descriptors**_. -- .pull-left[ Using the data from our matrix `\(Y\)`, <div class="math"> \[ Y = \begin{array}{cc} \begin{array}{ccc} x_1 \rightarrow\\ x_2 \rightarrow\\ \vdots \\ x_m \rightarrow\\ \end{array} & \begin{bmatrix} y_{1,1} & y_{1,2} & \cdots & y_{1,n} \\ y_{2,1} & y_{2,2} & \cdots & y_{2,n} \\ \vdots & \vdots & \ddots & \vdots \\ y_{m,1} & y_{m,2} & \cdots & y_{m,n} \end{bmatrix} \end{array} \] </div> one can examine the relationship between the first two objects: <div class="math"> \[x_1 \rightarrow \begin{bmatrix} y_{1,1} & y_{1,2} & \cdots & y_{1,n} \\ \end{bmatrix} \] </div> <div class="math"> \[x_2 \rightarrow \begin{bmatrix} y_{2,1} & y_{2,2} & \cdots & y_{2,n} \\ \end{bmatrix} \] </div> <p>and obtain \(a_{1,2}\). </p> ] -- .pull-right[ We can populate the association matrix `\(A_{n,n}\)` with the relationships between all objects from `\(Y\)`: <div class="math"> \[A_{n,n} = \begin{bmatrix} a_{1,1} & a_{1,2} & \cdots & a_{1,n} \\ a_{2,1} & a_{2,2} & \cdots & a_{2,n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{n,1} & a_{n,2} & \cdots & a_{n,n} \end{bmatrix}\] </div> <p>Because \(A_{n,n}\) has the same number of rows and columns, it is denoted a <i>square matrix</i>.</p> <p>Therefore, it has \(n^2\) elements.</p> ] --- # Matrix algebra: a *very brief* summary ###### Association matrices Two important matrices can be derived from the ecological data matrix: the _**association matrix among objects**_ and the _**association matrix among descriptors**_. -- .pull-left[ We can also obtain the relationship between the first two descriptors of `\(Y\)`, `\(y_1\)` and `\(y_2\)`: .pull-left[ `$$\begin{bmatrix} y_{1,2} \\ y_{2,2} \\ \vdots \\ y_{m,2} \end{bmatrix}$$` ] .pull-right[ `$$\begin{bmatrix} y_{1,1} \\ y_{2,1} \\ \vdots \\ y_{m,1} \end{bmatrix}$$` ] and store it in `\(a_{1,2}\)`. ] -- .pull-right[ We can populate the association matrix `\(A_{m,m}\)` with the relationships between all descriptors from `\(Y\)`: `$$A_{m,m} = \begin{bmatrix} a_{1,1} & a_{1,2} & \cdots & a_{1,m} \\ a_{2,1} & a_{2,2} & \cdots & a_{2,m} \\ \vdots & \vdots & \ddots & \vdots \\ a_{m,1} & a_{m,2} & \cdots & a_{m,m} \end{bmatrix}$$` <p>This \(A_{m,m}\) is a <i>square matrix</i>, and it has \(m^2\) elements.</p> ] --- # Matrix algebra: a *very brief* summary ###### Association matrices Two important matrices can be derived from the ecological data matrix: the _**association matrix among objects**_ and the _**association matrix among descriptors**_. These matrices are the basis of **_Q-mode_** and **_R-mode_** analyses in ecology. .pull-left[ `$$Y_{m,n} = \begin{bmatrix} y_{1,1} & y_{1,2} & \cdots & y_{1,n} \\ y_{2,1} & y_{2,2} & \cdots & y_{2,n} \\ \vdots & \vdots & \ddots & \vdots \\ y_{m,1} & y_{m,2} & \cdots & y_{m,n} \end{bmatrix}$$` ] .pull-right[ `$$A_{m,m} = \begin{bmatrix} a_{1,1} & a_{1,2} & \cdots & a_{1,m} \\ a_{2,1} & a_{2,2} & \cdots & a_{2,m} \\ \vdots & \vdots & \ddots & \vdots \\ a_{m,1} & a_{m,2} & \cdots & a_{m,m} \end{bmatrix}$$` ] .pull-left[ `$$A_{n,n} = \begin{bmatrix} a_{1,1} & a_{1,2} & \cdots & a_{1,n} \\ a_{2,1} & a_{2,2} & \cdots & a_{2,n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{n,1} & a_{m,2} & \cdots & a_{n,n} \end{bmatrix}$$` ] .pull-right[ .pull-left[ `\(\leftarrow\)` **_R-mode_** analysis for descriptors or species ] .pull-right[ `\(\uparrow\)` **_Q-mode_** analysis for objects or sites ] ] --- ## Our plans for the next steps... We will dive into **R-mode** and **Q-mode** analyses, and we will explore: 1. Association coefficients: dissimilarity and similarity 2. Composition data transformation 3. Cluster analyses 3. Ordinations in the reduced space <br> .center[**But, first, let us introduce a real data set.**] .center[_It will be your time to get your hands dirty!_] --- exclude: true ###### Association coefficients _Stepping up our game_, we can use population parameters and sample statistics to reveal the structure of our ecological data. For example, we can represent in our association matrix `\(A_{n,n}\)` --- # Doubs river fish communities .pull-left3[ Verneaux (1973) proposed to use fish species to characterize ecological zones along European rivers and streams. He collected data at **30 localities** along the Doubs river, which runs near the France-Switzerland border, in the Jura Mountains. He showed that fish communities were biological indicators of these water bodies. ] .pull.right3[ .center[] ] -- .pull-left3[ Their data is split in three matrices: 1. The abundance of 27 fish species across the communities; 2. The environmental variables recorded at each site; and, 3. The geographical coordinates of each site. ] .pull.right3[ .center[] <br> ] .xsmall[Verneaux, J. (1973) _Cours d'eau de Franche-Comté (Massif du Jura). Recherches écologiques sur le réseau hydrographique du Doubs_. Essai de biotypologie. Thèse d'état, Besançon. 1–257.] --- # Doubs river fish communities .pull-left3[ You can download these datasets from [r.qcbs.ca/workshops/r-workshop-09](http://r.qcbs.ca/workshops/r-workshop-09/). ```r spe <- read.csv("data/doubsspe.csv", row.names = 1) env <- read.csv("data/doubsenv.csv", row.names = 1) ``` ] -- .pull-right3[ This data can also be retrieved from the `ade4` package: ```r library(ade4) data(doubs) spe <- doubs$fish env <- doubs$env ``` Alternatively, from the `codep` package: ```r library(codep) data(Doubs) spe <- Doubs.fish env <- Doubs.env ``` ] --- # Doubs river environmental data We can then explore the objects containing our newly loaded data. Let us peek into the `env` data: .pull-left[ ```r str(env) # 'data.frame': 30 obs. of 11 variables: # $ das: num 0.3 2.2 10.2 18.5 21.5 32.4 36.8 49.1 70.5 99 ... # $ alt: int 934 932 914 854 849 846 841 792 752 617 ... # $ pen: num 48 3 3.7 3.2 2.3 3.2 6.6 2.5 1.2 9.9 ... # $ deb: num 0.84 1 1.8 2.53 2.64 2.86 4 1.3 4.8 10 ... # $ pH : num 7.9 8 8.3 8 8.1 7.9 8.1 8.1 8 7.7 ... # $ dur: int 45 40 52 72 84 60 88 94 90 82 ... # $ pho: num 0.01 0.02 0.05 0.1 0.38 0.2 0.07 0.2 0.3 0.06 ... # $ nit: num 0.2 0.2 0.22 0.21 0.52 0.15 0.15 0.41 0.82 0.75 ... # $ amm: num 0 0.1 0.05 0 0.2 0 0 0.12 0.12 0.01 ... # $ oxy: num 12.2 10.3 10.5 11 8 10.2 11.1 7 7.2 10 ... # $ dbo: num 2.7 1.9 3.5 1.3 6.2 5.3 2.2 8.1 5.2 4.3 ... ``` ] .pull-right[ |Variable |Description| |:--:|:--| |das|Distance from the source [km] | |alt|Altitude [m a.s.l.] | |pen|Slope [per thousand] | |deb|Mean min. discharge [m<sup>3</sup>s<sup>-1</sup>] | |pH|pH of water | |dur|Ca conc. (hardness) [mgL<sup>-1</sup>] | |pho|K conc. [mgL<sup>-1</sup>] | |nit|N conc. [mgL<sup>-1</sup>] | |amn|NH₄⁺ conc. [mgL<sup>-1</sup>] | |oxy|Diss. oxygen [mgL<sup>-1</sup>] | |dbo|Biol. oxygen demand [mgL<sup>-1</sup>] | ] ```r summary(env) # summary statistics ``` --- # Doubs river fish communities Let us peek into the `spe` data: .pull-left[ ```r head(spe)[, 1:8] # CHA TRU VAI LOC OMB BLA HOT TOX # 1 0 3 0 0 0 0 0 0 # 2 0 5 4 3 0 0 0 0 # 3 0 5 5 5 0 0 0 0 # 4 0 4 5 5 0 0 0 0 # 5 0 2 3 2 0 0 0 0 # 6 0 3 4 5 0 0 0 0 ``` ```r str(spe) ... # 'data.frame': 30 obs. of 27 variables: # $ CHA: int 0 0 0 0 0 0 0 0 0 0 ... # $ TRU: int 3 5 5 4 2 3 5 0 0 1 ... # $ VAI: int 0 4 5 5 3 4 4 0 1 4 ... # $ LOC: int 0 3 5 5 2 5 5 0 3 4 ... # $ OMB: int 0 0 0 0 0 0 0 0 0 0 ... # $ BLA: int 0 0 0 0 0 0 0 0 0 0 ... # $ HOT: int 0 0 0 0 0 0 0 0 0 0 ... ... ``` ] .pull-right[ ```r # Try some of these! names(spe) # names of objects dim(spe) # dimensions str(spe) # structure of objects summary(spe) # summary statistics head(spe) # first 6 rows ``` ] --- # (Dis)similarity measures Community resemblance is almost always assessed on the basis of species composition data in the form of a site-by-species data table `\(Y_{m,n}\)`. We can obtain an association matrix `\(A_{m,m}\)` in the form of pairwise distances or dissimilarities `\(D_{m,m}\)` (or similarities `\(S_{m,m}\)`) and then analyse those distances. -- In `R`, we can compute distance or dissimilarity matrices using `stats::dist()`: ```r dist(spe) # 1 2 3 4 5 6 7 # 2 5.385165 # 3 7.416198 2.449490 # 4 7.874008 4.123106 3.000000 # 5 10.816654 10.677078 10.862780 9.219544 # 6 7.348469 4.582576 4.123106 2.828427 8.185353 # 7 6.855655 2.449490 2.000000 3.605551 10.488088 3.605551 # 8 3.000000 7.071068 8.717798 8.774964 10.954451 7.937254 8.246211 # 9 7.810250 8.717798 9.380832 8.774964 9.380832 6.708204 8.485281 # 10 6.708204 5.099020 5.291503 5.000000 9.273618 3.605551 4.472136 # 11 4.472136 3.316625 5.000000 5.477226 10.246951 5.291503 4.795832 # 12 6.708204 3.162278 3.464102 4.582576 11.045361 4.795832 3.162278 # 13 7.071068 4.358899 5.196152 6.164414 11.532563 6.633250 5.385165 # 14 9.110434 6.324555 6.164414 6.557439 11.916375 7.000000 6.324555 # 15 9.899495 7.810250 7.681146 7.483315 10.816654 6.633250 6.855655 # 16 11.090537 9.899495 9.797959 9.327379 10.198039 8.426150 9.055385 # 17 10.630146 9.486833 9.486833 8.774964 9.797959 8.062258 9.055385 # 18 9.848858 9.591663 9.899495 8.660254 9.055385 7.810250 9.380832 # 19 11.704700 11.135529 11.045361 10.148892 9.380832 8.888194 10.770330 # 20 13.453624 14.212670 14.628739 13.453624 10.770330 12.206556 14.212670 # 21 14.456832 15.362291 15.811388 14.525839 11.489125 13.601471 15.556349 # 22 16.643317 17.663522 18.110770 16.763055 13.416408 15.779734 17.663522 # 23 3.872983 7.483315 9.055385 9.000000 10.583005 7.937254 8.485281 # 24 7.071068 9.539392 10.816654 10.583005 11.357817 9.486833 10.246951 # 25 5.291503 8.306624 9.643651 9.273618 9.746794 8.246211 9.110434 # 26 11.135529 12.609520 13.304135 12.409674 10.723805 11.224972 12.922848 # 27 15.362291 16.462078 16.881943 15.811388 13.076697 14.696938 16.583124 # 28 16.522712 17.549929 18.000000 16.941074 14.352700 15.905974 17.606817 # 29 18.761663 19.364917 19.621417 18.384776 15.524175 17.776389 19.364917 # 30 20.396078 21.377558 21.794495 20.542639 17.117243 19.849433 21.517435 # 8 9 10 11 12 13 14 # 2 # 3 # 4 # 5 # 6 # 7 # 8 # 9 7.211103 # 10 6.480741 6.480741 # 11 5.385165 7.549834 4.582576 # 12 8.124038 8.717798 5.477226 3.872983 # 13 8.426150 10.049876 6.855655 4.000000 3.316625 # 14 10.198039 10.583005 7.615773 6.244998 4.242641 3.316625 # 15 10.630146 9.746794 6.855655 7.745967 6.403124 6.633250 5.000000 # 16 11.489125 10.862780 8.485281 10.049876 9.591663 9.746794 8.944272 # 17 10.770330 9.899495 8.246211 9.219544 9.273618 9.643651 9.380832 # 18 9.695360 8.602325 7.874008 8.774964 9.380832 9.949874 9.797959 # 19 11.313708 9.486833 9.273618 11.000000 11.313708 12.041595 11.832160 # 20 13.114877 11.489125 12.727922 13.527749 14.422205 15.000000 14.966630 # 21 14.142136 13.266499 14.142136 14.662878 15.684387 16.031220 16.062378 # 22 16.370706 15.033296 16.248077 16.941074 17.832555 18.303005 18.165902 # 23 2.449490 6.324555 6.633250 5.744563 8.366600 8.774964 10.392305 # 24 6.403124 7.549834 8.544004 8.124038 10.148892 10.583005 11.789826 # 25 4.358899 7.280110 7.000000 6.782330 9.110434 9.486833 10.723805 # 26 10.723805 10.148892 11.445523 11.747340 13.000000 13.490738 13.892444 # 27 15.066519 13.892444 15.264338 15.748016 16.703293 17.146428 17.117243 # 28 16.248077 14.899664 16.309506 16.822604 17.720045 18.193405 18.220867 # 29 18.681542 17.521415 18.303005 18.761663 19.416488 19.646883 19.313208 # 30 20.174241 19.467922 20.371549 20.736441 21.610183 21.863211 21.931712 # 15 16 17 18 19 20 21 # 2 # 3 # 4 # 5 # 6 # 7 # 8 # 9 # 10 # 11 # 12 # 13 # 14 # 15 # 16 5.916080 # 17 8.185353 6.164414 # 18 8.660254 7.615773 3.464102 # 19 10.630146 9.165151 6.633250 6.000000 # 20 13.674794 12.649111 9.380832 7.615773 6.324555 # 21 15.066519 13.928388 11.224972 9.380832 7.874008 3.741657 # 22 16.763055 15.556349 13.416408 11.489125 10.583005 6.480741 4.000000 # 23 10.630146 11.489125 10.295630 9.055385 10.488088 11.916375 13.114877 # 24 11.747340 12.449900 10.723805 9.110434 9.746794 10.148892 11.269428 # 25 10.583005 11.180340 10.148892 8.660254 9.539392 10.630146 11.618950 # 26 13.190906 13.076697 10.816654 8.774964 7.810250 5.385165 5.385165 # 27 16.062378 15.329710 13.304135 11.532563 10.246951 6.244998 4.582576 # 28 17.175564 16.370706 14.352700 12.489996 11.489125 7.745967 6.000000 # 29 18.330303 17.058722 14.662878 13.076697 12.767145 9.000000 7.000000 # 30 21.023796 19.621417 17.117243 15.652476 15.000000 11.180340 9.000000 # 22 23 24 25 26 27 28 # 2 # 3 # 4 # 5 # 6 # 7 # 8 # 9 # 10 # 11 # 12 # 13 # 14 # 15 # 16 # 17 # 18 # 19 # 20 # 21 # 22 # 23 15.362291 # 24 13.304135 4.358899 # 25 13.964240 3.000000 3.741657 # 26 7.549834 9.433981 7.071068 8.000000 # 27 5.000000 14.035669 11.916375 12.649111 5.291503 # 28 5.099020 15.231546 13.076697 13.964240 7.000000 3.872983 # 29 5.567764 17.804494 15.874508 16.431677 9.899495 6.633250 5.567764 # 30 8.185353 19.416488 17.832555 18.055470 11.916375 9.165151 7.280110 # 29 # 2 # 3 # 4 # 5 # 6 # 7 # 8 # 9 # 10 # 11 # 12 # 13 # 14 # 15 # 16 # 17 # 18 # 19 # 20 # 21 # 22 # 23 # 24 # 25 # 26 # 27 # 28 # 29 # 30 6.480741 ``` --- # (Dis)similarity measures For simplicity, we have done this without specifying arguments. Run `dist(spe)` from your end, note what you observe and see what the commands below show you: ```r class(dist(spe)) ``` ```r str(dist(spe)) ``` ```r as.matrix(dist(spe)) ``` ```r dim(as.matrix(dist(spe))) ``` ??? If possible, do this part with the participants. Show them how a dist class object is structured. Highlight that it is a lower-triangular matrix, where the distances between the same objects are hidden. --- # (Dis)similarity measures There are three groups of distance coefficients: _metrics_, _..._ , _..._ . The first group consists of *metrics*, and its coefficients satisfy the following properties: .pull-left[ 1. minimum 0: if species `\(a\)` is equal to species `\(b\)`, then `\(D(a,b)=0\)`; 2. positiveness: if `\(a \neq b\)`, then `\(D(a,b) > 0\)`; 3. symmetry: `\(D(a,b) = D(b,a)\)`; 4. triangle inequality: `\(D(a,b) + D(b,c) \geq D(a,c)\)`. The sum of two sides of a triangle drawn in the Euclidean space is equal or greater than the third side. ] .pull-right[ We can spot all these properties below: ```r as.matrix(dist(spe))[1:3, 1:3] # 1 2 3 # 1 0.000000 5.385165 7.416198 # 2 5.385165 0.000000 2.449490 # 3 7.416198 2.449490 0.000000 ``` ] ??? Highlight the triangular nature of the `dist` class, that it can be converted to a matrix, and the dimensions (noting that it has the same number of species). --- # (Dis)similarity measures There are three groups of distance coefficients: _metrics_, _semimetrics_, _..._ . The second group consists of *semimetrics*, and they violate the _triangle inequality_ property: .pull-left[ 1. minimum 0: if species `\(a\)` is equal to species `\(b\)`, then `\(D(a,b)=0\)`; 2. positiveness: if `\(a \neq b\)`, then `\(D(a,b) > 0\)`; 3. symmetry: `\(D(a,b) = D(b,a)\)`; 4. ~~triangle inequality~~: `\({D(a,b) + D(b,c) \geq or < D(a,c)}\)`. The sum of two sides of a triangle drawn in the Euclidean space is _not_ equal or greater than the third side. ] .pull-right[ ] ??? Highlight the triangular nature of the `dist` class, that it can be converted to a matrix, and the dimensions (noting that it has the same number of species). --- # (Dis)similarity measures There are three groups of distance coefficients: _metrics_, _semimetrics_, _nonmetrics_. The third group consists of *nonmetrics*, and they violate the _positiveness_ property of metric distances: .pull-left[ 1. minimum 0: if species `\(a\)` is equal to species `\(b\)`, then `\(D(a,b)=0\)`; 2. ~~positiveness:~~ if `\(a \neq b\)`, then `\(D(a,b) > or < 0\)`; 3. symmetry: `\(D(a,b) = D(b,a)\)`; 4. ~~triangle inequality~~: `\({D(a,b) + D(b,c) \geq or < D(a,c)}\)`. The sum of two sides of a triangle drawn in the Euclidean space is _not_ equal or greater than the third side. ] .pull-right[ ] ??? Highlight the triangular nature of the `dist` class, that it can be converted to a matrix, and the dimensions (noting that it has the same number of species). --- # (Dis)similarity measures: Euclidean distances The most common metric distance measure is the _Euclidean distance_. .pull-left4[ It is computed using the Pythagorean formula: `$$D_{1} (x_1,x_2) = \sqrt{\sum_{j=1}^p(y_{1j} - y_{2j})^2}$$` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-2-1.png" width="360" style="display: block; margin: auto;" /> ] .pull-right4[ Using `stats::dist()`, we can compute it with: ```r spe.D.Euclid <- dist(x = spe, method = "euclidean") ``` And, we can test whether a distance is Euclidean using: ```r is.euclid(spe.D.Euclid) # [1] TRUE ``` ] ??? The figure on the side needs to be finished. It is supposed to represent the Euclidean distance between sites x1 and x2 in the 2D Cartesian space. The axes are the descriptors y1 and y2, respectively. The arrow on the bottom is the distance between the position of y21 and y11. You can use it to recall the Pythagorean theorem that everyone learns in high school, where the length of the hypotenuse and a and b denote the two lengths of the legs of a right triangle. `method = "euclidean"` is the default parameter --- # Challenge #1 ![:cube]() **Your turn!** Using the `dist()` function, compute the Euclidean distance matrix `\(D_{hmm}\)` for the species abundances by site matrix `\(Y_{hmm}\)` below: .pull-left[ ```r Y.hmm <- data.frame( y1 = c(0, 0, 1), y2 = c(4, 1, 0), y3 = c(8, 1, 0)) ``` ] .pull-right[ | Sites | `\(y_1\)` | `\(y_2\)` | `\(y_3\)` | |:----: | :----:| :---: | :---: | | `\(s_1\)` | 0 | 4 | 8 | | `\(s_2\)` | 0 | 1 | 1 | | `\(s_3\)` | 1 | 0 | 0 | ] After this, look into the numbers, think critically about them, and be ready to chat! (_5 to 10 minutes_) -- .pull-left[ **Solution:** ```r Y.hmm.DistEu <- dist(x = Y.hmm, method = "euclidean") as.matrix(Y.hmm.DistEu) ``` ] .pull-right[ **Output**: ``` # 1 2 3 # 1 0.000000 7.615773 9.000000 # 2 7.615773 0.000000 1.732051 # 3 9.000000 1.732051 0.000000 ``` ] <br> .center[ Tip: Look into the composition and the distances between sites `\(s_2\)` and `\(s_3\)` and between `\(s_1\)` and `\(s_2\)`. ] ??? --- ## Challenge #1 ![:cube]() .pull-left[ **Solution:** ```r Y.hmm # y1 y2 y3 # 1 0 4 8 # 2 0 1 1 # 3 1 0 0 ``` ] .pull-right[ **Output**: ```r as.matrix(Y.hmm.DistEu) # 1 2 3 # 1 0.000000 7.615773 9.000000 # 2 7.615773 0.000000 1.732051 # 3 9.000000 1.732051 0.000000 ``` ] The Euclidean distance between sites `\(s_2\)` and `\(s_3\)`, which have no species in common, is smaller than the distance between `\(s_1\)` and `\(s_2\)`, which share species `\(y_2\)` and `\(y_3\)` (!). From an ecological perspective, _this is a problematic_ assessment of the relationship among sites. -- This issue is known as the **double-zero problem**, _i.e._ double zeroes are treated in the same way as double presences, so that the double zeros shrink the distance between two sites. Euclidean distances ( `\(D_1\)` ) should thus _not_ be used to compare sites based on species abundances. --- # (Dis)similarity measures: Chord distances Orlóci (1967) proposed the _Chord distance_ to analyse community composition. .pull-left[ It consists of: 1\. Normalizing the data, _i.e._ scaling site vectors to length 1 by dividing species abundances in a given sample by the square-rooted sum of square abundances in all samples as `$$y'_{Uj}=y_{Uj}/\sum^s_{j=1}{y^2_{Uj}}$$` 2\. Calculating the Euclidean distances on this normalized data: `$$D_{3} (x_1,x_2) = \sqrt{\sum_{j=1}^p(y'_{1j} - y'_{2j})^2}$$` ] .pull-right[ We can use `vegan::vegdist()` for this one: ```r spe.D.Ch <- vegdist(spe, method = "chord") as.matrix(spe.D.Ch)[1:3, 1:3] # 1 2 3 # 1 0.0000000 0.7653669 0.9235374 # 2 0.7653669 0.0000000 0.2309609 # 3 0.9235374 0.2309609 0.0000000 ``` When two sites share the same species in the same proportions of the number of individuals the value of `\(D_3\)` is `\(0\)`, and when no species are shared, its value is `\(\sqrt{2}\)`. ] ??? `method = "euclidean"` is the default parametre --- # (Dis)similarity measures: Chord distances There are many other metric distances of interest, which we can use: What happens if we compute Chord distances in the same site-by-species matrix `\(Y_{hmm}\)`? .pull-left[ ```r Y.hmm # y1 y2 y3 # 1 0 4 8 # 2 0 1 1 # 3 1 0 0 ``` ```r as.matrix(Y.hmm.DistEu) # 1 2 3 # 1 0.000000 7.615773 9.000000 # 2 7.615773 0.000000 1.732051 # 3 9.000000 1.732051 0.000000 ``` ] .pull-right[ ```r Y.hmm.DistCh <- vegdist(Y.hmm, method = "chord") ``` ```r as.matrix(Y.hmm.DistCh) # 1 2 3 # 1 0.0000000 0.3203645 1.414214 # 2 0.3203645 0.0000000 1.414214 # 3 1.4142136 1.4142136 0.000000 ``` ] -- Adding any number of double zeroes to a pair of sites does _not_ change the value of `\(D_3\)`. Hence, _Chord distances_ can be used to compare sites described by species abundances! ??? `method = "euclidean"` is the default parameter --- # (Dis)similarity measures: Jaccard's Another popular association coefficient is the _Jaccard similarity coefficient_ (1900). It is only appropriate for **binary data**, and its distance coefficient is defined with the size of the intersection divided by the size of the union of the sample sets. `$$D_{7}(x_1,x_2) = 1 - \frac{\vert x_1 \cap x_2 \vert}{\vert x_1 \cup x_2 \vert} = 1 - \frac{\vert x_1 \cap x_2 \vert}{\vert x_1 \vert + \vert x_2 \vert - \vert x_1 \cap x_2 \vert} = 1-\frac{a}{a+b+c}$$` where, - `\(a\)` is the number of species shared between `\(x_1\)` and `\(x_2\)` that are coded `\(1\)`; - `\(b\)` is the number of occurrences where `\(x_1\)` and `\(x_2\)` are known to be different; - `\(c\)` is the number of common absences between `\(x_1\)` and `\(x_2\)`, _i.e._ both `\(0\)`. -- <br> .pull-left[ For example, for sites `\(x_1\)` and `\(x_2\)`: | `\(x_1,x_2\)` | `\(y_1\)` | `\(y_2\)` | `\(y_3\)` | `\(y_4\)` | `\(y_5\)` | |:----: | :----:| :---: | :---: | :---: | :---: | | `\(x_1\)` | 0 | 1 | 0 | 1 | 0 | | `\(x_2\)` | 0 | 1 | 1 | 1 | 1 | ] .pull-right[ .pull-left[ So: - `\(a\)` = 1 + 1 = 2 - `\(b\)` = 1 + 1 = 2 - `\(c\)` = 1 ] .pull-right[ <br> `\(D_{7}(x_1,x_2) =\)` `\(1-\frac{2}{2+2+1}=\)` `\(0.6\)` ] ] --- # (Dis)similarity measures: Sørensen's All parameters in _Jaccard's similarity coefficient_ have equal weights. `$$D_{7}(x_1,x_2)=1-\frac{a}{a+b+c}$$` However, you may want to consider that a presence of a species is more informative than its absence. The distance corresponding to _Sørensen's similarity coefficient_ (1948) gives weight to double presences: `$$D_{13}(x_1,x_2)=1-\frac{2a}{2a+b+c}=\frac{b+c}{2a+b+c}$$` where, - `\(a\)` is the number of species shared between `\(x_1\)` and `\(x_2\)` that are coded `\(1\)`; - `\(b\)` is the number of occurrences where `\(x_1\)` and `\(x_2\)` are known to be different; - `\(c\)` is the number of common absences between `\(x_1\)` and `\(x_2\)`, _i.e._ both `\(0\)`. --- # (Dis)similarity measures: Bray-Curtis The _Bray-Curtis dissimilarity coefficient_ is a modified version of the Sørensen's index and allows for species abundances: .pull-left[ `$$D_{14}(x_1,x_2)=\frac{\sum{\vert y_{1j}-y_{2j}\vert}}{\sum{( y_{1j}+y_{2j})}}=$$` `$$D_{14}(x_1,x_2)=1 - \frac{2W}{A+B}$$` where, - `\(W\)` is the sum of the lowest abundances in each species found between sites `\(x_1\)` and `\(x_2\)`; - `\(A\)` is the sum of all abundances in `\(x_1\)`; and, - `\(B\)` is the sum of all abundances in `\(x_2\)`. ] -- .pull-right[ For example, for sites `\(x_1\)` and `\(x_2\)`: | `\(x_1,x_2\)` | `\(y_1\)` | `\(y_2\)` | `\(y_3\)` | `\(y_4\)` | `\(y_5\)` | |:----: | :----:| :---: | :---: | :---: | :---: | | `\(x_1\)` | _2_ | _1_ | _0_ | 5 | 2 | | `\(x_2\)` | 5 | _1_ | 3 | _1_ | 1 | <br> So: - `\(W = 2 + 1 + 0 + 1 + 1 = 5\)` - `\(A = 2 + 1 + 0 + 5 + 0 = 8\)` - `\(B = 5 + 1 + 3 + 1 + 2 = 12\)` `$$D_{14}(x_1,x_2) = 1-\frac{2 \times 5}{8+12}=$$` `$$D_{14}(x_1,x_2) = 0.5$$` ] --- ##### (Dis)similarity measures: Jaccard's and Sørensen's coefficients In `R`, you can use use the `vegan::vegdist()` function to calculate the Jaccard's and Sørensen's indices: .pull-left[ ```r spe.D.Jac <- vegdist(spe, method = "jaccard", binary = TRUE) ``` ] .pull-right[ ```r spe.D.Sor <- vegdist(spe, method = "bray", binary = TRUE) ``` ] <br> > Because both Jaccard's and Sørensen's are only appropriate for presence-absence data, you must binary-transform abundance data using `binary = TRUE` in `vegdist()`. <br> ##### Bray-Curtis dissimilarity coefficient To calculate the _Bray-Curtis dissimilarity coefficient_, which can account for abundances, you need to set `binary = FALSE`. ```r spe.db.pa <- vegdist(spe, method = "bray", binary = FALSE) spe.db <- as.matrix(spe.db.pa) ``` --- # (Dis)similarity measures: Mahalanobis distance In many datasets, variables are correlated, and using a distance metric that ignores this can lead to misleading results. The Mahalanobis distance takes into account the correlation between variables in the data. `$$D_{Mahal}^2 = (x - \mu)^T \Sigma^{-1} (x - \mu)$$` `\(D\)` represents the Mahalanobis distance, `\(x\)` is the point you're measuring the distance from, `\(\mu\)` is the mean vector of the distribution you're measuring against, and `\(\Sigma\)` is the covariance matrix of the distribution. It is also robust to changes in the distribution of the data (it does not assume normality). -- In `R`, we can compute it as follows: ```r # Set the seed for reproducibility set.seed(123) # Generate random data from a multivariate normal distribution data <- data.frame(x = rnorm(100, mean = 10, sd = 2), y = rnorm(100, mean = 5, sd = 1), z = rnorm(100, mean = 20, sd = 4)) # Calculate the Mahalanobis distance mahalanobis_dist <- mahalanobis(data, center = colMeans(data), cov = cov(data)) ``` --- # (Dis)similarity measures: representation We can create graphical depictions of association matrices using the `coldiss()` function: ```r coldiss(spe.D.Jac) ``` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-20-1.png" width="1152" style="display: block; margin: auto;" /> --- # Transformations for community composition data Communities sampled over homogeneous or short environmental conditions can have species compositions with few zeroes, so that Euclidean distances could be enough to characterize them. Nevertheless, this is rarely the reality. Species may be highly frequent when conditions are favourable, or may be absent from many sites. Sometimes, this skewness may introduce spurious problems to our analyses. We may then have to transform our composition data to appropriately analyze it. -- In `R`, we can rely on `vegan::decostand()` for many types of transformations. Take a look into the help of this function to see the available options: ``` ?decostand() ``` Let us see some of them. --- ## Transformations: presence-absence We can change the argument `method` to `"pa"` to transform our abundance data into presence-absence data: .center[ If `\(y_{ij} \geq 1\)`, then, `\(y'_{ij} = 1\)`. ] .pull-left[ Let us recall our `spe` data set: ```r spe[1:6, 1:6] # CHA TRU VAI LOC OMB BLA # 1 0 3 0 0 0 0 # 2 0 5 4 3 0 0 # 3 0 5 5 5 0 0 # 4 0 4 5 5 0 0 # 5 0 2 3 2 0 0 # 6 0 3 4 5 0 0 ``` ] Let us transform `spe` abundances to presence-absences: ```r spe.pa <- decostand(spe, method = "pa") spe.pa[1:6, 1:6] # CHA TRU VAI LOC OMB BLA # 1 0 1 0 0 0 0 # 2 0 1 1 1 0 0 # 3 0 1 1 1 0 0 # 4 0 1 1 1 0 0 # 5 0 1 1 1 0 0 # 6 0 1 1 1 0 0 ``` --- ## Transformations: species profiles Sometimes, one wants to remove the effects of highly abundant units. We can transform the data into profiles of relative species abundances through the following equation: `$$y'_{ij} = \frac{y_{ij}}{y_{i+}}$$` .pull-left[ Let us recall our `spe` dataset: ```r spe[1:5, 1:6] # CHA TRU VAI LOC OMB BLA # 1 0 3 0 0 0 0 # 2 0 5 4 3 0 0 # 3 0 5 5 5 0 0 # 4 0 4 5 5 0 0 # 5 0 2 3 2 0 0 ``` ] In `decostand()`: ```r spe.total <- decostand(spe, method = "total") spe.total[1:5, 1:6] # CHA TRU VAI LOC OMB BLA # 1 0 1.00000000 0.00000000 0.00000000 0 0 # 2 0 0.41666667 0.33333333 0.25000000 0 0 # 3 0 0.31250000 0.31250000 0.31250000 0 0 # 4 0 0.19047619 0.23809524 0.23809524 0 0 # 5 0 0.05882353 0.08823529 0.05882353 0 0 ``` ??? yi+ is used to indicate the sample total count over all j=1,…,m species, for the ith sample. --- ## Transformations: Hellinger We can take the square-root of the _species profile transformation_ and obtain the _Hellinger transformation_, which has very good mathematical properties and allows us to reduce the effects of `\(y_{ij}\)` values that are extremely large. `$$y'_{ij} = \sqrt{\frac{y_{ij}}{y_{i+}}}$$` .pull-left[ Let us recall our `spe` dataset: ```r spe[1:5, 1:6] # CHA TRU VAI LOC OMB BLA # 1 0 3 0 0 0 0 # 2 0 5 4 3 0 0 # 3 0 5 5 5 0 0 # 4 0 4 5 5 0 0 # 5 0 2 3 2 0 0 ``` ] In `decostand()`: ```r spe.total <- decostand(spe, method = "hellinger") spe.total[1:5, 1:6] # CHA TRU VAI LOC OMB BLA # 1 0 1.0000000 0.0000000 0.0000000 0 0 # 2 0 0.6454972 0.5773503 0.5000000 0 0 # 3 0 0.5590170 0.5590170 0.5590170 0 0 # 4 0 0.4364358 0.4879500 0.4879500 0 0 # 5 0 0.2425356 0.2970443 0.2425356 0 0 ``` --- ## Transformations: Standardization Standardizing environmental variables is crucial as you cannot compare the effects of variables with different units: ```r ## ?decostand env.z <- decostand(env, method = "standardize") ``` <Br> This centres and scales the variables to make your downstream analysis more appropriate: ```r apply(env.z, 2, mean) # das alt pen deb pH # 1.000429e-16 1.814232e-18 -1.659010e-17 1.233099e-17 -4.096709e-15 # dur pho nit amm oxy # 3.348595e-16 1.327063e-17 -8.925898e-17 -4.289646e-17 -2.886092e-16 # dbo # 7.656545e-17 apply(env.z, 2, sd) # das alt pen deb pH dur pho nit amm oxy dbo # 1 1 1 1 1 1 1 1 1 1 1 ``` .center[*More on this later!*] --- exclude: true # Total Species Richness Visualize how many species are present at each site: ```r site.pre <- rowSums(spe > 0) barplot(site.pre, main = "Species richness", xlab = "Sites", ylab = "Number of species", col = "grey ", las = 1) ``` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-29-1.png" width="720" style="display: block; margin: auto;" /> --- exclude: true # Visualization of distance matrices ```r # the code for the coldiss() function is in the workshop script. coldiss(spe.db.pa) ``` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-31-1.png" width="720" style="display: block; margin: auto;" /> --- class: inverse, middle, center # Clustering --- # Clustering One application of association matrices is clustering. Clustering highlights structures in the data by partitioning either the objects or the descriptors. One goal of ecologists could be to divide a set of sites into groups with respect to their environmental conditions or their community composition. Its results can be represented as dendrograms (tree-like diagrams), which describe how closely observations are. <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-32-1.png" width="720" style="display: block; margin: auto;" /> ??? .center[] --- # Hierarchical clustering methods Clustering algorithms generally build on this series of steps: 1. calculate an association matrix with all pair-wise similarities among all objects; 2. Join together pairs of objects that are most similar (or dissimilar); 3. Recompute the similarity matrix for that cluster _versus_ all remaining objects; 4. Repeat steps 2 and 3 until all objects are joined. -- <br> From the many existing hierarchical clustering algorithms, we will explore: 1. Single linkage agglomerative clustering; 2. Complete linkage, agglomerative clustering; 3. Ward's minimum variance clustering. -- <br> In `R`, we will use the functions `hclust()` and `agnes()` to build our dendrograms. ??? In single linkage agglomerative clustering (also called nearest neighbour sorting), the objects at the closest distances agglomerate. which often generates long thin clusters or chains of objects. Conversely, in complete linkage agglomerative clustering, an object agglomerates to a group only when linked to the furthest element of the group, which in turn links it to all members of that group. It will form many small separate groups, and is more appropriate to look for contrasts, discontinuities in the data. Ward's minimum variance clustering differ from these two methods in that it clusters objects into groups using the criterion of least squares (similar to linear models). Its dendogram shows squared distances by default. To compare with other methods, calculate the sqaure root of the distances first. --- # Complete linkage clustering .pull-left4[  ] .pull-right4[ - The objects divided into small groups (1-2, 3-4, 5) - Connect small groups using the largest distance between their elements * (1-3=0.15, 2-4=0.35, 2-3=0.6, select 0.6 to connect group 1-2 and 3-4)  ] --- # Comparison Create a distance matrix from Hellinger transformed Doubs river data and compute the single linkage clustering: ```r spe.dhe1 <- vegdist(spe.hel, method = "euclidean") spe.dhe1.single <- hclust(spe.dhe1, method = "single") plot(spe.dhe1.single) ``` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-33-1.png" width="504" style="display: block; margin: auto;" /> --- # Comparison ```r spe.dhe1 <- vegdist(spe.hel, method = "euclidean") spe.dhe1.complete <- hclust(spe.dhe1, method = "complete") plot(spe.dhe1.single, main="Single linkage clustering", hang =-1) plot(spe.dhe1.complete, main="Complete linkage clustering", hang=-1) ``` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-34-1.png" width="1080" style="display: block; margin: auto;" /> .pull-left[ **Single linkage:** Chains of objects occur (e.g. 19,29,30,26) ] .pull-right[ **Complete linkage:** Contrasted groups are formed of objects occur ] ???  --- # Ward's minimum variance method .pull-left[ - Uses the criterion of least squares to cluster objects into groups - At each step, the pair of clusters merging is the one leading to the minimum increase in total within-group sum of squares - Clusters generated using this method tend to be more spherical and to contain similar number of objects ] --- # Ward's method Compute the Ward's minimum variance clustering and plot the dendrogram by using the square root of the distances: ```r spe.dhel.ward <- hclust(spe.dhe1, method = "ward.D2") spe.dhel.ward$height <- sqrt(spe.dhel.ward$height) plot(spe.dhel.ward, hang = -1) # hang = -1 aligns objects at 0 ``` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-35-1.png" width="360" style="display: block; margin: auto;" /> > Ward's clustering algorithm can only be applied with Euclidean distances. Bray-Curtis distances are not Euclidean, so they must be square-rooted first before used with Ward's algorithm. --- # Important questions to address - Choosing the 'appropriate' method depends on your objective; - Do you want to highlight gradients or contrasts? - This is *not* an statistical method and you may need to rely on complementary methods to support your hypotheses. However, clustering allows us to: - Determine the optimal number of interpretable clusters; - Compute clustering statistics; - Combine clustering to ordination to distinguish groups of sites. --- ## Now, what? While **cluster analysis** looks for *discontinuities* in a dataset, **ordination** extracts the main trends in the form of continuous axes. From now, we will look into four types of **unconstrained ordination methods**... -- .right[...**wait**, what do we mean about **unconstrained ordinations**? *Anyone*?] -- <br> .center[*If no one speaks out, choose a "volunteer", presenter!*] -- <br> **Unconstrained ordinations** assess relationships within a single set of variables. *No attempt* is made to define the relationship between a set of independent variables and one or more dependent variables. -- In other words, the interpretation of potential effects of other factors that generated observed patterns can only be made indirectly, because those factors are *not* explicitly included in the analyses. -- Here, we will explore: .center[ .pull-left[ **P**rincipal **C**omponent **A**nalysis **P**rincipal **Co**ordinate **A**nalysis ] .pull-right[ **C**orrespondence **A**nalysis **N**on-**M**etric Multi**D**imensional **S**caling ] ] --- ### But first, let us *recap*... We already understand the meaning of **variance** and **mean**, and how to calculate them: .pull-left[ `$$\sigma_x^2 = \frac{\sum_{i=1}^{n}(x_i - \mu)^2} {n}$$` ] .pull-right[ `$$\mu_x = \frac{1}{n} \sum_{i=i}^{n} x_{i}$$` ] These are very useful to understand the *centre* and the *dispersion* of a given variable or dimension. Nevertheless, we are often interested **in more than one dimension**, and want to measure how much of each dimension vary from the mean with *respect to each other*. -- **Covariance** is such a measure, which can depict how **two dimensions co-vary**: .pull-left[ `$$var_x = \sigma_x^2 = \frac{\sum_{i=1}^{n}(x_i - \mu)^2} {n}$$` ] .pull-right[ `$$cov_{x,y}=\frac{\sum_{i=1}^{N}(x_{i}-\bar{x})(y_{i}-\bar{y})}{N-1}$$` ] --- ### But first, let us *recap*... Intuitively, we can measure the **covariance** between more than two variables. Let us say, between the variables `\(x\)`, `\(y\)`, and `\(z\)`: .pull-left[ `$$cov_{x,y}=\frac{\sum_{i=1}^{N}(x_{i}-\bar{x})(y_{i}-\bar{y})}{N-1}$$` ] .pull-right[ `$$cov_{x,z}=\frac{\sum_{i=1}^{N}(x_{i}-\bar{x})(z_{i}-\bar{z})}{N-1}$$` ] `$$cov_{z,y}=\frac{\sum_{i=1}^{N}(z_{i}-\bar{z})(y_{i}-\bar{y})}{N-1}$$` We can represent these calculations in a **covariance matrix**: `$${C(x, y, z)} = \left[ \begin{array}{ccc} cov_{x,x} & cov_{y,x} & cov_{z,x} \\ cov_{x,y} & cov_{y,y} & cov_{z,y} \\ cov_{x,z} & cov_{y,z} & cov_{z,z} \end{array} \right]$$` -- .center[**QUIZ TIME** *What are the diagonals?* *And, what happens if variables are independent?* ] --- #### Still *recapping*... .center[***What are the diagonals?***] If, `\(cov_{x,y}=\frac{\sum_{i=1}^{N}(x_{i}-\bar{x})(y_{i}-\bar{y})}{N-1}\)`, then: `$$cov_{x,x}=\frac{\sum_{i=1}^{N}(x_{i}-\bar{x})(x_{i}-\bar{x})}{N} = \frac{\sum_{i=1}^{n}(x_i - \bar{x})^2} {N} = var_x$$` -- So, that: `$${C(x, y, z)} = \left[ \begin{array}{ccc} cov_{x,x} & cov_{y,x} & cov_{z,x} \\ cov_{x,y} & cov_{y,y} & cov_{z,y} \\ cov_{x,z} & cov_{y,z} & cov_{z,z} \end{array} \right] = \left[ \begin{array}{ccc} var_{x} & cov_{y,x} & cov_{z,x} \\ cov_{x,y} & var_{y} & cov_{z,y} \\ cov_{x,z} & cov_{y,z} & var_{z} \end{array} \right]$$` <br> .center[The covariance of a variable with itself is its *variance*!] --- #### Still *recapping*... .center[***What happens if the variables are independent?***] .pull-left[ ```r x <- rnorm(5000, mean = 0, sd = 1) y <- rnorm(5000, mean = 0, sd = 1) z <- rnorm(5000, mean = 0, sd = 1) xyz <- data.frame(x, y, z) GGally::ggpairs(xyz) ``` <img src="workshop09-pres-en_files/figure-html/xyz-norm-1.png" width="360" style="display: block; margin: auto;" /> ] -- .pull-right[ ```r cov(xyz) ``` ``` # x y z # x 0.98469 -0.00787 0.00880 # y -0.00787 1.00997 0.00286 # z 0.00880 0.00286 1.01408 ``` If variables are perfectly independent (or uncorrelated), the covariance matrix `\(C(x, y, z)\)` is: `$${C(x, y, z)} = \left[ \begin{array}{ccc} var_{x} & 0 & 0 \\ 0 & var_{y} & 0 \\ 0 & 0 & var_{z} \end{array} \right]$$` *i*.*e*. a covariance closer to `\(1\)` means that variables are *colinear*. And, here, `\(var_{x} = var_{y} = var_{z} = 1\)`. ] --- ## Linear transformations We are often interested in observing variables in *different ways*. In this process, we create a new variable, let us say `\(x_{new}\)`, by multiplying and/or adding the values of the original variable `\(x\)` by constants. For instance: -- .pull-left[ We can transform a variable of distances measured in kilometres `\(d_{km}\)` into miles, as: `$$d_{mi} = 0.62 \times d_{km}$$` ] .pull-right[ We can also transform Fahrenheit degrees to Celsius degrees as: `$$T_{C} = 0.5556\times T_{Fahr} - 17.778$$` ] These are examples of **linear transformations**: the transformed variables are linearly related to the original variables and the shapes of the distribution are not changed. -- Two types of transformations are very important for us: .pull-left[ **Centering**, which subtracts the values of a predictor by the mean: `$$x' = x_i - \bar{x}$$` ] .pull-right[ **Scaling**, which divides the predictor variables by their standard deviation: `$$x'' = \frac{x_i}{\sigma_x}$$` ] ??? Centering is basically a technique where the mean of independent variables is subtracted from all the values. It means all independent variables have zero mean. Scaling is similar to centering. Predictor variables are divided by their standard deviation. The presenter should mention here that centering brings the mean to zero and scaling brings the standard deviation to one-unit. They also should mention that variables become comparable when scaling, as their unit is lost. --- ## Eigendecomposition **Square matrices**, such as the **covariance matrix**, can be decomposed into *Eigenvalues* and *Eigenvectors*. For a square matrix, `\(A_{n \times n}\)`, a vector `\(v\)` is an *Eigenvector* of `\(A\)`, if there is a *scalar*, `\(\lambda\)`, for which: .center[ `\(A_{n \times n} v_{n \times 1} = \lambda v_{n \times 1}\)`, or `\(\left(\begin{matrix}a_{11}&\cdots&a_{1n}\\\vdots&\ddots&\vdots\\a_{1n}&\cdots&a_{nn}\\\end{matrix}\right)\left(\begin{matrix}v_1\\\vdots\\v_n\\\end{matrix}\right)=\lambda\left(\begin{matrix}v_1\\\vdots\\v_n\\\end{matrix}\right)\)` ] with the value of `\(\lambda\)` being the corresponding *Eigenvalue*. -- In other words, the matrix `\(A\)` effectively *stretches* the Eigenvector `\(v\)` by the amount specified by the Eigenvalue (*scalar*) `\(\lambda\)`. An *Eigenvector* is a vector whose direction remains unchanged when a **linear transformation** is applied to it. <br> .center[Wait! What do we mean by *unchanged direction*?] --- ## Eigendecomposition .center[Wait! What do we mean by *unchanged direction*?] <br> Let us represent this with this simple example. We can transform a square into a parallelogram using a single-axis **shear transformation**. -- .pull-left[ Let `\(S\)` be the square with vertices `\((0,0),\,(1,0),\,(1,1),\,(1,0)\)` that will be shear-transformed to the `\(P\)` parallelogram with vertices `\((0,0),\,(1,0),\,(1,1.57),\,(1,0.57)\)`. We can see that after the linear transformation, the purple arrow has not changed direction, *i.e.* it is an *Eigenvector* of `\(S\)`. On the other hand, the red arrow changed direction, and thus is *not* an *Eigenvector* of `\(S\)`. ] .pull-right[ <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-36-1.png" width="360" style="display: block; margin: auto;" /> ] --- ## Eigendecomposition: implications .center[ *Keep up with the algebra torture!* ] ### Orthogonality A *fabulous* and *simple* property of *symmetric* matrices that we can explain here! .pull-left[ Let us assume that `\(x\)` is an eigenvector of `\(A\)` corresponding to the eigenvalue `\(λ_1\)` and `\(y\)` an eigenvector of `\(A\)` corresponding to the eigenvalue `\(λ_2\)`, with `\(λ_1≠λ_2\)`. `$$Ax=\lambda_1x \\ Ay=\lambda_2y$$` Let us multiply each one by the other transposed *Eigenvector*. `$$y^{\intercal}Ax=\lambda_1y^{\intercal}x \\ x^{\intercal}A^{\intercal}y=\lambda_2x^{\intercal}y$$` ] -- .pull-right[ Now subtract the second equation from the first one and use the commutativity of the scalar product: `\(y^{\intercal}Ax-x^{\intercal}A^{\intercal}y=\lambda_1y^{\intercal}x - \lambda_2x^{\intercal}y \\ 0 = (\lambda_1 - \lambda_2)y^{\intercal}x\)` Because we know that `\(\lambda_1-\lambda_2\neq 0\)`, then `\(y^{\intercal}x = 0\)`, *i*.*e*., `\(\mathbf{x}\perp\mathbf{y}\)`, *i*.*e*. are **orthogonal**! <br> <br> *So, what does the Eigendecomposition of a variance-covariance matrix tell us?* ] ??? Hi, Presenter. The explanation of this part is very useful and quite simple, so everyone can understand what orthogonality is. It is a matter of simple equation operations and subtractions. --- ## Eigendecomposition: implications .center[ *Keep up with the algebra torture!* ] ### Maximization .pull-left[ If `\(v_i' v_i = 1\)`, then `\(Av_i=\lambda_iv_i\)` can be written as: `$$v_i' A v_i = \lambda_i$$` In fact, `\(v' A v\)` is the variance of a linear combination with weights in `\(v\)`, *i*.*e*. `\(\text{Var}(v_i'\,A)=v_i'\,\text{Var}(A)\,v_i\)`. *Hence, we can connect the dots!* ] -- .pull-right[ Remember that the *Eigenvalues* in our *variance-covariance matrix* `\(A\)` are directly related to the variance! Thus, to find a vector `\(v\)` that maximizes the variance, `\(v' A v\)`, all we must do is to choose the *Eigenvector* corresponding to the largest *Eigenvalue* `\(\lambda_i\)`! So that the maximum variance is `\(\lambda_1\)`! ] -- The *explained variance* of each *Eigenvector* obeys the order: `\(\lambda_1 > \lambda_2 > \dots > \lambda_k\)`. This allows us to condense a larger number of original variables into a smaller set of selected vectors with minimal loss of information (i.e. ***dimensionallity reduction***). --- # Unconstrained ordination methods This is a good startpoint to set us in the direction of the **unconstrained ordination methods** we will study today! .pull-left[ They allow us to: - Assess relationships *within* a set of variables (species or environmental variables); - Find key components of variation among samples, sites, species; - Reduce the number of dimensions in multivariate data while limiting substantial loss of information; - Create new variables for use in subsequent analyses. ] .pull-right[ Here, we will learn: 1. **P**rincipal **C**omponent **A**nalysis; 2. **P**rincipal **Co**ordinate **A**nalysis; 3. **N**on-Metric **M**ulti**d**imensional **S**caling; ] --- # Principal Component Analysis The Principal Component Analysis (PCA) is a *linear* dimensionality-reduction technique, *i.e.* it reduces strongly correlated data. In a nutshell, the PCA *linearly* transforms the feature from the original space to a new feature space, containing **principal components** that explain most of the variance in the dataset, i.e. maximize the separation between the data. -- The *principal component space* can be written as: `$$Z_p = ∑_{j=1}^p ϕ_j * X_j$$` where, 1. `\(Z_p\)` is the principal component `\(p\)`; 2. `\(ϕ_j\)` is the loading vector comprising the `\(j\)` loadings for the `\(p\)` principal component, i.e. the coefficients of the linear combination of the original variables from which the principal components are constructed; 3. `\(X_j\)` is the normalized predictors, i.e. with means equal to zero and standard deviations equal to one. ??? That is: the reconstruction for the data can be given by a simple linear complination of the components. Future versions of this should include a figure (such as a heatmap) with the original standardized data on a left side, and on the right side, a heatmap of the loadings times a heatmap of the components (which can be thought as equal to the sum of rank-one matrices) being equal to the heatmap of the reconstructed data. --- # Principal Component Analysis PCA can be computed in at least *four* different ways. For the sake of simplicity, we will focus here on how to obtain principal components from a correlation matrix. We will learn how to do it from "scratch" and then how to use `R` packages to compute the principal components. --- # Principal Component Analysis: step-by-step .pull-left3[ 1. Starting point: a matrix `\(Y\)` of `\(n\)` observations and `\(p\)` normally distributed continuous variables; ] .pull-right3[ In `R`, from scratch! ```r data(varechem) str(varechem) # 'data.frame': 24 obs. of 14 variables: # $ N : num 19.8 13.4 20.2 20.6 23.8 22.8 26.6 24.2 29.8 28.1 ... # $ P : num 42.1 39.1 67.7 60.8 54.5 40.9 36.7 31 73.5 40.5 ... # $ K : num 140 167 207 234 181 ... # $ Ca : num 519 357 973 834 777 ... # $ Mg : num 90 70.7 209.1 127.2 125.8 ... # $ S : num 32.3 35.2 58.1 40.7 39.5 40.8 33.8 27.1 42.5 60.2 ... # $ Al : num 39 88.1 138 15.4 24.2 ... # $ Fe : num 40.9 39 35.4 4.4 3 ... # $ Mn : num 58.1 52.4 32.1 132 50.1 ... # $ Zn : num 4.5 5.4 16.8 10.7 6.6 9.1 7.4 5.2 9.3 9.1 ... # $ Mo : num 0.3 0.3 0.8 0.2 0.3 0.4 0.3 0.3 0.3 0.5 ... # $ Baresoil: num 43.9 23.6 21.2 18.7 46 40.5 23 29.8 17.6 29.9 ... # $ Humdepth: num 2.2 2.2 2 2.9 3 3.8 2.8 2 3 2.2 ... # $ pH : num 2.7 2.8 3 2.8 2.7 2.7 2.8 2.8 2.8 2.8 ... ``` ] --- # Principal Component Analysis: step-by-step .pull-left3[ 1. Starting point: a matrix `\(Y\)` of `\(n\)` observations and `\(p\)` normally distributed continuous variables; ] .pull-right3[ In `R`, from scratch! ```r data(varechem) # Step 1 Y <- varechem[, 1:2] head(Y) # N P # 18 19.8 42.1 # 15 13.4 39.1 # 24 20.2 67.7 # 27 20.6 60.8 # 23 23.8 54.5 # 19 22.8 40.9 ``` ] --- # Principal Component Analysis: step-by-step .pull-left3[ 1. Starting point: a matrix `\(Y\)` of `\(n\)` observations and `\(p\)` normally distributed continuous variables; 2. Standardizing observations, as in `\(Y_{std} = \frac{y_i - \bar{y}}{\sigma_y}\)`; which is the same as centring, as in `\(y_c = [y_i - \bar{y}]\)`, and then scaling, as in `\(y_s = \frac{y_i}{\sigma_y}\)`; ] .pull-right3[ In `R`, from scratch! ```r data(varechem) # Step 1 Y <- varechem[, 1:2] # Step 2 Y_std <- as.matrix(scale(Y)) head(Y_std) # N P # 18 -0.46731082 -0.1993234 # 15 -1.62503567 -0.4000407 # 24 -0.39495301 1.5134640 # 27 -0.32259521 1.0518143 # 23 0.25626722 0.6303080 # 19 0.07537271 -0.2796103 round(apply(Y_std, 2, mean)) # N P # 0 0 round(apply(Y_std, 2, sd)) # N P # 1 1 ``` ] ??? The presenter may recall here or even ask the participants what the standardization is doing. --- # Principal Component Analysis: step-by-step .pull-left3[ 1. Starting point: a matrix `\(Y\)` of `\(n\)` observations and `\(p\)` normally distributed continuous variables; 2. Standardizing observations, as in `\(Y_{std} = \frac{y_i - \bar{y}}{\sigma_y}\)`; which is the same as centring, as in `\(y_c = [y_i - \bar{y}]\)`, and then scaling, as in `\(y_s = \frac{y_i}{\sigma_y}\)`; 3. Compute the variance-covariance matrix `\(R = cov(Y_{std})\)`; ] .pull-right3[ In `R`, from scratch! ```r data(varechem) # Step 1 Y <- varechem[, 1:2] # Step 2 Y_std <- as.matrix(scale(Y)) # Step 3 (Y_R <- cov(Y_std)) # N P # N 1.0000000 -0.2511603 # P -0.2511603 1.0000000 ``` ] --- # Principal Component Analysis: step-by-step .pull-left3[ 1. Starting point: a matrix `\(Y\)` of `\(n\)` observations and `\(p\)` normally distributed continuous variables; 2. Standardizing observations, as in `\(Y_{std} = \frac{y_i - \bar{y}}{\sigma_y}\)`; which is the same as centring, as in `\(y_c = [y_i - \bar{y}]\)`, and then scaling, as in `\(y_s = \frac{y_i}{\sigma_y}\)`; 3. Compute the variance-covariance matrix `\(R = cov(Y_{std})\)`; 4. Perform the Eigendecomposition of the covariance matrix to obtain the matrix `\(U\)` of Eigenvectors, containing the *Principal Components*; ] .pull-right3[ In `R`, from scratch! ```r data(varechem) # Step 1 Y <- varechem[, 1:2] # Step 2 Y_std <- as.matrix(scale(Y)) # Step 3 Y_R <- cov(Y_std) # Step 4 (Eigenvalues <- eigen(Y_R)$values) # [1] 1.2511603 0.7488397 (Eigenvectors <- eigen(Y_R)$vectors) # [,1] [,2] # [1,] -0.7071068 -0.7071068 # [2,] 0.7071068 -0.7071068 ``` ] --- # Principal Component Analysis: step-by-step The *Eigenvectors* here are the **Principal Components**, and as we have seen, each *Eigenvector* has its corresponding *Eigenvalue*. .pull-left[ We can represent the distances from the observations to the first Eigenvector (`PC1`, in red). The first principal component is drawn so that the variation of the values along its line is maximal. The arrows on the principal components are obtained by multiplying their *Eigenvalues* by the *Eigenvectors*. ] .pull-right[ <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-42-1.png" width="360" style="display: block; margin: auto;" /> ] ??? In this first representation, we can observe that a first direction (or the first linear component) is drawn attempting to maximize the variance of the data. Participants may ask you how different this is from a linear regression. One key difference is on the how the error squares are inimized perpendicularly to the straight line (90 degrees; making it orthogonal), while in the linear regression, the error squares are minimized towards the y-direction. --- # Principal Component Analysis: step-by-step The *Eigenvectors* here are the **Principal Components**, and as we have seen, each *Eigenvector* has its corresponding *Eigenvalue*. .pull-left[ We can then represent the distances from the observations to the second Eigenvector (`PC2`, in orange). The second principal component is also drawn maximizing the variance of the data. Note how the principal components are orthogonal! ] .pull-right[ <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-43-1.png" width="360" style="display: block; margin: auto;" /> ] -- .pull-left[ *We represented the Eigenvectors, i.e. the principal components!* *But, what is the use of the Eigenvalues?* ] ??? Here, the a second direction (or the second linear component) is drawn such as that the variance of the data is maximized with respect to this second component. --- # Principal Component Analysis: step-by-step We have seen that the *Eigenvalues* represent the magnitude (the variance) in the principal components. .pull-left[ In fact, the sum of all *Eigenvalues* is equal to the sum of variances, which are represented on the diagonal of the variance-covariance matrix. ] .pull-right[ ```r sum(diag(cov(Y_std))) # [1] 2 sum(eigen(cov(Y_std))$values) # [1] 2 ``` ] -- Intuitively, one can obtain the relative influence of each *Eigenvector* `\(v_{k}\)` (or `\(\text{PC}_{k}\)`) by dividing their values by the sum of all *Eigenvalues*. `$$\text{Explained variance of}~v_{k} = \frac{\lambda_{v_k}}{\sum^p_{i=1}{\lambda_{v}}}$$` By doing this, we can say that the `\(\text{PC}1\)` explains 63% of the variance in the data, while `\(\text{PC}2\)` explains 37% of the variance. -- Finally, we can proceed to the last step of our computation of principal components! --- # Principal Component Analysis: step-by-step .pull-left3[ 1. Starting point: a matrix `\(Y\)` of `\(n\)` observations and `\(p\)` normally distributed continuous variables; 2. Standardizing observations, as in `\(Y_{std} = \frac{y_i - \bar{y}}{\sigma_y}\)`; which is the same as centring, as in `\(y_c = [y_i - \bar{y}]\)`, and then scaling, as in `\(y_s = \frac{y_i}{\sigma_y}\)`; 3. Compute the variance-covariance matrix `\(R = cov(Y_{std})\)`; 4. Perform the Eigendecomposition of the covariance matrix to obtain the matrix `\(U\)` of Eigenvectors, containing the *Principal Components*; 5. Obtain the feature space by multiplying `\(U\)` with the standardized matrix `\(Y_{std}\)`, *i*.*e*. the *score matrix* `\(F\)`. ] .pull-right3[ In `R`, from scratch! ```r # Step 1 Y <- varechem[, 1:2] # Step 2 Y_std <- as.matrix(scale(Y)) # Step 3 Y_R <- cov(Y_std) # Step 4 Eigenvalues <- eigen(Y_R)$values Eigenvectors <- eigen(Y_R)$vectors # Step 5 F_PrComps <- Y_std %*% Eigenvectors head(F_PrComps) # [,1] [,2] # 18 0.1894957 0.4713816 # 15 0.8662023 1.4319452 # 24 1.3494546 -0.7909067 # 27 0.9718543 -0.5156358 # 23 0.2644868 -0.6269034 # 19 -0.2510109 0.1444178 ``` ] --- # Principal Component Analysis: step-by-step The score matrix, `\(F\)`, (object `F_PrComps`) allows one to *rotate* the new data space, so it is represented in relation to the principal components. .pull-left[ .center[ `\(\text{N}\)` ~ `\(\text{P}\)` ] <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-46-1.png" width="252" style="display: block; margin: auto;" /> ] .pull-right[ .center[ `\(\text{PC}1\)` ~ `\(\text{PC}2\)` ] <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-47-1.png" width="252" style="display: block; margin: auto;" /> ] ??? The axis labels are not being printed. I included them at the top of each plot while this is not fixed. The presenter here should emphasize the rotation, and speak about what the scores are. You can hover the points and show them what was the position of the points in the "de-rotated" plots, and now in the "rotated" ones, highlighting that now, the "new axes" are PC1 and PC2. This understand will be useful when the participants are going to use the PCA functions that are implemented in R. --- # Principal Component Analysis: step-by-step PCA can also be computed using the `stats::prcomp()`, `stats::princomp()`, `vegan::rda()`, and `ade4::dudi.pca()` functions. .pull-left[ How our PCA from scratch compares ```r data(varechem) Y <- varechem[, 1:2] Y_std <- as.matrix(scale(Y)) Y_R <- cov(Y_std) Eigenvalues <- eigen(Y_R)$values Eigenvectors <- eigen(Y_R)$vectors F_PrComps <- Y_std %*% Eigenvectors head(F_PrComps) # [,1] [,2] # 18 0.1894957 0.4713816 # 15 0.8662023 1.4319452 # 24 1.3494546 -0.7909067 # 27 0.9718543 -0.5156358 # 23 0.2644868 -0.6269034 # 19 -0.2510109 0.1444178 ``` ] .pull-right[ to `stats::prcomp()`? ```r PCA_prcomp <- prcomp(Y, center = TRUE, scale = TRUE) # or PCA_prcomp <- prcomp(Y_std) head(PCA_prcomp$x) # PC1 PC2 # 18 -0.1894957 -0.4713816 # 15 -0.8662023 -1.4319452 # 24 -1.3494546 0.7909067 # 27 -0.9718543 0.5156358 # 23 -0.2644868 0.6269034 # 19 0.2510109 -0.1444178 ``` ] --- # Principal Component Analysis: step-by-step PCA can also be computed using the `stats::prcomp()`, `stats::princomp()`, `vegan::rda()`, and `ade4::dudi.pca()` functions. .pull-left[ How our PCA from scratch compares ```r data(varechem) Y <- varechem[, 1:2] Y_std <- as.matrix(scale(Y)) Y_R <- cov(Y_std) Eigenvalues <- eigen(Y_R)$values Eigenvectors <- eigen(Y_R)$vectors F_PrComps <- Y_std %*% Eigenvectors head(F_PrComps) # [,1] [,2] # 18 0.1894957 0.4713816 # 15 0.8662023 1.4319452 # 24 1.3494546 -0.7909067 # 27 0.9718543 -0.5156358 # 23 0.2644868 -0.6269034 # 19 -0.2510109 0.1444178 ``` ] .pull-right[ to `stats::princomp()`? ```r PCA_princomp <- princomp(Y_std) head(PCA_princomp$scores) # Comp.1 Comp.2 # 18 -0.1894957 -0.4713816 # 15 -0.8662023 -1.4319452 # 24 -1.3494546 0.7909067 # 27 -0.9718543 0.5156358 # 23 -0.2644868 0.6269034 # 19 0.2510109 -0.1444178 ``` ] --- # Principal Component Analysis: step-by-step PCA can also be computed using the `stats::prcomp()`, `stats::princomp()`, `vegan::rda()`, and `ade4::dudi.pca()` functions. .pull-left[ How our PCA from scratch compares ```r data(varechem) Y <- varechem[, 1:2] Y_std <- as.matrix(scale(Y)) Y_R <- cov(Y_std) Eigenvalues <- eigen(Y_R)$values Eigenvectors <- eigen(Y_R)$vectors F_PrComps <- Y_std %*% Eigenvectors head(F_PrComps) # [,1] [,2] # 18 0.1894957 0.4713816 # 15 0.8662023 1.4319452 # 24 1.3494546 -0.7909067 # 27 0.9718543 -0.5156358 # 23 0.2644868 -0.6269034 # 19 -0.2510109 0.1444178 ``` ] .pull-right[ to `vegan::rda()`? ```r PCA_vegan_rda <- rda(Y_std) scores(PCA_vegan_rda, display = "sites", scaling = 1, choices = seq_len(PCA_vegan_rda$CA$rank), const = sqrt(PCA_vegan_rda$tot.chi * (nrow(PCA_vegan_rda$CA$u) - 1)))[1:5, ] # PC1 PC2 # 18 -0.1894957 -0.4713816 # 15 -0.8662023 -1.4319452 # 24 -1.3494546 0.7909067 # 27 -0.9718543 0.5156358 # 23 -0.2644868 0.6269034 ``` `vegan::rda()` is a bit special. It uses alternative scalings. We will not cover them here, but you can study the `vignette("decision-vegan")`. ] ??? Tell participants that the name `rda` refers to a diferent type of constrained ordination technique, but that if we run `rda()` with just one variable, it will execute a PCA. --- # Principal Component Analysis We have implemented PCA on a two-variables dataset, for simplicity. Let us advance and apply it to our fish species dataset. For this, we will use the `vegan::rda()` function on the *Hellinger-transformed* fish data and summarise the results: ```r spe.h.pca <- rda(spe.hel) # summary(spe.h.pca) ``` --- # Principal Component Analysis .pull-left[ The first lines of `summary.rda()` tell us about the *Total variance* and *Unconstrained variance* in our model. ] .pull-right[ ``` # [1] "Partitioning of variance:" " Inertia Proportion" # [3] "Total 0.5023 1" "Unconstrained 0.5023 1" ``` ] -- .pull-left2[ ``` # [1] "Importance of components:" # [2] " PC1 PC2 PC3 PC4 PC5 PC6 PC7" # [3] "Eigenvalue 0.2491 0.06592 0.04615 0.03723 0.02125 0.01662 0.01477" # [4] "Proportion Explained 0.4959 0.13122 0.09188 0.07412 0.04230 0.03309 0.02940" # [5] "Cumulative Proportion 0.4959 0.62715 0.71903 0.79315 0.83544 0.86853 0.89794" # [6] " PC14 PC15 PC16 PC17 PC18 PC19" # [7] "Eigenvalue 0.002612 0.001505 0.001387 0.001037 0.0007815 0.0004749" # [8] "Proportion Explained 0.005200 0.002996 0.002761 0.002065 0.0015557 0.0009454" # [9] "Cumulative Proportion 0.987229 0.990225 0.992986 0.995051 0.9966069 0.9975523" ``` ] .pull-right2[ This is followed by the *Eigenvalues*, and their contribution to the variance. In fact, if we sum all our *Eigenvalues*, we will obtain the amount of uncostrained variance explained by the analysis! ```r sum(spe.h.pca$CA$eig) # [1] 0.5023429 ``` ] ??? Since we have not constrained our ordination, the proportional unconstrained variance is equal to the total variance. Take a moment to explain the proportion explained, and show that the cummulative proportion will equal to 1 at the 27th PC. --- # Principal Component Analysis The next information is related to the *scaling*, to the *species scores*, and to the *site scores*. ``` # [1] "Eigenvalue 0.0004263 0.0002812 0.0002188 0.0001382 0.0000876" # [2] "Proportion Explained 0.0008487 0.0005598 0.0004356 0.0002752 0.0001744" # [3] "Cumulative Proportion 0.9984010 0.9989608 0.9993965 0.9996717 0.9998460" # [4] " PC25 PC26 PC27" # [5] "Proportion Explained 1.062e-04 2.938e-05 1.835e-05" # [6] "Cumulative Proportion 1.000e+00 1.000e+00 1.000e+00" # [7] "Scaling 2 for species and site scores" # [8] "* Species are scaled proportional to eigenvalues" # [9] "* Sites are unscaled: weighted dispersion equal on all dimensions" # [10] "* General scaling constant of scores: 1.953663 " # [11] "" # [12] "" # [13] "Species scores" # [14] "BCO -0.20055 0.08332 -0.074787 -0.0504875 0.073890 0.0249842" # [15] "PCH -0.14626 0.05268 -0.072012 -0.0432572 0.050318 0.0178776" # [16] "GAR -0.35085 -0.09353 0.198664 0.0178669 0.023796 -0.0971362" # [17] "BBO -0.24167 0.03598 -0.079528 -0.0339049 0.096690 0.0620979" # [18] "ABL -0.42269 -0.22879 0.007158 0.1128353 0.006759 0.1248913" # [19] "ANG -0.20521 0.11557 -0.072060 -0.0159902 0.072030 -0.0003801" # [20] "" # [21] "" # [22] "Site scores (weighted sums of species scores)" ``` ] --- # Principal Component Analysis .pull-left[ *Species* refer to your descriptors (i.e. the columns in your dataset), which here are the fish species. *Scores* refer to the position of every species along the principal components. ] .pull-right[ ``` # [1] "Cumulative Proportion 1.000e+00 1.000e+00 1.000e+00" # [2] "Scaling 2 for species and site scores" # [3] "* Species are scaled proportional to eigenvalues" # [4] "* Sites are unscaled: weighted dispersion equal on all dimensions" # [5] "* General scaling constant of scores: 1.953663 " # [6] "" # [7] "" # [8] "Species scores" ``` ] -- <br> .pull-left2[ ``` # [1] "PCH -0.14626 0.05268 -0.072012 -0.0432572 0.050318 0.0178776" # [2] "GAR -0.35085 -0.09353 0.198664 0.0178669 0.023796 -0.0971362" # [3] "BBO -0.24167 0.03598 -0.079528 -0.0339049 0.096690 0.0620979" # [4] "ABL -0.42269 -0.22879 0.007158 0.1128353 0.006759 0.1248913" # [5] "ANG -0.20521 0.11557 -0.072060 -0.0159902 0.072030 -0.0003801" # [6] "" # [7] "" # [8] "Site scores (weighted sums of species scores)" ``` ] .pull-right2[ *Sites* represent the rows in your dataset, which here are the different sites along the *Doubs* river. ] -- <br> .pull-left[ This information can be obtained with the `score()` function that we used before: ] .pull-right[ ```r scores(spe.h.pca, display = "species" or "sites") ``` ] --- # Principal Component Analysis: condensing data Here, we have 27 principal components. However, we can apply algorithms to select the lowest number of principal components that still account for a large variance in the data. -- #### Kaiser-Guttman criterion .pull-left[ We can select the principal components that capture more variance than the average explanation of all principal components. We do this by: 1. Extracting the *Eigenvalues* associated to the principal components; 2. Subsetting the *Eigenvalues* above the mean *Eigenvalue*: ```r ev <- spe.h.pca$CA$eig # ev[ev > mean(ev)] ``` ] .pull-right[ ```r n <- length(ev) barplot(ev, main = "", col = "grey", las = 2) abline(h = mean(ev), col = "red3", lwd = 2) legend("topright", "Average eigenvalue", lwd = 2, col = "red3" , bty = "n") ``` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-63-1.png" width="720" style="display: block; margin: auto;" /> ] --- # Principal Component Analysis: condensing data Here, we have 27 principal components. However, we can apply algorithms to select the lowest number of principal components that still account for a large variance in the data. #### Broken-stick model .pull-left[ The broken-stick model retains components that explain more variance than would be expected by randomly dividing the variance into `\(p\)` parts. ```r head(bstick(spe.h.pca)) # PC1 PC2 PC3 PC4 PC5 PC6 # 0.07240169 0.05379640 0.04449375 0.03829199 0.03364067 0.02991961 ``` ] .pull-right[ ```r screeplot(spe.h.pca, bstick = TRUE, type = "lines") ``` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-65-1.png" width="324" style="display: block; margin: auto;" /> ] --- # Principal Component Analysis All that is left is to discuss *scaling* and to *visualize* our results. Let us practice and compute a PCA on the standardized environmental variables for the same dataset. ```r env.pca <- rda(env.z) # summary(env.pca, scaling = 2) ``` -- Determine our subset of *Eigenvalues* and their corresponding *Eigenvectors*: .pull-left[ ```r ev <- env.pca$CA$eig ``` ```r ev[ev>mean(ev)] # PC1 PC2 PC3 # 5.968749 2.163818 1.065164 ``` ] -- .pull-right[ <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-69-1.png" width="576" style="display: block; margin: auto;" /> ] --- # Principal Component Analysis: `plot()` The information computed by the PCA can be represented with *biplots*. We can produce a *quick and dirty* biplot of the PCA using the function `plot()` in base `R`. ```r plot(spe.h.pca) ``` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-70-1.png" width="360" style="display: block; margin: auto;" /> --- # Principal Component Analysis: `biplot()` `biplot()` from `base` `R` allows for a better interpretation. .pull-left2[ ```r biplot(spe.h.pca) ``` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-71-1.png" width="468" style="display: block; margin: auto;" /> ] .pull-right2[ The arrows are plotted to show the directionality and angle of the descriptors in the ordination. Descriptors at 180 degrees of each other are negatively correlated; Descriptors at 90 degrees of each other have zero correlation; Descriptors at 0 degrees of each other are positively correlated. ] --- # Principal Component Analysis: *Scaling* .small[ *Type 2 scaling* (`default`): distances among objects are not approximations of Euclidean distances; angles between descriptor (species) vectors reflect their correlations. ] .small[ *Type 1 scaling*: attempts to preserve the Euclidean distance (in multidimensional space) among objects (sites): the angles among descriptor (species) vector are not meaningful. ] .pull-left[ ```r biplot(spe.h.pca, scaling = 1) ``` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-72-1.png" width="324" style="display: block; margin: auto;" /> ] .pull-right[ ```r biplot(spe.h.pca, scaling = 2) ``` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-73-1.png" width="324" style="display: block; margin: auto;" /> ] ??? 2: **Best for interpreting relationships among descriptors (species)!** 1: **Best for interpreting relationships among objects (sites)!** --- # Challenge #2 ![:cube]() Using everything you have learned, compute a PCA on the mite species abundance data ```r data(mite) ``` Be ready to discuss and answer: - What are the *most relevant* principal components, i.e. subset them? - Which groups of sites can you identify? - Which groups of species are related to these groups of sites? --- # Challenge #2: Solution Compute PCA on the Hellinger-transformed species data ```r mite.spe.hel <- decostand(mite, method = "hellinger") mite.spe.h.pca <- rda(mite.spe.hel) ``` -- .pull-left[ Apply the Kaiser-Guttman criterion ```r ev <- mite.spe.h.pca$CA$eig ev[ev>mean(ev)] n <- length(ev) barplot(ev, main = "Eigenvalues", col = "grey", las = 2) abline(h = mean(ev), col = "red3", lwd = 2) legend("topright", "Average eigenvalue", lwd = 2, col = "red3", bty = "n") ``` ] .pull-right[ <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-77-1.png" width="360" style="display: block; margin: auto;" /> ] --- # Challenge #2: Solution ```r biplot(mite.spe.h.pca, col = c("red3", "grey15")) ``` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-78-1.png" width="504" style="display: block; margin: auto;" /> --- # Principal Coordinates Analysis The **PCoA** is similar in spirit to PCA, but it takes *dissimilarities* as input data! It was previously called as *Classical Multidimensional Scaling* (MDS) and it aims at faithfully representing distances with the lowest possible dimensional space. It begins with the (i) computation of a distance matrix for the `\(p\)` elements, then (ii) the centering of the matrix by rows and columns, and finally, the (iii) *Eigendecomposition* of the centered distance matrix. -- To compute a PCoA, we can use the `cmdscale()` or the `pcoa()`functions from the `stats` and `ape` packages: Run a PCoA on the Hellinger distance-transformed fish dataset: ```r library(ape) spe.h.pcoa <- pcoa(dist(spe.hel)) summary(spe.h.pcoa) # Length Class Mode # correction 2 -none- character # note 1 -none- character # values 5 data.frame list # vectors 810 -none- numeric # trace 1 -none- numeric ``` --- # Principal Coordinates Analysis ```r head(spe.h.pcoa$values) # Eigenvalues Relative_eig Broken_stick Cumul_eig Cumul_br_stick # 1 7.2247720 0.49593627 0.14412803 0.4959363 0.1441280 # 2 1.9115366 0.13121526 0.10709099 0.6271515 0.2512190 # 3 1.3384761 0.09187817 0.08857247 0.7190297 0.3397915 # 4 1.0797364 0.07411728 0.07622679 0.7931470 0.4160183 # 5 0.6161946 0.04229798 0.06696753 0.8354450 0.4829858 # 6 0.4820459 0.03308949 0.05956013 0.8685345 0.5425459 ``` --- # Principal Coordinates Analysis We can also see the *Eigenvectors* associated to each *Eigenvalue* containing the coordinates in the Euclidean space for each site. ```r head(spe.h.pcoa$vectors)[, 1:5] # Axis.1 Axis.2 Axis.3 Axis.4 Axis.5 # 1 -0.510156945 0.34756659 0.60308559 -0.3105433 -0.197553521 # 2 -0.697567847 0.04025083 0.10420566 -0.2256121 0.163849922 # 3 -0.639279415 -0.02078733 0.03988954 -0.2636284 0.130319412 # 4 -0.411968300 -0.13472113 -0.14290276 -0.2900746 0.003953021 # 5 0.005052046 -0.09541567 -0.30540211 -0.2852990 -0.319905818 # 6 -0.292971968 -0.11423456 -0.31865809 -0.2359718 -0.055670665 ``` --- # Principal Coordinates Analysis: `biplot.pcoa()` We can display, the distances between sites using the `biplot.pcoa()` function, as well as represent the species associated to each site. ```r biplot.pcoa(spe.h.pcoa, spe.hel) ``` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-82-1.png" width="576" style="display: block; margin: auto;" /> --- ### Principal Coordinates Analysis: non-metric distances PCoA can also be used to capture information contained in non-metric distances, such as the popular Bray-Curtis distance. Let us give it a try: .pull-left[ ```r spe.bray.pcoa <- pcoa(spe.db.pa) ``` ```r spe.bray.pcoa$values$Eigenvalues # [1] 3.695332e+00 1.197352e+00 8.465325e-01 5.253896e-01 4.147227e-01 # [6] 2.977969e-01 1.913094e-01 1.554757e-01 1.307617e-01 1.272105e-01 # [11] 8.550928e-02 4.357746e-02 3.862321e-02 2.727686e-02 1.303318e-02 # [16] 6.704839e-03 3.817779e-03 1.300463e-03 0.000000e+00 -3.597737e-05 # [21] -4.167639e-03 -8.970687e-03 -1.473688e-02 -1.601025e-02 -2.165231e-02 # [26] -3.127263e-02 -3.443356e-02 -3.763160e-02 -6.064415e-02 -6.882758e-02 ``` ] .pull-right[ Note the negative eigenvalues! This is because non-metric distances cannot be represented in Euclidean space without corrections (*see* Legendre & Legendre 2012 for more details on this): ```r spe.bray.pcoa <- pcoa(spe.db.pa, correction = "cailliez") ``` ] --- ### Principal Coordinates Analysis: non-metric distances .pull-left[ The corrected Eigenvalues are now on a new column! ```r spe.bray.pcoa$values$Corr_eig # [1] 5.208616362 1.735210656 1.249426268 0.813115534 0.690430331 0.513559025 # [7] 0.387520962 0.363702867 0.296206500 0.273082611 0.215178142 0.154525233 # [13] 0.150803988 0.118203435 0.088726577 0.073233820 0.069460754 0.057344134 # [19] 0.056008651 0.054525339 0.049309077 0.040482831 0.037906147 0.034709860 # [25] 0.029748166 0.023624321 0.019072278 0.003071851 0.000000000 0.000000000 ``` ] .pull-right[ Use a biplot without the species to represent it! ```r biplot.pcoa(spe.bray.pcoa) ``` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-87-1.png" width="432" style="display: block; margin: auto;" /> ] --- # Challenge #3 ![:cube]() Compute a PCoA on the Hellinger-transformed mite species abundance data Be ready to answer: - What are the significant *Eigenvectors* and *Eigenvalues*? - Which groups of sites can you identify? - Which groups of species are related to these groups of sites - How do the PCoA results compare with the PCA results? --- # Challenge #3: Solution - Hellinger transform the species data ```r mite.spe <- mite mite.spe.hel <- decostand(mite.spe, method = "hellinger") ``` - Compute PCoA ```r mite.spe.h.pcoa <- pcoa(dist(mite.spe.hel)) ``` --- # Challenge #3: Solution - Build a biplot to visualize the results: ```r biplot.pcoa(mite.spe.h.pcoa, mite.spe.hel) ``` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-90-1.png" width="432" style="display: block; margin: auto;" /> --- # Non-metric Multidimensional Scaling - In PCA and PCoA, objects are ordinated in a few number of dimensions (generally > 2); - 2D-biplots may not represent all the variation within the dataset; - Sometimes, we aim at representing the data in a specified smaller number of dimensions; - How can we plot the ordination space to represent the most variation as possible in the data? -- We can attempt using *non-metric multidimensional scaling*! * NMDS is the non-metric counterpart of PCoA; * It uses an iterative optimization algorithm to find the best representation of distances in reduced space; --- # Non-metric Multidimensional Scaling - NMDS applies an iterative procedure that tries to position the objects in the requested number of dimensions in such a way as to minimize a stress function (scaled from 0 to 1), which measures the goodness-of-fit of the distance adjustment in the reduced-space configuration. - Consequently, the lower the stress value, the better the representation of objects in the ordination-space is. - nMDS is implemented in `vegan` as `metaMDS()` where: - `distance` specifies the distance metric to use; - `k` specifies the number of dimensions. ```r # Dune meadow vegetation data, dune, has cover class values of 30 species on 20 # sites. data(dune) spe.nmds <- metaMDS(dune, distance = 'bray', k = 2) ``` --- #### Non-metric Multidimensional Scaling: *goodness-of-fit* The *Shepard* diagram and stress values can be obtained from `stressplot()`: ```r spe.nmds$stress # [1] 0.1183186 stressplot(spe.nmds, main = "Shepard plot") ``` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-93-1.png" width="360" style="display: block; margin: auto;" /> The Shepard plot identifies a strong correlation between observed dissimilarity and ordination distance (R2 > 0.95) highlighting a high goodness of fit of the NMDS. --- # Non-metric Multidimensional Scaling: `biplot()` Construct the biplot ```r plot(spe.nmds, type = "none", main = paste("NMDS/Bray - Stress =", round(spe.nmds$stress, 3)), xlab = c("NMDS1"), ylab = "NMDS2") points(scores(spe.nmds, display = "sites", choices = c(1,2)), pch = 21, col = "black", bg = "steelblue", cex = 1.2) text(scores(spe.nmds, display = "species", choices = c(1)), scores(spe.nmds, display = "species", choices = c(2)), labels = rownames(scores(spe.nmds, display = "species")), col = "red", cex = 0.8) ``` --- # Non-metric Multidimensional Scaling: `biplot()` .pull-left[ The biplot of the NMDS shows a group of closed sites characterized by the species BLA, TRU, VAI, LOC, CHA and OMB, while the other species form a cluster of sites in the upper right part of the graph. Four sites in the lower part of the graph are strongly different from the others. ] .pull-right[ <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-95-1.png" width="360" style="display: block; margin: auto;" /> ] --- # Challenge #4 ![:cube]() <br> Run the NMDS of the `mite.spe` species abundances in 2 dimensions based on a Bray-Curtis distance. Assess the goodness-of-fit of the ordination and interpret the biplot. Recall these helpful functions: ```r ?metaMDS ?stressplot ``` --- # Challenge #4: Solution Run the NMDS of the `mite` species abundances in 2 dimensions based on a Bray-Curtis distance: ```r mite.nmds <- metaMDS(mite.spe, distance = 'bray', k = 2) ``` --- # Challenge #4: Solution ```r plot(mite.nmds, type = "none", main = paste("NMDS/Bray - Stress =", round(mite.nmds$stress, 3)), xlab = c("NMDS1"), ylab = "NMDS2") points(scores(mite.nmds, display = "sites", choices = c(1,2)), pch = 21, col = "black", bg = "steelblue", cex = 1.2) text(scores(mite.nmds, display = "species", choices = c(1)), scores(mite.nmds, display = "species", choices = c(2)), labels = rownames(scores(mite.nmds, display = "species")), col = "red", cex = 0.8) ``` --- # Challenge #4: Solution <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-99-1.png" width="504" style="display: block; margin: auto;" /> There is not really any obvious clustering of sites in the NMDS biplot. This tells us that the species occurred in most of the sites. Only a few sites shelter specific communities. --- # Challenge #4: Solution ```r stressplot(mite.nmds, main = "Shepard plot") ``` <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-100-1.png" width="360" style="display: block; margin: auto;" /> The observed dissimilarity is highly correlated with ordination distance, and the NMDS stress value is relatively low, which tells us that the NMDS ordination is relatively accurate. --- # Conclusion .alert[Many ordination techniques exist, but their specificity should guide your choices on which methods to use] | | Distance preserved | Variables | Maximum number of axis | |---|---------|--------------|------| |PCA| Euclidean | Quantitative data, linear relationships | p | |CA| Chi2 | Non-negative, quantitative homogeneous data, binary data | p-1 | |PCoA| User defined | Quantitative, semi-quantitative, mixed data| p-1| |NMDS| User defined | Quantitative, semi-quantitative, mixed data| User defined| --- # Quiz time! .alert[What does PCA stand for?] -- Principal Component Analysis -- .alert[Which one is the best way to visualize the *distances* between the community composition of many sites?] -- Principal Coordinate Analysis (PCoA) -- .alert[What does an eigenvalue represent in PCA?] -- The proportion of variance explained by a principal component --- # Quiz time! What is wrong with this plot?  -- .alert[ - The data is not centred. Yikes! ] -- .alert[ - The first two principal components explain 100% of the variation! ] --- class: inverse, center, bottom # Thank you for attending this workshop!  --- exclude: true ### Principal Component Analysis: *Improved visualization* We can build more detailed and aesthetic plots: ```r plot(spe.h.pca, scaling = 1, type = "none", xlab = c("PC1 (%)", round(spe.h.pca$CA$eig[1]/sum(spe.h.pca$CAeig)*100,2)), ylab = c("PC2 (%)", round(spe.h.pca$CA$eig[2]/sum(spe.h.pca$CA$eig)*100,2))) points(scores(spe.h.pca, display = "sites", choices = c(1,2), scaling = 1), pch=21, col = "black", bg = "steelblue" , cex = 1.2) text(scores(spe.h.pca, display = "species", choices = 1, scaling = 1), scores(spe.h.pca, display = "species", choices = 2, scaling = 1), labels = rownames(scores(spe.h.pca, display = "species", scaling = 1)), col = "red", cex = 0.8) spe.cs <- scores(spe.h.pca, choices = 1:2, scaling = 1 , display = "sp") arrows(0, 0, spe.cs[,1], spe.cs[,2], length = 0) ``` --- exclude: true ### Principal Component Analysis: *Improved visualization* <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-102-1.png" width="504" style="display: block; margin: auto;" /> --- exclude: true # Correspondence Analysis (CA) ## Euclidean vs Chi<sup>2</sup> distances - PCA preserves **euclidean distances** between objects, and thus postulates **linear relationships** between species, and between species and environmental gradients. - ... but in **some cases, species instead present unimodal responses** to environmental gradients --- exclude: true # Principles of CA - In such cases, CA should be preferred compared to PCA as it preserves **Chi2 distances between sites**... and thus better represents uni modal relationships --- exclude: true # How to run a CA? - CA is implemented in the `vegan` package using the function `cca()`: ```r spe.ca <- cca(spe[-8,]) # only take columns which rowsums are > than 0. ``` - CA on fish species abundances --- exclude: true # CA: R output - CA results are presented in the same way as PCA results and can be called using: ```r summary(spe.ca) # # Call: # cca(X = spe[-8, ]) # # Partitioning of scaled Chi-square: # Inertia Proportion # Total 1.167 1 # Unconstrained 1.167 1 # # Eigenvalues, and their contribution to the scaled Chi-square # # Importance of components: # CA1 CA2 CA3 CA4 CA5 CA6 CA7 # Eigenvalue 0.601 0.1444 0.10729 0.08337 0.05158 0.04185 0.03389 # Proportion Explained 0.515 0.1237 0.09195 0.07145 0.04420 0.03586 0.02904 # Cumulative Proportion 0.515 0.6387 0.73069 0.80214 0.84634 0.88220 0.91124 # CA8 CA9 CA10 CA11 CA12 CA13 # Eigenvalue 0.02883 0.01684 0.010826 0.010142 0.007886 0.006123 # Proportion Explained 0.02470 0.01443 0.009278 0.008691 0.006758 0.005247 # Cumulative Proportion 0.93594 0.95038 0.959655 0.968346 0.975104 0.980351 # CA14 CA15 CA16 CA17 CA18 CA19 # Eigenvalue 0.004867 0.004606 0.003844 0.003067 0.001823 0.001642 # Proportion Explained 0.004171 0.003948 0.003294 0.002629 0.001562 0.001407 # Cumulative Proportion 0.984522 0.988470 0.991764 0.994393 0.995955 0.997362 # CA20 CA21 CA22 CA23 CA24 # Eigenvalue 0.001295 0.0008775 0.0004217 0.0002149 0.0001528 # Proportion Explained 0.001110 0.0007520 0.0003614 0.0001841 0.0001309 # Cumulative Proportion 0.998472 0.9992238 0.9995852 0.9997693 0.9999002 # CA25 CA26 # Eigenvalue 8.949e-05 2.695e-05 # Proportion Explained 7.669e-05 2.310e-05 # Cumulative Proportion 1.000e+00 1.000e+00 # # Scaling 2 for species and site scores # * Species are scaled proportional to eigenvalues # * Sites are unscaled: weighted dispersion equal on all dimensions # # # Species scores # # CA1 CA2 CA3 CA4 CA5 CA6 # CHA 1.50075 -1.410293 0.26049 -0.307203 0.271777 -0.003465 # TRU 1.66167 0.444129 0.57571 0.166073 -0.261870 -0.326590 # VAI 1.28545 0.285328 -0.04768 0.018126 0.043847 0.200732 # LOC 0.98662 0.360900 -0.35265 -0.009021 -0.012231 0.253429 # OMB 1.55554 -1.389752 0.80505 -0.468471 0.471301 0.225409 # BLA 0.99709 -1.479902 -0.48035 0.079397 -0.105715 -0.332445 # HOT -0.54916 -0.051534 0.01123 -0.096004 -0.382763 0.134807 # TOX -0.18478 -0.437710 -0.57438 0.424267 -0.587150 0.091866 # VAN 0.01337 -0.095342 -0.57672 0.212017 0.126668 -0.389103 # CHE 0.01078 0.140577 -0.34811 -0.538268 0.185286 0.167087 # BAR -0.33363 -0.300682 -0.04929 0.170961 -0.157203 0.103068 # SPI -0.38357 -0.255310 -0.20136 0.374057 -0.385866 0.239001 # GOU -0.32152 -0.034382 -0.07423 -0.031236 0.014417 -0.156351 # BRO -0.26165 0.187282 0.00617 0.183771 0.295142 -0.262808 # PER -0.28913 0.121044 -0.18919 0.367615 0.218087 -0.163675 # BOU -0.60298 -0.057369 0.20341 0.214299 -0.050977 0.211926 # PSO -0.58669 -0.082467 0.21198 0.050175 -0.120456 0.108724 # ROT -0.61815 0.124733 0.13339 0.147190 0.317736 -0.340380 # CAR -0.57951 -0.110732 0.20173 0.308547 0.006854 0.153224 # TAN -0.37880 0.138023 -0.07825 0.095793 0.256285 -0.029245 # BCO -0.70235 0.011155 0.40242 0.211582 0.138186 0.132297 # PCH -0.73238 -0.009098 0.55678 0.321852 0.281812 0.172271 # GRE -0.69300 0.038971 0.37688 -0.183965 -0.051945 -0.011126 # GAR -0.44181 0.176915 -0.23691 -0.345104 0.129676 -0.043802 # BBO -0.70928 0.032317 0.40924 0.030224 0.049050 0.114560 # ABL -0.63114 0.053594 0.15204 -0.661381 -0.414796 -0.206611 # ANG -0.63578 -0.041894 0.30093 0.224044 0.030444 0.203160 # # # Site scores (weighted averages of species scores) # # CA1 CA2 CA3 CA4 CA5 CA6 # 1 2.76488 3.076306 5.3657489 1.99192 -5.07714 -7.80447 # 2 2.27540 2.565531 1.2659130 0.87538 -1.89139 -0.13887 # 3 2.01823 2.441224 0.5144079 0.79436 -1.03741 0.56015 # 4 1.28485 1.935664 -0.2509482 0.76470 0.54752 0.10579 # 5 0.08875 1.015182 -1.4555434 0.47672 2.69249 -2.92498 # 6 1.03188 1.712163 -0.9544059 0.01584 0.91932 0.39856 # 7 1.91427 2.256208 -0.0001407 0.39844 -1.07017 0.32127 # 9 0.25591 1.443008 -2.5777721 -3.41400 2.36613 2.71741 # 10 1.24517 1.526391 -1.9635663 -0.41230 0.69647 1.51859 # 11 2.14501 0.110278 1.6108693 -0.82023 0.53918 1.01153 # 12 2.17418 -0.251649 1.5845397 -0.81483 0.52623 1.05501 # 13 2.30944 -2.034439 1.9181448 -0.60481 0.64435 -0.14844 # 14 1.87141 -2.262503 1.1066796 -0.80840 1.09542 0.11038 # 15 1.34659 -1.805967 -0.6441505 -0.52803 0.76871 -0.67165 # 16 0.70214 -1.501167 -1.9735888 0.98502 -0.93585 -1.27168 # 17 0.28775 -0.836803 -1.2259108 0.73302 -1.57036 0.57315 # 18 0.05299 -0.647950 -0.9234228 0.35770 -0.95401 0.77738 # 19 -0.20584 -0.007252 -1.0154343 0.07041 -1.03450 0.51442 # 20 -0.57879 0.042849 -0.3660551 -0.15019 -0.61357 0.10115 # 21 -0.67320 0.038875 0.1194956 0.17256 -0.14686 -0.12018 # 22 -0.71933 0.014694 0.2204186 0.13598 0.09459 -0.02068 # 23 -0.70438 0.735398 -0.6546250 -6.61523 -2.49441 -1.73215 # 24 -0.83976 0.390120 0.5605295 -4.38864 -2.56916 -0.96702 # 25 -0.68476 0.418842 -0.2860819 -2.80336 -0.37540 -3.93791 # 26 -0.75808 0.210204 0.5894091 -0.70004 -0.01880 -0.10779 # 27 -0.75046 0.100869 0.5531191 -0.12946 0.29164 0.11280 # 28 -0.77878 0.088976 0.7379012 0.05204 0.40940 0.43236 # 29 -0.60815 -0.203235 0.5522726 0.43621 0.15010 0.25618 # 30 -0.80860 -0.019592 0.6686542 0.88136 0.52744 0.16456 ``` --- exclude: true # CA: Interpretation of results .pull-left2[  ] .pull-right2[ 26 CA axes identified % CA1 = 51.50% % CA2 = 12.37% ] --- exclude: true # CA: biplots .center[ ] .small[ The group of sites on the left is characterized by the species *GAR*, *TAN*, *PER*, *ROT*, *PSO*, and *CAR* The group of sites in the upper right corner is characterized by the species *LOC*, *VAI* and *TRU* The group of sites in the lower right corner is characterized by the species *BLA*, *CHA*, and *OMB* ] --- exclude: true # Challenge #4 ![:cube]() Using everything you have learned to execute a CA on the mite species abundance data: ```r mite.spe <- mite ``` - What are the significant axes? - Which groups of sites can you identify? - Which groups of species are related to these groups of sites? --- exclude: true # Solution #4 - Compute CA: ```r mite.spe.ca <- cca(mite.spe) ``` - Check significant axes using the Guttman-Kaiser criterion ```r ev <- mite.spe.ca$CA$eig ev[ev > mean(ev)] n <- length(ev) barplot(ev, main = "Eigenvalues", col = "grey", las = 2) abline(h = mean(ev), col = "red3", lwd = 2) legend("topright", "Average eigenvalue", lwd = 2, col = red3, bty = "n") ``` --- exclude: true # Solution #4 <img src="workshop09-pres-en_files/figure-html/unnamed-chunk-108-1.png" width="720" style="display: block; margin: auto;" />