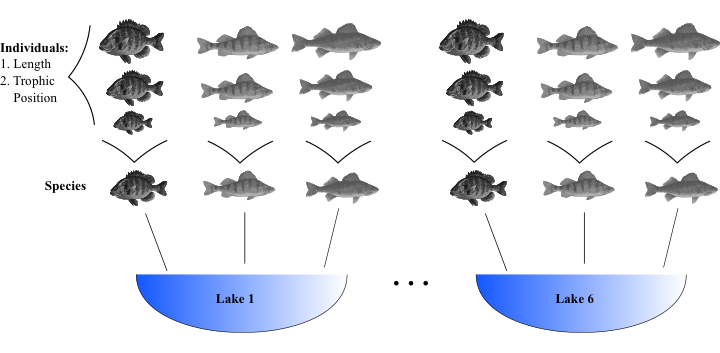

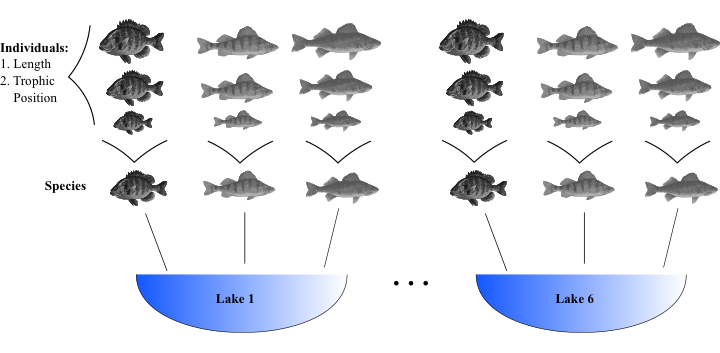

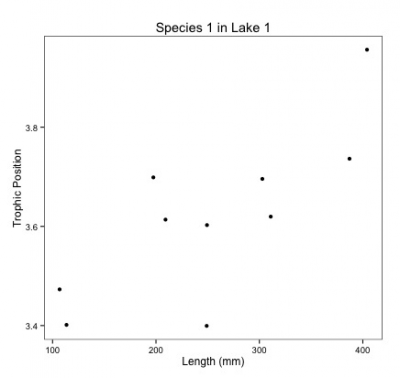

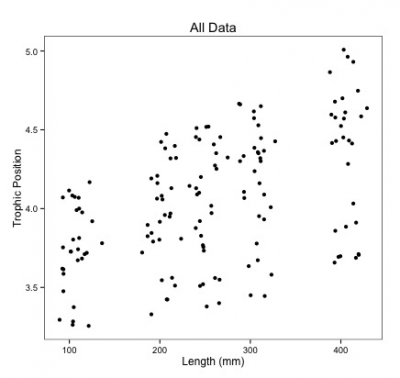

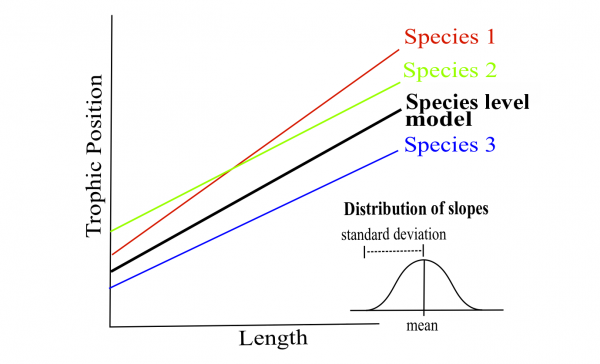

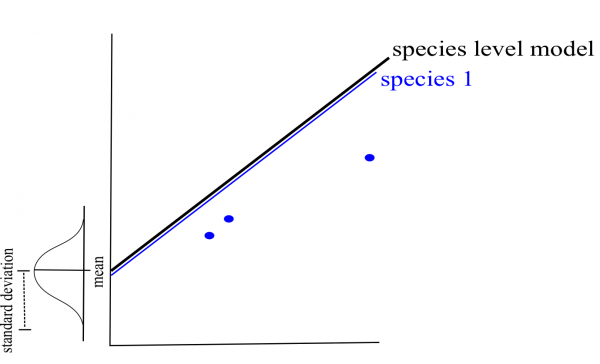

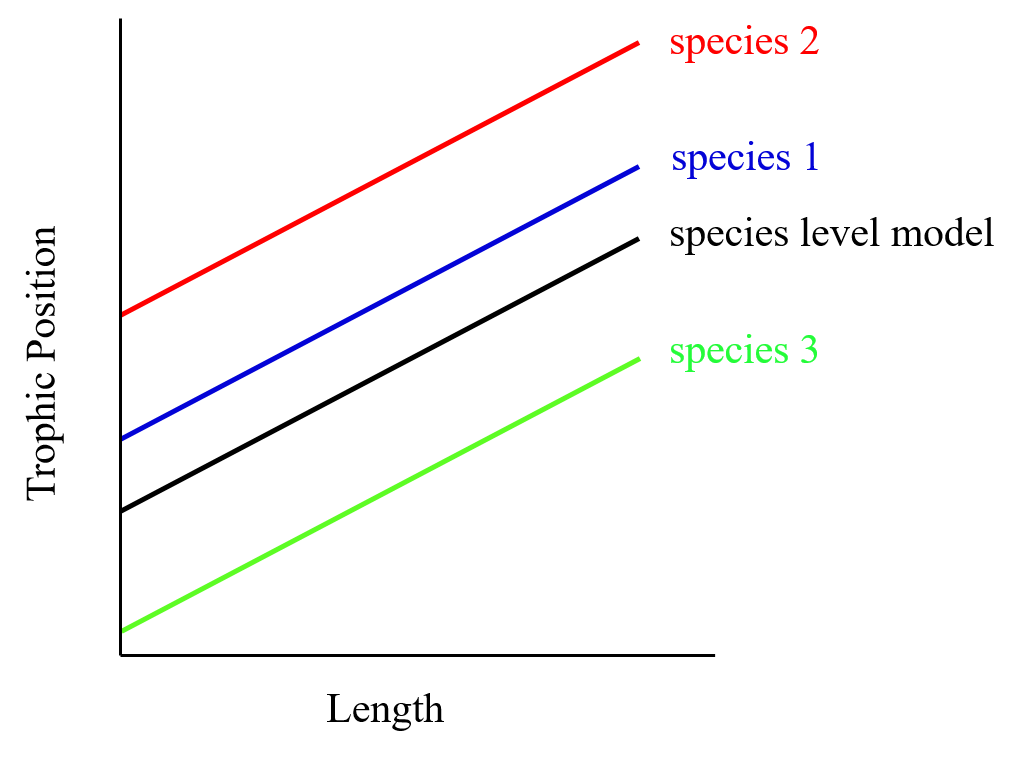

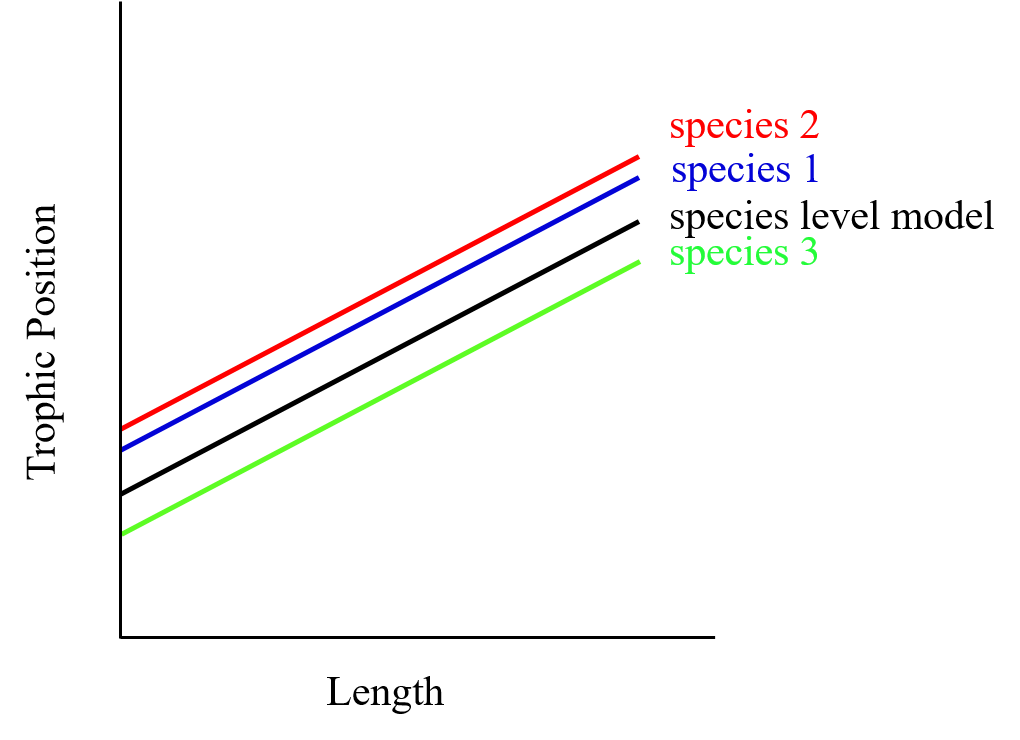

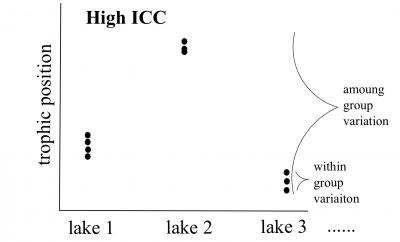

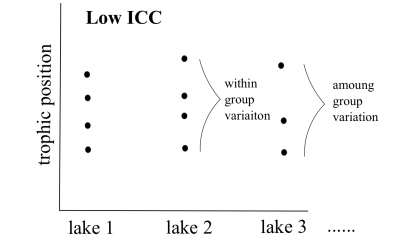

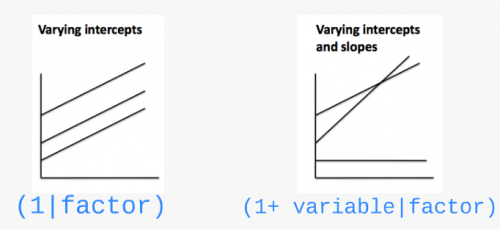

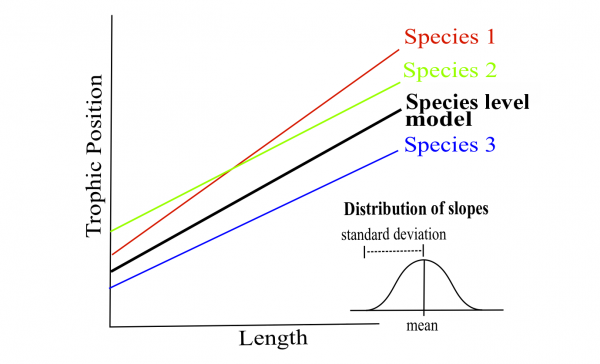

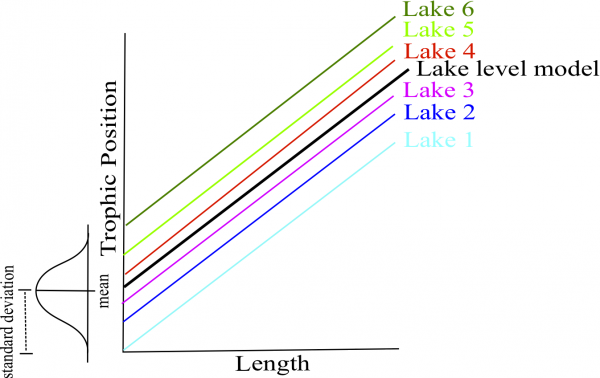

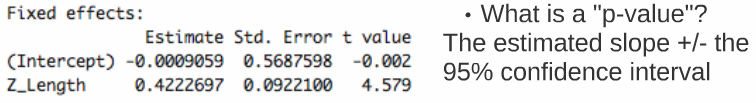

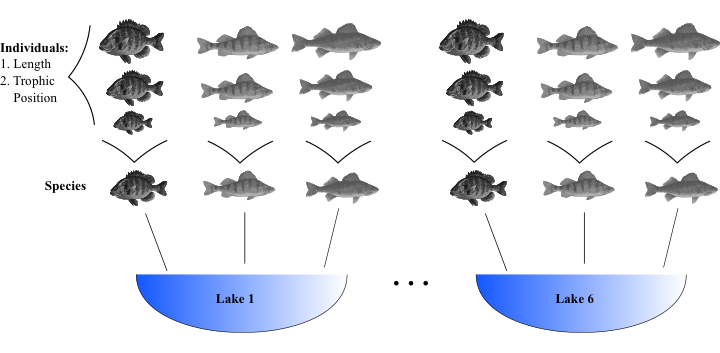

class: center, middle, inverse, title-slide .title[ # Workshop 7: Linear and generalized linear mixed models (LMM and GLMM) ] .subtitle[ ## QCBS R Workshop Series ] .author[ ### Quebec Centre for Biodiversity Science ] --- class: inverse, center, middle # About this workshop [](https://r.qcbs.ca/workshop07/pres-en/workshop07-pres-en.html) [](https://r.qcbs.ca/workshop07/book-en/index.html) [](https://r.qcbs.ca/workshop07/book-en/workshop07-script-en.R) [](https://github.com/QCBSRworkshops/workshop07) ??? Note: Communicate to the participants that all the materials for this workshop is available on the R workshops website, and that there is an additional 'bookdown' with additional text. There is a lot of content for this workshop, so participants will be able to review the concepts they understood a bit less after on their own after the workshop. --- <p style="font-size:75%"> .center[ **Contributors to the development of this workshop** by modifying and improving its content as part of the <br> *Le*arning *a*nd *D*evelopment *A*ward ] .pull-left[ .right[ **2022** - **2021** - **2020** [Maxime Fraser Franco]() <br> [Hassen Allegue]() <br> [Linley Sherin]() <br> [Pedro Henrique P. Braga]() <br> [Katherine Hébert]() <br> [Kevin Cazelles]() <br> [Janaína Serrano]() <br> [Dominique Caron]() ] ] .pull-right[ .left[ **2019** - **2018** - **2017** [Nicolas Princeloup]() <br> [Marie Hélène Brice]() **2016** - **2015** - **2014** [Catherine Baltazar]() <br> [Dalal Hanna]() <br> [Jacob Ziegler]() <br> [Cédric Frenette Dussault]() <br> [Vincent Fugère]() <br> [Thomas Lamy]() <br> [Zofia Taranu]() ] ] </p> --- # Required material To follow this workshop, you are required to have downloaded and installed the latest [RStudio](https://rstudio.com/products/rstudio/download/#download) and [R](https://cran.rstudio.com/) versions. .pull-left[ You must also use these packages: * [lme4](http://cran.r-project.org/web/packages/lme4/index.html) * [MASS](http://cran.r-project.org/web/packages/MASS/index.html) * [vcdExtra](http://cran.r-project.org/web/packages/vcdExtra/index.html) * [bbmle](http://cran.r-project.org/web/packages/bbmle/index.html) * [MuMIn](http://cran.r-project.org/web/packages/MuMIn/index.html) * [ggplot2](http://cran.r-project.org/web/packages/ggplot2/index.html) * [DescTools](http://cran.r-project.org/web/packages/DescTools/index.html) * [remotes](http://cran.r-project.org/web/packages/remotes/index.html) * [gridExtra](http://cran.r-project.org/web/packages/gridExtra/index.html) * [lattice](http://cran.r-project.org/web/packages/lattice/index.html) ] .pull-right[ To install them from CRAN, run: ```r install.packages(c("lme4", "MASS", "vcdExtra", "bbmle", "MuMIn", "ggplot2", "DescTools", "remotes", "gridExtra", "lattice")) ``` ] ??? Note: To save time: (1) Ask the participants to install the packages before the workshop, (2) Don't wait that everyone managed to install every packages and help the students that did not manage to install some packages during challenge #1. --- # Required material .pull-left2[ We will also use the following data sets: - <a href="https://r.qcbs.ca/workshop07/pres-en/data/fishdata.csv">fishdata.csv</a> - <a href="https://r.qcbs.ca/workshop07/pres-en/data/arabidopsis.csv">arabidopsis.csv</a> - <a href="https://r.qcbs.ca/workshop07/pres-en/data/inverts.csv">inverts.csv</a> ] <br> .pull-left2[ Throughout this workshop, there will be a series of **challenges** that you can recognize by this rubix cube. ] .pull-right2[ .center[  ] ] .center[ **During those challenges, do not hesitate to collaborate!** ] --- # Learning objectives **1.** Describe what are (generalized) mixed effects models **2.** Identify situations in which the use of mixed effects is appropriate **3.** Implement basic linear mixed models (LMM) with `R` **4.** Execute basic generalized linear mixed models (GLMM) with `R` **5.** Validate, interpret and visualize mixed models with `R` --- # Research question .alert[**Does fish trophic position increase with fish size?** ] To answer this question, we use a dataset where body length was measured in 3 different fish species with 10 individuals sampled per species across 6 different lakes. .center[  ] --- exclude: true # 1. Why choose mixed models? Introduction to the example dataset .center[  ] .comment[**Q: Does fish trophic position increase with fish size for all three fish species?** ] --- # Challenge 1 ![:cube]() **Get acquainted with the dataset** **1.** Open the workshop script in `R` **2.** Open the example dataset in `R` **3.** Reproduce the plots 1 to 3 (from the script) of the relationship between trophic position and size. Observe the plots and try to get a sense of what you see. ??? Note: Should not be super long, they have the script to make the plots. You can almost just give them time to think while you help participants with installing packages. If there is none, you can just skip the time to think, and simply have a group discussion plot by plot. --- # Solution ![:cube]() <br> <img src="workshop07-pres-en_files/figure-html/unnamed-chunk-2-1.png" width="432" style="display: block; margin: auto;" /> --- # Solution ![:cube]() <br> <img src="workshop07-pres-en_files/figure-html/unnamed-chunk-3-1.png" width="432" style="display: block; margin: auto;" /> --- # Solution ![:cube]() <br> <img src="workshop07-pres-en_files/figure-html/unnamed-chunk-4-1.png" width="432" style="display: block; margin: auto;" /> --- # Group discussion ![:cube]() **Do we expect an increase in trophic position with length in the exact same way for : ** * all species? * all lakes? <br> -- **How might these relationships differ?** --- # Why choose mixed models? ### Ecological and biological data can be complex! * Hierarchical structure in the data * Many covariates and grouping factors * Unbalanced study/experimental design ??? Note: Use the workshop data as example. We have data on fish body length and trophic level that are structured through 3 species and 6 lakes. This creates a hierarchical structure in the data. --- exclude: true # 1. Why choose mixed models? Ecological and biological data can be complex! * Inherent structure to data * Many covariates and grouping factors * Small sample size ??? Note: _MFF: Not sure that mixed models help when the sample size is small. Also, I think that saying the data has a hierarchical structure makes more sense in the context of mixed models_ --- # Why choose mixed models? ### How could we analyze this data? -- <br> **Option 1. Separate** - Run separate analyses for each species in each lake <br> **Option 2. Lump** - Run one analysis ignoring lake and species --- # Why choose mixed models? .pull-left[ ] .pull-right[ **Option 1. Separate** We could run separate analyses for each species : * Estimate 6 intercepts and 6 slopes for each species (i.e. 6 lakes) * Sample size *n* = 10 for each analysis (i.e. 10 fish/species/lake) * Low chance of detecting an effect due to small *n* ] --- # Why choose mixed models? .pull-left[] .pull-right[ **Option 2. Lump** * Big sample size! * What about pseudoreplication? (fish within a lake and within a species might be correlated) * A lot of noise in the data! Some of it might be due to **differences** among **species** and **lakes**. ] --- # Why choose mixed models? For **our question**, we only want to know if there is a **general effect of body length on the trophic position**. <br> <br> However : * **this relationship might differ** slightly among **species** due to unmeasured biological processes (e.g. growth rate) and/or among **lakes** due to unmeasured environmental variables (e.g. differences in food availability). * We need to **control** those potential effects in the model. -- <br> LMMs enable us to **lump and separate the analysis**. They : 1. Estimate slope and intercept parameters for each species and lake (**separating**) while estimating fewer parameters than classical regression. 2. Use all the data available (**lumping**) while accounting for pseudoreplication by controlling differences among lakes and species. --- # Why choose mixed models? ### Fixed vs Random Effects We frequently encounter the terms 'fixed' and 'random' effects in the LMM literature. There are many possible ways to introduce them and we chose to present those we think are easier to apply when doing your analyses. --- # Why choose mixed models? ### Fixed effects : deterministic processes Data comes from : * all possible levels of a factor (**qualitative variable**) * a predictor (**quantitative variable**) <br> We wish to make conclusions about the levels of the factor or about the relationship between the response variable and the predictor. <br> *Example: We are interested in the effect of a treatment (e.g. clear cut) on a response variable (e.g. species richness). The variable treatment is the factor with two levels: clear cut or not.* ??? Note: Emphasize that we gathered data for all levels of the factor that are of interest. We are not interested in other possible levels. --- # Why choose mixed models? ### Random effects : stochastic processes * Only **qualitative variables** = random factor * Data includes only a random sample of all possible factor levels, all of interest * Enable us to structure the error process <br> *Example: We sampled fishes in 6 out of 15 lakes of a region. The variable lake is the factor with 15 levels. We sampled 6 of them, but we aim to make inference about the variability across all the lakes of the region.* ??? Note: Emphasize that we gathered data for a random sample of all levels of the factor. We are interested in all possible levels. --- # Why choose mixed models? ### How do LMMs work? <br> **A.** Intercepts and/or slopes are allowed to vary according to a given factor (**random effect**) (e.g. by lake and/or species). <br> **B.** Intercepts, slopes and their confidence intervals are adjusted to **take the data structure into account**. --- # Random effects : enabling differences in intercepts and/or slopes among grouping levels .pull-left[] .pull-right[] <br> * It is assumed that the intercepts/slopes come from a normal distribution. * The model estimates a general intercept and/or slope with a standard deviation for each random effect (i.e. the normal distribution). <br> This avoids estimating an intercept and a slope for each species (+2 parameters per species) = **saves degrees of freedom** (less parameter estimation is needed). ??? Note: This is pretty important, you can spend some time explaining that instead of having independent slopes and/or intercepts for each levels, we assume that they come from a normal distribution. Make sure that they understand. --- # Random intercept <br> <img style="float: right; width:50%;margin: 1%" src="images/fig_7_w5.png"> It is assumed that the intercepts come from a normal distribution. Only need to estimate the mean (general intercept) and standard deviation of the normal distribution (random effect) instead of 3 intercepts, i.e. one for each species (which would add 2 parameters). <br><br> .comment[Note that the more levels your factor has, the more accurately the mean and standard deviation of the normal distribution will be estimated. Three levels may be a little low, but it is certainly easier to visualize!] --- # Random intercept <br><br> <img style="float: right; width:50%;margin: 1%" src="images/fig_8_w5.png"> Same principle for lakes. Estimate 2 parameters (mean and standard deviation) instead of 6 intercepts. This saves degrees of freedom (less parameter estimation is needed). --- # Random slope <br><br> <img style="float: right; width:50%;margin: 1%" src="images/fig_9_w5.png"> The same principle applies to slopes that vary according to a given factor, just more difficult to visualize. As for intercepts, only the mean and standard deviation of the slopes are estimated instead of three distinct slopes. --- # Taking the data structure into account <br> **What happens if the sample size for a specific factor level is small? (e.g. low `\(n\)` for a specific species)** If a certain species or lake is poorly represented in the data, the model will give more weight to the pooled model to estimate the intercept and/or slope of that species or lake (i.e. shrinkage). Ideally, you should have a minimum of `\(n\)`=3 for any specific factor level. .center[ ] --- # Taking the data structure into account <br> **How to assess the impact of a random effect on the model?** * The confidence intervals for the **general** intercepts and slopes are adjusted to account for pseudo-replication based on the **intraclass correlation coefficient (ICC)**. * The ICC describes the proportion of variance in the response variable that is attributed to a specific random effect : `$$ICC = \frac{\sigma_{\alpha}^2}{\sigma_{\alpha}^2 + \sigma_{\varepsilon}^2}$$` The ICC is calculated as the **ratio** of variance between the random effect (ex. ordonnées à l'origine des espèces) and the total variance. Thus, the ICC informs us of the **extent** to which the average trophic position (i.e. intercepts) **varies** among species or lakes. ??? Note: Tell the audience that mathematical notations may vary according to the article/book and according to how the model equation was written. --- # Taking the data structure into account .pull-left[ **High ICC**  The % of variance (ICC) is high because **species differ strongly** in their average trophic position. The confidence intervals for the general intercept and slope are high. ] .pull-right[ **Low ICC**  The % of variance (ICC) is low because **species differ poorly** in their average trophic position. The confidence intervals for the general intercept and slope are small. ] --- exclude: true # Taking the data structure into account .pull-left[ **High ICC**  the points coming from the same lake are treated as a single observation because they are very correlated  small effective sample size and large confidence intervals for slope and intercept. ] .pull-right[ **Low ICC**  the points coming from the same lake are treated independently because little correlated  large effective sample size and small confidence intervals for slope and intercept. ] --- # Challenge 2 ![:cube]() <br> **How will the ICC and the confidence intervals be affected in these two scenarios?** **Q1.** Fish trophic positions do not vary among lakes. <br> **Q2.** Fish trophic positions are similar within lakes but different among lakes. ??? Note: For this challenge, you can create a zoom poll. It should not take too much time. --- # Solution ![:cube]() **Q1.** Fish trophic positions do not vary among lakes. .alert[A1. Low ICC, small confidence intervals] <br> -- **Q2.** Fish trophic positions are similar within lakes but different among lakes. .alert[A2. High ICC, large confidence intervals] <br><br><br><br><br> -- **For more details on the ICC** : <br> Nakagawa and Schielzeth (2013) https://doi.org/10.1111/j.1469-185X.2010.00141.x Nakagawa *et al.* (2017) https://doi.org/10.1098/rsif.2017.0213 ??? Note: Repeat that the ICC measure the % of the variance explained by the random factor (variation between classes) and that the larger the difference between classes, the larger the confidence interval for the global slope and intercept will be large. --- # How to implement mixed models in R? <img style="float: right; width:40%;margin: 1%" src="images/lego.jpg"> ###### **Step 1.** *A priori* model building and data exploration <br> **Step 2.** Code potential models and model selection <br> **Step 3.** Model validation <br> **Step 4.** Model interpretation and visualization --- # Step 1. *A priori* model building **What we know *a priori*:** * We want to determine if the trophic position can be predicted by body length, while taking into account the variation between species and lakes. * So we want a model that looks like this: `$$TP_{ijk} \sim Length_i + Lake_j + Species_k + \epsilon_{ijk}$$` ??? Note: Describe the equation. The trophic position of a fish is a function of body length, the lake and the species of the individual. The last term is the error term (the difference between the expected trophic position and the true trophic position). --- # Step 1. Data exploration **Does the data have the right structure?** ```r fish.data <- read.csv('data/fishdata.csv') str(fish.data) # 'data.frame': 180 obs. of 4 variables: # $ Lake : chr "L1" "L1" "L1" "L1" ... # $ Fish_Species: chr "S1" "S1" "S1" "S1" ... # $ Fish_Length : num 105 195 294 414 237 ... # $ Trophic_Pos : num 2.6 2.7 2.74 2.74 2.79 ... ``` It is recommended to clean up your working space (`rm.list()`) before building a model. ??? Note: You can go fairly quickly for step 1 (slides 37-50). The participants should be relatively familiar with data exploration. --- # Step 1. Data exploration **Look at the distribution of samples for each factor:** ```r table(fish.data[ , c("Lake", "Fish_Species")]) # Fish_Species # Lake S1 S2 S3 # L1 10 10 10 # L2 10 10 10 # L3 10 10 10 # L4 10 10 10 # L5 10 10 10 # L6 10 10 10 ``` This dataset is perfectly balanced, but **mixed models can be used to analyze unbalanced experimental plans**, as it is often the case in ecology! ??? Note: Explain what is a balanced vs unbalanced dataset (i.e. equal number of samples accross classes). --- # Step 1. Data exploration **Look at the distribution of continuous variables:** ```r hist(fish.data$Fish_Length, xlab = "Length (mm)", main = "") hist(fish.data$Trophic_Pos, xlab = "Trophic position", main = "") ``` <img src="workshop07-pres-en_files/figure-html/unnamed-chunk-8-1.png" width="720" style="display: block; margin: auto;" /> Major deviations could cause heteroscedasticity problems. If necessary, make transformations. In this case, **the data seems OK**. --- # Step 1. Data exploration **Check for collinearity between your explanatory variables** The problem with collinear predictors is simply that they explain the same thing, so their effect on the response variable will be confounded in the model. In this example, there is no risk of collinearity since there is only one continuous variable. If you had another continuous variable (`Var2`), one simple way to check for collinearity would be: ```r plot(fish.data) cor(var1, var2) ``` Here is an [example of collinearity](https://yetanotheriteration.netlify.app/2018/01/high-collinearity-effect-in-regressions/). --- # Challenge 3 ![:cube]() What additional measures could we have taken in the field that could have been strongly correlated with body length? -- > An example is fish body mass - this variable is strongly correlated with fish length. Therefore, we do not want to include these two variables in the same model. ??? Note: The challenge should be quick. It's mostly for cutting the lecture and interacting with the audience. --- # Step 1. Data exploration **Consider the scale of your data** * If two variables in the same model have very different scales, the mixed model will likely return a `convergence error` when trying to compute the parameters. * The <a href="https://fr.wikipedia.org/wiki/Cote_Z_(statistiques)">Z-correction</a> standardizes the variables and solves this problem (function `scale()` in `R`): `$$z = \frac{x-mean(x)}{standard.deviation(x)}$$` --- # Step 1. Data exploration **Consider the scale of your data** * Body length  Long scale * Trophic position  Short scale --- # Step 1. Data exploration **Consider the scale of your data** Since our data have very different scales of variation, we apply the **Z-correction**. ```r # Standardized length, "by hand" fish.data$Z_Length <- (fish.data$Fish_Length - mean(fish.data$Fish_Length)) / sd(fish.data$Fish_Length) # Standardized trophic position, with the function scale fish.data$Z_TP <- scale(fish.data$Trophic_Pos) ``` --- # Step 1. Data exploration To find out if a mixed model is needed for your data, you need to determine whether it is important to consider the random effects that might influence the relationship you are interested in (in our case, lake and species). **We can do it by:** 1. Creating a linear model without random effect 2. Calculating the residuals of this linear model 3. Plot the residuals against the levels of the potential random factors --- # Step 1. Data exploration Create a linear model without random effects ```r lm.test <- lm(Z_TP ~ Z_Length, data = fish.data) ``` Calculate residuals of this linear model ```r lm.test.resid <- rstandard(lm.test) ``` --- # Step 1. Data exploration Plot the residuals against the levels of the potential random factors ```r plot(lm.test.resid ~ as.factor(fish.data$Fish_Species), xlab = "Species", ylab = "Standardized residuals") abline(0, 0, lty = 2) plot(lm.test.resid ~ as.factor(fish.data$Lake), xlab = "Lake", ylab = "Standardized residuals") abline(0, 0, lty = 2) ``` --- # Step 1. Data exploration Plot the residuals against the levels of the potential random factors <img src="workshop07-pres-en_files/figure-html/unnamed-chunk-14-1.png" width="720" style="display: block; margin: auto;" /> .alert[These patterns suggest that there is residual variance that could be explained by these factors, so they should be included in the model.] --- # How to implement an LMM in R? <img style = "float: right; width:40%;margin: 1%" src = "images/lego.jpg"> **Step 1. ** *A priori* model building and data exploration <br> ###### **Step 2.** Code potential models and model selection <br> **Step 3.** Model validation <br> **Step 4.** Model interpretation and visualization --- # Step 2. Code potential models **Translate this model...** `$$PT_{ijk} \sim Length_i + Lake_j + Species_k + \epsilon_{ijk}$$` **... into R code** ```r library(lme4) lmer(Z_TP ~ Z_Length + (1 | Lake) + (1 | Fish_Species), data = fish.data, REML = TRUE) ``` -- * `lmer`  "linear mixed model" function from `lme4` package * `(1 | Lake)`  indicate varying intercepts among lakes * `REML = TRUE`  estimation method --- # Step 2. Code potential models **What if we want the slopes to vary?** .center[  ] --- # Step 2. Code potential models **Different structures for the model:** - `(1 | Lake)` random effect by lake at the intercept - `(1 + Z_Length | Lake)` random effect by lake at the intercept and slope in response to the body length (NB: `(Z_Length | Lake)` gives the same random structure) - `(-1 + Z_Length | Lake)` to have only the random effect at the slope - `(1 | Lake) + (1 | Species)` for crossed random effects (e.g., the effect of species i is the same across lakes) - `(1 | Lake:Fish_Species)` for the interaction between 2 random effects (e.g., the effect of species i change across lakes) - If your dataset includes nested random effects (e.g., if we had an random effect for lakes nested in a random effect for the region where the lake is), you could use `/` to specify them, e.g. `(1 | factor1 / factor2)` if `factor2` is nested in `factor1` ([see ](https://stats.stackexchange.com/questions/228800/crossed-vs-nested-random-effects-how-do-they-differ-and-how-are-they-specified)). ??? Note: Make sure to make the distinction between crossed random effects, interactions between random effects and nested random effects. --- # Note on estimation methods REML (*Restricted Maximum Likelihood*) is the default method in `lmer` (see `?lmer`). The *Maximum Likelihood* (ML) method underestimates model variances by a factor of `\((n-k)/n\)`, where `\(k\)` is the number of fixed effects. The REML method corrects this bias. See this [article](https://towardsdatascience.com/maximum-likelihood-ml-vs-reml-78cf79bef2cf) for more information on the difference between ML and REML. ??? Note:Explain that the estimation methods is the algorithm used to estimate the parameters (slopes, intercepts) of the model. Specify that they don't need to understand the details, but they can get more information if they follow the hyperlink. The next slides summarize the main point they should know about. --- # Note on estimation methods **Remember that you should use:** * **REML** to compare models with different **random effect** structure and the same fixed effect structure * **ML** to compare models with different **fixed effect** structure and the same random effect structure * **ML** to compare models **with random effects** to models **without random effects** ??? Note: This is probably hard to understand, but you can specify that the next few challenges should help them understand the use of each estimation methods --- # Challenge 4 ![:cube]() Re-write the following code so that the **slopes** of the relationship between trophic position and body length **vary by lake and species**: ```r lmer(Z_TP ~ Z_Length + (1 | Lake) + (1 | Fish_Species), data = fish.data, REML = TRUE) # Linear mixed model fit by REML ['lmerMod'] # Formula: Z_TP ~ Z_Length + (1 | Lake) + (1 | Fish_Species) # Data: fish.data # REML criterion at convergence: 72.4662 # Random effects: # Groups Name Std.Dev. # Lake (Intercept) 0.4516 # Fish_Species (Intercept) 0.9301 # Residual 0.2605 # Number of obs: 180, groups: Lake, 6; Fish_Species, 3 # Fixed Effects: # (Intercept) Z_Length # 6.858e-14 4.198e-01 ``` --- # Solution ![:cube]() ```r lmer(Z_TP ~ Z_Length + (1 + Z_Length | Lake) + (1 + Z_Length | Fish_Species), data = fish.data, REML = TRUE) # Linear mixed model fit by REML ['lmerMod'] # Formula: # Z_TP ~ Z_Length + (1 + Z_Length | Lake) + (1 + Z_Length | Fish_Species) # Data: fish.data # REML criterion at convergence: 20.5786 # Random effects: # Groups Name Std.Dev. Corr # Lake (Intercept) 0.45280 # Z_Length 0.02379 -0.82 # Fish_Species (Intercept) 0.93097 # Z_Length 0.15727 1.00 # Residual 0.22341 # Number of obs: 180, groups: Lake, 6; Fish_Species, 3 # Fixed Effects: # (Intercept) Z_Length # -0.0009025 0.4223738 # optimizer (nloptwrap) convergence code: 0 (OK) ; 0 optimizer warnings; 1 lme4 warnings ``` --- # Step 2. Model selection * To determine if you have built the best mixed model based on your prior knowledge, you should compare this *a priori* model to other alternative models. * With the dataset you are working on, there are several alternative models that might better fit your data. --- # Challenge 5 ![:cube]() Make a list of 7 alternative models that could be compared to this one: ```r lmer(Z_TP ~ Z_Length + (1 | Lake) + (1 | Fish_Species), data = fish.data, REML = TRUE) ``` .comment[Note: If we had different fixed effects between the models or a model without random effects, we would have to specify `REML = FALSE` to compare with likelihood methods like AIC.] ??? Note: To determine if you have built the best mixed model based on your prior knowledge, you should compare this a priori model to other alternative models. With the dataset you are working on, there are several alternative models that might better fit your data. --- # Solution ![:cube]() We first will also build the **basic linear model** `lm()` because it is always useful to see the variation in the AICc values. ```r M0 <- lm(Z_TP ~ Z_Length, data = fish.data) ``` However, to compare this model to the LMMs, it is important to .alert[change the estimation method to ML (`REML=FALSE`)] because `lm()` does not use the same estimation method as `lmer()`. --- # Solution ![:cube]() ```r # Linear model with no random effects M0 <- lm(Z_TP ~ Z_Length, data = fish.data) # Full model with varying intercepts M1 <- lmer(Z_TP ~ Z_Length + (1 | Fish_Species) + (1 | Lake), data = fish.data, REML = FALSE) # Full model with varying intercepts and slopes M2 <- lmer(Z_TP ~ Z_Length + (1 + Z_Length | Fish_Species) + (1 + Z_Length | Lake), data = fish.data, REML = FALSE) # No Lake, varying intercepts only M3 <- lmer(Z_TP ~ Z_Length + (1 | Fish_Species), data = fish.data, REML = FALSE) # No Species, varying intercepts only M4 <- lmer(Z_TP ~ Z_Length + (1 | Lake), data = fish.data, REML = FALSE) # No Lake, varying intercepts and slopes M5 <- lmer(Z_TP ~ Z_Length + (1 + Z_Length | Fish_Species), data = fish.data, REML = FALSE) # No Species, varying intercepts and slopes M6 <- lmer(Z_TP ~ Z_Length + (1 + Z_Length | Lake), data = fish.data, REML = FALSE) # Full model with varying intercepts and slopes only varying by lake M7 <- lmer(Z_TP ~ Z_Length + (1 | Fish_Species) + (1 + Z_Length | Lake), data = fish.data, REML = FALSE) # Full model with varying intercepts and slopes only varying by species M8 <- lmer(Z_TP ~ Z_Length + (1 + Z_Length | Fish_Species) + (1 | Lake), data = fish.data, REML = FALSE) ``` --- # Solution ![:cube]() When fitting LMMs with `lmer()`, you may encounter some errors or warnings such as: * `boundary (singular) fit: see ?isSingular`, see [this discussion ](https://stats.stackexchange.com/questions/378939/dealing-with-singular-fit-in-mixed-models) * `Model failed to converge with max|grad| ...`, see [this discussion ](https://stats.stackexchange.com/questions/242109/model-failed-to-converge-warning-in-lmer) Here a [list](https://rdrr.io/cran/lme4/man/troubleshooting.html) of possible problems and how to troubleshoot them. --- # Step 2. Model selection * Now that we have a list of potential models, we want to compare them to each other to select the one(s) with the highest predictive power given the data. * Models can be compared by using the `AICc` function from the` MuMIn` package. * The Akaike Information Criterion (AIC) is a **measure of model quality** that can be used to compare models. * `AICc` corrects bias created by small sample sizes. To find the AICc value of a model, use: ```r library(MuMIn) MuMIn::AICc(M1) # [1] 77.30499 ``` --- # Step 2. Model selection To group all AICc values into a single table, use: ```r AIC.table <- MuMIn::model.sel(M0, M1, M2, M3, M4, M5, M6, M7, M8) (AIC.table <- AIC.table[ , c("df", "logLik", "AICc", "delta")]) # df logLik AICc delta # M8 7 -8.597929 31.84702 0.000000 # M2 9 -8.216010 35.49084 3.643823 # M1 5 -33.480080 77.30499 45.457965 # M7 7 -33.186374 81.02391 49.176889 # M5 6 -128.310995 269.10754 237.260517 # M3 4 -134.532965 277.29450 245.447480 # M4 4 -224.715763 457.66010 425.813076 # M6 6 -224.671201 461.82795 429.980930 # M0 3 -236.861362 479.85909 448.012065 ``` * `df` is the degree of freedom * `logLik` is the loglikelihood * `delta` is the AICc difference with the lowest value We only displayed part of the results returned by the function `model.sel()`, see `?model.sel` for more information. ??? Note: Then we can select only the columns of interest to print into a table `df` is the degree of freedom `logLik` is the loglikelihood `delta` is the AICc difference with the lowest value. --- # Step 2. Model selection What do these AICc values mean? ```r AIC.table # df logLik AICc delta # M8 7 -8.597929 31.84702 0.000000 # M2 9 -8.216010 35.49084 3.643823 # M1 5 -33.480080 77.30499 45.457965 # M7 7 -33.186374 81.02391 49.176889 # M5 6 -128.310995 269.10754 237.260517 # M3 4 -134.532965 277.29450 245.447480 # M4 4 -224.715763 457.66010 425.813076 # M6 6 -224.671201 461.82795 429.980930 # M0 3 -236.861362 479.85909 448.012065 ``` * The model with the smallest AICc has the highest predictive power. * Some suggest that if models are within 2 AICc units of each other then they are equally plausible. * Let's take a closer look at M8 and M2. We can exclude other model because they have such higher AICc. --- # Step 2. Model selection ```r M8 <- lmer(Z_TP ~ Z_Length + (1 + Z_Length | Fish_Species) + (1 | Lake), data = fish.data, REML = TRUE) M2 <- lmer(Z_TP ~ Z_Length + (1 + Z_Length | Fish_Species) + (1 + Z_Length | Lake), data = fish.data, REML = TRUE) MuMIn::model.sel(M2,M8)[ , c("df", "logLik", "AICc", "delta")] # df logLik AICc delta # M8 7 -10.84011 36.33137 0.000000 # M2 9 -10.28932 39.63747 3.306098 ``` Model `M8` seems to be the best among all models that we tested. Note that we use now REML (i.e. `REML = TRUE`) as we are comparing two models with nested random effects and the same fixed effect structure. --- # Step 2. Model selection What is the structure of the best model? ```r M8 <- lmer(Z_TP ~ Z_Length + (1 + Z_Length | Fish_Species) + (1 | Lake), data = data, REML = FALSE) ``` Both the intercepts and slopes of the relationship between trophic position and length may vary by fish species, but only the intercepts may vary by lake. .pull-left[] .pull-right[] --- # Step 2. Model selection Once the best model is selected, the estimation method must be reset to `REML = TRUE`. ```r M8 <- lmer(Z_TP ~ Z_Length + (1 + Z_Length | Fish_Species) + (1 | Lake), data = fish.data, REML = TRUE) ``` --- exclude: true # Challenge 6 ![:cube]() Take 2 minutes with your neighbour to draw out the model structure of M2. Biologically, how does it differ from M8? Why is it not surprising that it's AICc value was 2nd best? ```r M8 <- lmer(Z_TP ~ Z_Length + (1 + Z_Length | Fish_Species) + (1 | Lake), data = fish.data, REML = TRUE) M2 <- lmer(Z_TP ~ Z_Length + (1 + Z_Length | Fish_Species) + (1 + Z_Length | Lake), data = fish.data, REML = TRUE) ``` --- exclude: true # Solution **Group discussion...** -- exclude: true .alert[M2] The trophic position is a function of length and both the intercept and the effect of length on trophic position can vary by fish species and lake. * .small[the intrinsic factors of species and lakes cause the relationship between trophic position and length to differ (i.e. both slopes and intercepts) (i.e. slopes and intercepts)] .alert[M8] The trophic position is a function of length and both the intercept and the effect of length on trophic position can vary by fish species but only the intercept can vary by lake (not the slope of trophic position on length). * .small[intrinsic factors of species alone cause this relationship to differ (i.e. slopes) and that on average trophic positions might be higher or lower in one lake versus another (i.e. intercepts).] --- # How to implement an LMM in R? <img style="float: right; width:40%;margin: 1%" src="images/lego.jpg"> **Step 1. ** *A priori* model building and data exploration <br> **Step 2.** Code potential models and model selection <br> ###### **Step 3.** Model validation <br> **Step 4 -** Model interpretation and visualization --- # Step 3. Model validation **You must verify that the model follows all the basic assumptions:** <br> **3.1 Check the homogeneity of the variance** - Plot predicted values vs residual values <br> **3.2 Check the independence of the model residuals** - Plot residuals vs each covariate of the model - Plot residuals vs each covariate not included in the model <br> **3.3 Check the normality of the model residuals** - Histogram of residuals --- # Step 3. Model validation **3.1 Check the homogeneity of the variance** ```r plot(resid(M8) ~ fitted(M8), xlab = 'Predicted values', ylab = 'Normalized residuals') abline(h = 0, lty = 2) ``` <img src="workshop07-pres-en_files/figure-html/unnamed-chunk-29-1.png" width="324" style="display: block; margin: auto;" /> Homogeneous dispersion of the residuals  the assumption is respected! --- # Step 3. Model validation **3.1 Check the homogeneity of the variance** .center[  ] ??? Note: Heteroscedasticity refers to the circumstance in which the variability of a variable is unequal across the range of values of a second variable that predicts it. A scatterplot of these variables will often create a cone-like shape, as the scatter (or variability) of the dependent variable widens or narrows as the value of the independent variable increases. The inverse of heteroscedasticity is homoscedasticity, which indicates that a dependent variable variability is equal across values of an independent variable. --- # Step 3. Model validation **3.2 Check the independence of the model residuals with each covariate** ```r plot(resid(M8) ~ fish.data$Z_Length, xlab = "Length", ylab = "Normalized residuals") abline(h = 0, lty = 2) boxplot(resid(M8) ~ Fish_Species, data = fish.data, xlab = "Species", ylab = "Normalized residuals") abline(h = 0, lty = 2) boxplot(resid(M8) ~ Lake, data = fish.data, xlab = "Lakes", ylab = "Normalized residuals") abline(h = 0, lty = 2) ``` --- # Step 3. Model validation **3.2 Check the independence of the model residuals with each covariate** <img src="workshop07-pres-en_files/figure-html/unnamed-chunk-31-1.png" width="864" style="display: block; margin: auto;" /> Homogeneous dispersion of the residuals around 0  no pattern of residuals depending on the variable, the assumption is respected! .comment[Note: The clusters are due to the data structure, where fish of only 5 size classes (large, small, and three groups in between) were captured.] --- # Step 3. Model validation **3.2 Check the independence of the model residuals with each covariate** - Plot residuals vs each covariate not included in the model - If you observe patterns in these plots, you will know that there is variation in your dataset that could be explained by these covariates. You should consider including them in your model. - Since we have included all the measured variables in our model, we can not do this step. --- # Step 3. Model validation **3.3 Check the normality of the model residuals** * Residuals following a normal distribution indicate that the model is not biased. ```r hist(resid(M8)) ``` <img src="workshop07-pres-en_files/figure-html/unnamed-chunk-32-1.png" width="360" style="display: block; margin: auto;" /> --- # How to implement an LMM in R? <img style="float: right; width:40%;margin: 1%" src="images/lego.jpg"> **Step 1:** *A priori* model building and data exploration <br> **Step 2:** Code potential models and model selection <br> **Step 3:** Model validation <br> ###### **Step 4:** Model interpretation and visualization --- # Step 4. Interpretation and visualization ```r (summ_M8 <- summary(M8)) # Linear mixed model fit by REML ['lmerMod'] # Formula: Z_TP ~ Z_Length + (1 + Z_Length | Fish_Species) + (1 | Lake) # Data: fish.data # # REML criterion at convergence: 21.7 # # Scaled residuals: # Min 1Q Median 3Q Max # -2.77185 -0.60165 0.05589 0.64239 2.27777 # # Random effects: # Groups Name Variance Std.Dev. Corr # Lake (Intercept) 0.20505 0.4528 # Fish_Species (Intercept) 0.86676 0.9310 # Z_Length 0.02465 0.1570 1.00 # Residual 0.05039 0.2245 # Number of obs: 180, groups: Lake, 6; Fish_Species, 3 # # Fixed effects: # Estimate Std. Error t value # (Intercept) -0.0009059 0.5686591 -0.002 # Z_Length 0.4222698 0.0921927 4.580 # # Correlation of Fixed Effects: # (Intr) # Z_Length 0.929 # optimizer (nloptwrap) convergence code: 0 (OK) # boundary (singular) fit: see help('isSingular') ``` ??? Note: Let’s take a closer look at our final model using the summary() function. How can we interpret this information? --- # Step 4. Interpretation and visualization # Random effects: # Groups Name Variance Std.Dev. Corr # Lake (Intercept) 0.20500 0.4528 # Fish_Species (Intercept) 0.86621 0.9307 # Z_Length 0.02464 0.1570 1.00 # Residual 0.05040 0.2245 - `Groups`: grouping factors - `Name`: - `(Intercept)` for the intercepts, - or the name of the variable on which the random slope is estimated (`Z_length` in this example) - `Variance` the variance of the estimated effect (`Std.Dev.` is the standard deviation of this estimate) - `Corr` the correlation between the random interpet and the random slope for a given grouping factor (see [this dicussion ](https://stats.stackexchange.com/questions/320978/understanding-and-coding-random-intercept-correlation-lmer)) ??? Note: Let’s go section by section and try to understand what we are looking at. The output is broken up into descriptions of the Random effects (things we allow to vary based on the normal distribution) and Fixed effects (things we estimate in the same way as classical regression). --- # Step 4. Interpretation and visualization # Fixed effects: # Estimate Std. Error t value # (Intercept) -0.000906 0.568493 -0.002 # Z_Length 0.422270 0.092170 4.581 This part presents the fixed effect estimates. The value of the t statistics [(Student test)](https://en.wikipedia.org/wiki/T-statistic) is shown **without the p-value** (it is a decision from the package authors, see why in [this discussion](https://stats.stackexchange.com/questions/185360/t-value-associated-with-nlme-lme4)). This statistics could be used as it is. You could also calculate the 95% confidence interval (CI) with this equation: $$ CI = Estimate \pm 1.96*Std.Error $$ If 0 is in the interval, then the parameter is not significantly different from zero at a threshold of `\(\alpha\)` = 0.05. --- # Step 4. Interpretation and visualization **Some useful functions** - `coef(M8)` and `ranef(M8)` return random effects of model M8 - `coef(summary(M8))` returns fixed effects - `sigma(M8)` returns standard deviation of residuals - `fitted(M8)` returns predicted values by the model - `residuals(M8)` returns residuals --- exclude: true # Step 4. Interpretation and visualization .center[  ] <br> .center[  ] If the 95% confidence interval of the slope ($slope ± SE * 1.96$) includes zero, the slope (here = 0.4223), and therefore the effect of length on trophic position, is not significantly different from zero at the threshold `\(\alpha\)` = 0.05. --- # Challenge 6 ![:cube]() 1. What is the slope and confidence interval of the Z_Length variable in the M8 model? 2. Is the slope of Z_Length significantly different from 0? --- # Solution ![:cube]() 1. What is the slope and confidence interval of the Z_Length variable in the M8 model? - slope = 0.422; - CI upper limit = 0.4223 + 0.09*1.96 = 0.5987 - CI lower limit = 0.4223 - 0.09*1.96 = 0.2459 2. Is the slope of Z_Length significantly different from 0? - Yes, because the CI [0.2459, 0.5987] does not include 0 ??? Note: If the 95% confidence interval of the slope includes zero, the slope (here = 0.4223), and therefore the effect of length on trophic position, is not significantly different from zero at the alpha threshold of 0.05. --- # Challenge 7 ![:cube]() It is possible to visualize graphically the different intercepts and slopes of the model to better interpret the results. Take 2 minutes to think about different ways to represent the results of M8. *Hint: consider the different "levels" of the model* --- # Solution ![:cube]() a) Figure with all data grouped b) Figure by species c) Figure by lake --- # Solution ![:cube]() To produce these figures, we need: - The coefficients of the full model that are in the model summary ```r summ_M8$coefficients # Estimate Std. Error t value # (Intercept) -0.0009059101 0.56865914 -0.001593064 # Z_Length 0.4222697624 0.09219268 4.580296200 ``` - Intercept = `\(-9.0591014\times 10^{-4}\)` - Slope = `\(0.4222698\)` --- # Solution ![:cube]() To produce these figures, we need: - The coefficients for each level of the model, which can be obtained with the `coef` function ```r coef(M8) # $Lake # (Intercept) Z_Length # L1 -0.085984110 0.4222698 # L2 0.002205189 0.4222698 # L3 -0.301816663 0.4222698 # L4 -0.574040006 0.4222698 # L5 0.218650190 0.4222698 # L6 0.735549939 0.4222698 # # $Fish_Species # (Intercept) Z_Length # S1 -1.0752976 0.2410721 # S2 0.5597866 0.5168313 # S3 0.5127933 0.5089059 # # attr(,"class") # [1] "coef.mer" ``` --- # Solution ![:cube]() a) Figure with all data grouped ```r library(ggplot2) # Create a simplified ggplot theme fig <- theme_bw() + theme(panel.grid.minor=element_blank(), panel.grid.major=element_blank(), panel.background=element_blank()) + theme(strip.background=element_blank(), strip.text.y = element_text()) + theme(legend.background=element_blank()) + theme(legend.key=element_blank()) + theme(panel.border = element_rect(colour="black", fill=NA)) plot <- ggplot(aes(Z_Length, Z_TP), data = fish.data) Plot_AllData <- plot + geom_point() + xlab("Length (mm)") + ylab("Trophic position") + labs(title = "All data") + fig Plot_AllData + geom_abline(intercept = summ_M8$coefficients[1,1], slope = summ_M8$coefficients[2,1]) ``` --- # Solution ![:cube]() a) Figure with all data grouped <img src="workshop07-pres-en_files/figure-html/unnamed-chunk-37-1.png" width="432" style="display: block; margin: auto;" /> --- # Solution ![:cube]() b) Figure by species ```r # create a table with the coefs to facilitate their manipulation Lake.coef <- coef(M8)$Lake colnames(Lake.coef) <- c("Intercept", "Slope") Species.coef <- coef(M8)$Fish_Species colnames(Species.coef) <- c("Intercept", "Slope") Plot_BySpecies <- plot + geom_point(aes(colour = factor(Fish_Species)), size = 4) + xlab("Length (mm)") + ylab("Trophic position") + labs(title = "By species") + fig # Add regression lines for each species Plot_BySpecies + geom_abline(intercept = Species.coef[1,1], slope = Species.coef[1,2], col = "coral2") + geom_abline(intercept = Species.coef[2,1], slope = Species.coef[2,2], col = "green4") + geom_abline(intercept = Species.coef[3,1], slope = Species.coef[3,2], col = "blue1") ``` --- # Solution ![:cube]() b) Figure by species <img src="workshop07-pres-en_files/figure-html/unnamed-chunk-39-1.png" width="576" style="display: block; margin: auto;" /> --- # Solution ![:cube]() c) Figure by lake ```r Plot_ByLake <- plot + geom_point(aes(colour = factor(Lake)), size = 4) + xlab("Length (mm)") + ylab("Trophic Position") + labs(title = "By Lake") + fig # Add in regression lines with the intercepts specific to each lake Plot_ByLake + geom_abline(intercept = Lake.coef[1,1], slope = Lake.coef[1,2], col = "coral2") + geom_abline(intercept = Lake.coef[2,1], slope = Lake.coef[2,2], col = "khaki4") + geom_abline(intercept = Lake.coef[3,1], slope = Lake.coef[3,2], col = "green4") + geom_abline(intercept = Lake.coef[4,1], slope = Lake.coef[4,2], col = "darkgoldenrod") + geom_abline(intercept = Lake.coef[5,1], slope = Lake.coef[5,2], col = "royalblue1") + geom_abline(intercept = Lake.coef[6,1], slope = Lake.coef[6,2], col = "magenta3") ``` --- # Solution ![:cube]() c) Figure by lake <img src="workshop07-pres-en_files/figure-html/unnamed-chunk-41-1.png" width="576" style="display: block; margin: auto;" /> --- exclude: true # Mixed model and ecological data Mixed models are very useful for taking into account the complex structure of ecological data while not loosing too many degrees of freedom .center[  ] --- exclude: true # Challenge 8 ![:cube]() **Situation:** * You have inventoried species richness **in 1000 quadrats** that are within **10 different sites** which are also within **10 different forests**. * You also **measured productivity** in each **quadrat**. * You want to know if productivity is a good predictor of biodiversity .alert[What mixed model could you use for this dataset?] --- exclude: true # Solution!![:cube]() ```r lmer(Biodiv ~ Productivity + (1 | Forest / Site)) ``` Here the random effects are nested (i.e. Sites within forest) and not crossed. Why use `(1 | Forest / Site)` rather than `(1 | Forest) + (1 | Site)`? See [the answer in ](https://stats.stackexchange.com/questions/228800/crossed-vs-nested-random-effects-how-do-they-differ-and-how-are-they-specified)! --- exclude: true # Challenge 10![:cube]() **Situation:** * You have collected **200 fish** from **12 different sites** evenly distributed across **4 habitat types** that are found within **the same lake**. * You measured **the length of each fish** and the **amount of mercury in its tissue**. * You want to know if habitat is a good predictor of mercury concentration. .alert[What mixed model could you use for this dataset?] --- exclude: true ## Solution!![:cube]() ```r lmer(Mercury ~ Length * Habitat_Type + (1 | Site)) ``` --- class: inverse, center, middle # GLMMs --- exclude: true # Review: Linear Mixed Models **Review of LMM Workshop**: - Structure in the dataset or correlation among observations can result in **lack of independence among observations** sampled from same sites or time points - Account for this by including r**andom effect terms** **Random effects**: - Parameter is a sample from the population, i.e. the subjects you happen to be working with - Explains the variance of the response variable **Fixed effects**: - Parameter is reproducible, i.e. would be the same across studies - Explain the mean of the response variable --- exclude: true # Review: Linear Mixed Models .pull-left[ **Shrinkage estimates** - Random effects are often called **shrinkage estimates** because they represent a weighted average of the data and the overall fit (fixed effect) - The random effect shrinkage toward the overall fit (fixed effect) is more severe if the within-group variability is large compared to the among-group variability ] .pull-right[ <br>  ] --- # Generalized Linear Mixed Models (GLMMs) **Extension of GLMs to account for additional structure in dataset** Follows similar steps introduced in LMM section : **1.** Incorporate random effects (like LMMs) **2.** Handle non-normal data (letting errors take on different distribution families - e.g. Poisson or negative binomial) (like GLMs) --- exclude: true # Review: Generalized Linear Models (GLM) _Include 2-3 slides recalling GLM_ --- # Implementing GLMMs in R Import the `Arabidopsis` dataset `arabidopsis.csv` into `R`. ```r dat.tf <- read.csv("data/arabidopsis.csv") ``` Description: data on genetic variation in responses to fertilization and simulated herbivory in *Arabidopsis*. For more details see [here](https://www.rdocumentation.org/packages/lme4/versions/1.1-27.1/topics/Arabidopsis) ```r # In this dataset, the column headers are defined as: # reg: region (e.g. NL for Netherlands) # popu: factor with a level for each population # gen: factor with a level for each genotype # rack: a nuisance factor with 2 levels, one for each of two greenhouse racks # nutrient: factor with levels for low (value = 1) or high (value = 8) # amd: factor with levels for no damage or simulated herbivory # total.fruits: integer indicating the number of fruits per plant ``` The effect of nutrient availability and herbivory (**fixed effects**) on the fruit production (**response variable**) of *Arabidopsis thaliana* was evaluated by measuring 625 plants across 9 different populations, each comprised of 2 to 3 different genotypes (**random effects**) --- # Choosing an error distribution The response variable is count data which suggests we need a **Poisson distribution** (i.e. the variance is equal to the mean) <img src="workshop07-pres-en_files/figure-html/unnamed-chunk-46-1.png" width="432" style="display: block; margin: auto;" /> Read more about error distributions [here](http://ganymede.nmsu.edu/holtz/a535/ay535notes/node3.html) However, as we will soon see, the variance increases with the mean much more rapidly than expected under the Poisson distribution... In other words, the data is *overdispersed*. --- # Overdispersion: Review For a Poisson distribution, `\(var[y] = μ\)`. However, in practice the apparent variance of the data often exceeds `\(μ\)`, reflecting *overdispersion* in the model parameters. <br> Overdispersion arises because the mean `\(μ\)` innately varies, even when all the explanatory variables are fixed, or because the events that are being counted are positively correlated. <br> .center[*Are you able to think of a situation in biology that can cause overdispersion?*] <br> **Why should we care about overdispersion?** >Tests on the explanatory variables will generally appear to be more significant and confidence intervals for the parameters will be narrower than warranted by the data! --- # Exploring variance To illustrate heterogeneity in variance we will first create boxplots of the **log** of total fruit production (**response variable**) versus different environmental factors. Let's create new variables that represent every combination of **nutrient** x **clipping** x **random factor** ```r dat.tf <- within(dat.tf, { # genotype x nutrient x clipping gna <- interaction(gen,nutrient,amd) gna <- reorder(gna, total.fruits, mean) # population x nutrient x clipping pna <- interaction(popu,nutrient,amd) pna <- reorder(pna, total.fruits, mean) }) ``` --- # Exploring variance .small[ ```r # Boxplot of total fruits vs genotype x nutrient x clipping interaction ggplot(data = dat.tf, aes(factor(x = gna), y = log(total.fruits + 1))) + geom_boxplot(colour = "skyblue2", outlier.shape = 21, outlier.colour = "skyblue2") + ylab("log (Total fruits)\n") + # \n creates a space after the title xlab("\nGenotype x nutrient x clipping") + # space before the title theme_bw() + theme(axis.text.x = element_blank()) + stat_summary(fun = mean, geom = "point", colour = "red") ``` <img src="workshop07-pres-en_files/figure-html/unnamed-chunk-48-1.png" width="576" style="display: block; margin: auto;" /> ] .comment[Similarly, the variance of total fruits shows a large amount of heterogeneity among populations (population x nutrient x clipping interaction).] --- # Choosing error distribution As we just saw, there is a large amount of heterogeneity among group variances even when the response variable is transformed (i.e. log). If we plot the **group variances vs group means** (genotype x nutrient x clipping grouping), we can appreciate that the Poisson family is the least appropriate distribution (i.e. variances increase much faster than the means). <img src="workshop07-pres-en_files/figure-html/unnamed-chunk-49-1.png" width="576" style="display: block; margin: auto;" /> --- # Poisson GLMM **Given the mean-variance relationship, we will most likely need a model with overdispersion.** To understand why, let's start with a Poisson model. An assumption that must be fulfilled on Poisson distribution is the mean value of data equals to the variance value (or so-called equidispersion). If the variance value is greater than the mean value, it is called **overdispersion**. -- To run a GLMM in `R` we will use the `glmer()` function from the `lme4` package : ```r library(lme4) mp1 <- glmer(total.fruits ~ nutrient*amd + rack + status + (1|popu)+ (1|gen), data = dat.tf, family = "poisson") ``` **Random effects**: `(1|popu)` and `(1|gen)`. We model random intercepts for both factors so that total fruit production can vary among populations (`popu`) and genotypes (`gen`). --- # Overdispersion check We can check for overdispersion using the `overdisp_fun()` function (Bolker *et al*. 2011) which divides the Pearson residuals by the residual degrees of freedom. The function tests whether **the ratio is greater than 1**. -- Let's run the test : ```r # Download the glmm_funs.R code from the wiki page and source it to run the function source(file = "data/glmm_funs.R") # Overdispersion? overdisp_fun(mp1) # chisq ratio p logp # 15755.86835 25.57771 0.00000 -6578.47028 ``` -- .alert[Ratio is significantly > 1] <br><br> As expected, we need to model a **different distribution** where the variance increases more rapidly than the mean. --- # Negative binomial GLMM .small[(Poisson-gamma)] **Option 1.** Recall that the **negative binomial** (or Poisson-gamma) distribution meets the assumption that the variance is proportional to the square of the mean <br> -- We model this distribution using the function `glmer.nb()` : ```r mnb1 <- glmer.nb(total.fruits ~ nutrient*amd + rack + status + (1|popu)+ (1|gen), data = dat.tf, control = glmerControl(optimizer = "bobyqa")) # Control argument specifies the way we optimize the parameter values ``` -- We test again for overdispersion : ```r # Overdispersion? overdisp_fun(mnb1) # chisq ratio p logp # 721.034461131 1.170510489 0.002143425 -6.145350288 ``` .alert[Ratio is now much closer to 1 although p < 0.05] --- # Poisson-lognormal GLMM **Option 2.** The **Poisson-lognormal** distribution <br> This can be achieved simply by placing an **observation-level random effect** in the model formula. See Harrison (2014) for further details https://doi.org/10.7717/peerj.616. <br> -- To do so, we first create a variable named `X` : ```r # This variable is already in your data "dat.tf", but here is how we create it : dat.tf$X <- 1:nrow(dat.tf) ``` We take overdispersion into account by adding the random effect `(1|X)` in the formula : ```r mpl1 <- glmer(total.fruits ~ nutrient*amd + rack + status + (1|X) + (1|popu)+ (1|gen), data = dat.tf, family = "poisson", control = glmerControl(optimizer = "bobyqa")) ``` --- # Poisson-lognormal GLMM <br><br><br> Finally, we test for overdispersion : ```r overdisp_fun(mpl1) # chisq ratio p logp # 1.775360e+02 2.886764e-01 1.000000e+00 -3.754786e-73 ``` .alert[Ratio now meets our criterion, thus, < 1] --- # Poisson-lognormal GLMM **Let's visualize the model parameters** Can be obtained using the `coefplot2()` function from the `coefplot2` package : <br><br> .alert[ This package is not on CRAN! We install it from GitHub using the `remotes` package.] ```r if (!require("coefplot2")) remotes::install_github("palday/coefplot2", subdir = "pkg", upgrade = "always", quiet = TRUE) library(coefplot2) ``` --- # Poisson-lognormal GLMM .pull-left[ ```r # Variance terms coefplot2(mpl1, ptype = "vcov", intercept = TRUE, main = "Random effect variance") ``` <img src="workshop07-pres-en_files/figure-html/unnamed-chunk-57-1.png" width="432" style="display: block; margin: auto;" /> ] .pull-right[ ```r # Fixed effects coefplot2(mpl1, intercept = TRUE, main = "Fixed effect coefficient") ``` <img src="workshop07-pres-en_files/figure-html/unnamed-chunk-58-1.png" width="432" style="display: block; margin: auto;" /> ] .comment[Note: error bars are only shown for the fixed effects because `glmer()` does not model uncertainty for random effects.] ??? Note: The random effect variance (σ2i) represents the mean random effect variance of the model. Since this variance reflects the “average” random effects variance for mixed models, it is also appropriate for models with more complex random effects structures, like random slopes or nested random effects. The fixed effects variance (σ2f) is the variance of the matrix-multiplication β∗X (parameter vector by model matrix). --- # Poisson-lognormal GLMM **Let's visualize the random effects** You can extract the random effect predictions using `ranef()` and plot them using a `dotplot()` from the `lattice` package. <br> -- We observe differences **among population** : - Spanish populations (SP) have larger values than Swedish (SW) or Dutch (NL) populations We observe mild differences **among genotypes** : - Difference among genotypes largely driven by genotype 34 ```r library(gridExtra) library(lattice) # dotplot code pp <- list(layout.widths = list(left.padding = 0, right.padding = 0), layout.heights = list(top.padding = 0, bottom.padding = 0)) r2 <- ranef(mpl1, condVar = TRUE) d2 <- dotplot(r2, par.settings = pp) grid.arrange(d2$gen, d2$popu, nrow = 1) ``` <img src="workshop07-pres-en_files/figure-html/unnamed-chunk-59-1.png" width="432" style="display: block; margin: auto;" /> --- # Poisson-lognormal GLMM **Let's visualize the random effects** <br> <img src="workshop07-pres-en_files/figure-html/unnamed-chunk-60-1.png" width="648" style="display: block; margin: auto;" /> --- # Model selection The same methods can be used with a glmm or lmm to choose between models with various random intercepts and/or random slopes and to choose fixed effects to keep in final model. <br> Here are two approaches : - an **information theoretic approach** (e.g., AICc - Workshop 5) - a **frequentist approach** (where the significance of each term is evaluated using a likelihood ratio test; LRT, with the `anova()` function) --- # Model selection We first code potential models and compare them using AICc.comment[*]: ```r mpl2 <- update(mpl1, . ~ . - rack) # model without rack mpl3 <- update(mpl1, . ~ . - status) # model without status mpl4 <- update(mpl1, . ~ . - amd:nutrient) # without amd:nutrient interaction aic_tab <- MuMIn::model.sel(mpl1, mpl2, mpl3, mpl4) (round(aic_table <- aic_tab[ , c("AICc", "delta", "df")], digits = 2)) # AICc delta df # mpl1 5015.73 0.00 10 # mpl4 5017.11 1.38 9 # mpl3 5017.22 1.49 8 # mpl2 5070.75 55.02 9 ``` .comment[*NB: We do not cover all possible models above, however, the interaction `amd:nutrient` can only be evaluated if both amd and nutrient (i.e., the main effects) are included in the model. ] --- # Model selection Alternatively, we can use `drop1()` and `dfun()` functions to evaluate our fixed effects (`dfun()` converts the AIC values returned by the `drop1()` into `\(\Delta\)`AIC values) .small[ ```r dd_LRT <- drop1(mpl1, test = "Chisq") (dd_AIC <- dfun(drop1(mpl1))) # Single term deletions # # Model: # total.fruits ~ nutrient * amd + rack + status + (1 | X) + (1 | # popu) + (1 | gen) # npar dAIC # <none> 0.000 # rack 1 55.083 # status 2 1.612 # nutrient:amd 1 1.444 ``` ] -- - Strong **rack** effect (dAIC = 55.08 if we remove this variable) - Effects of **status** and **interaction** term are weak (dAIC < 2) --- # Model selection Let's start by **removing the non-significant interaction** term to test main effects of nutrient and clipping ```r mpl2 <- update(mpl1, . ~ . - amd:nutrient) mpl3 <- update(mpl2, . ~ . - rack) # without rack or interaction mpl4 <- update(mpl2, . ~ . - status) # without status or interaction mpl5 <- update(mpl2, . ~ . - nutrient) # without nutrient or interaction mpl6 <- update(mpl2, . ~ . - amd) # without herbivory or interaction ``` --- # Model selection Choose the method you want to select the best model: **Method with AICc** ```r aic_tab2 <- MuMIn::model.sel(mpl2, mpl3, mpl4, mpl5, mpl6) (round(aic_table2 <- aic_tab2[ , c("AICc", "delta", "df")], digits = 2)) # AICc delta df # mpl2 5017.11 0.00 9 # mpl4 5018.28 1.17 7 # mpl6 5027.27 10.16 8 # mpl3 5071.28 54.17 8 # mpl5 5152.74 135.63 8 ``` **Method with `drop1()`** ```r dd_LRT2 <- drop1(mpl2, test = "Chisq") dd_AIC2 <- dfun(drop1(mpl2)) ``` --- # Model selection **What are our conclusions ?** <br> Both the main effects of **nutrient** and **clipping** are strong (large change in AIC of `\(135.6\)` (`mpl5`) and `\(10.2\)` (`mpl6`) if either nutrient or clipping are dropped, respectively). <br> **Our final model includes :** Fixed effects * nutrients * clipping * rack Random effects * observation-level random effect `(1|X)` * populations `(1|popu)` * genotypes `(1|gen)` --- # Challenge 8 ![:cube]() Use the `inverts` dataset (larval development times (`PLD`) of 74 marine invertebrate and vertebrate species reared at different temperatures and time), answer the following questions: - What is the effect of feeding type and climate (**fixed effects**) on `PLD`? - Does this relationship vary among taxa (**random effects**)? - What is the **best distribution family** for this count data? - Finally, once you determined the best distribution family, re-evaluate your random and fixed effects. --- # Solution ![:cube]() ```r inverts <- read.csv('data/inverts.csv', header = TRUE) head(inverts) table(inverts$temp, inverts$feeding.type) mod.glm <- glm(PLD ~ temp + feeding.type, family = poisson(), data = inverts) summary(mod.glm) drop1(mod.glm, test = "Chisq") boxplot(PLD ~ temp, data = inverts) boxplot(PLD ~ feeding.type, data = inverts) boxplot(predict(mod.glm, type = "response")~inverts$temp) modglm <- glm(PLD ~ temp + feeding.type, family = poisson(), data = inverts) ``` --- # Solution ![:cube]() ```r r2 <- ranef(mpl1, condVar = TRUE) d2 <- dotplot(r2, par.settings = pp) plot(aggregate(PLD ~ taxon, FUN = mean, data = inverts)[,2], aggregate(PLD ~ taxon, FUN = var, data = inverts)[,2], pch = 19) abline(a = 0, b = 1, lty = 2) mod.glmer <- glmer.nb(PLD ~ temp + feeding.type + (1|taxon), data = inverts) mod.glm <- glm.nb(PLD ~ temp + feeding.type, data = inverts) plot(aggregate(PLD ~ taxon, FUN = var, data = inverts)[,2], aggregate(PLD ~ taxon, FUN = mean, data = inverts)[,2]) abline(a = 0, b = 1, lty = 2 ) ``` --- exclude: true ## Group discussion [:cube]() Do you have believe that you have a question that requires the use of a mixed model? --- # Additional ressources .center[    ] **Popular libraries for (G)LMMs** * Frequentist : `nlme`, `lme4`, `glmmTMB` * Bayesian : `brms`, `rstan`, `rstanarm`, `MCMCglmm` **Papers** * [Harrison *et al.* (2018), *PeerJ*, DOI 10.7717/peerj.4794 ](http://dx.doi.org/10.7717/peerj.4794) * [Silk *et al.* (2020), *PeerJ*, DOI 10.7717/peerj.9522 ](https://doi.org/10.7717/peerj.9522) * [Schielzeth *et al.* (2020), *Methods Ecol Evol.*, DOI: 10.1111/2041-210X.13434 ]( https://doi.org/10.1111/2041-210X.13434) * [Zuur & Ieno (2016), *Methods Ecol Evol.*, DOI: 10.1111/2041-210X.12577 ]( https://doi.org/10.1111/2041-210X.12577) --- class: inverse, center, bottom # Thank you for attending this workshop!