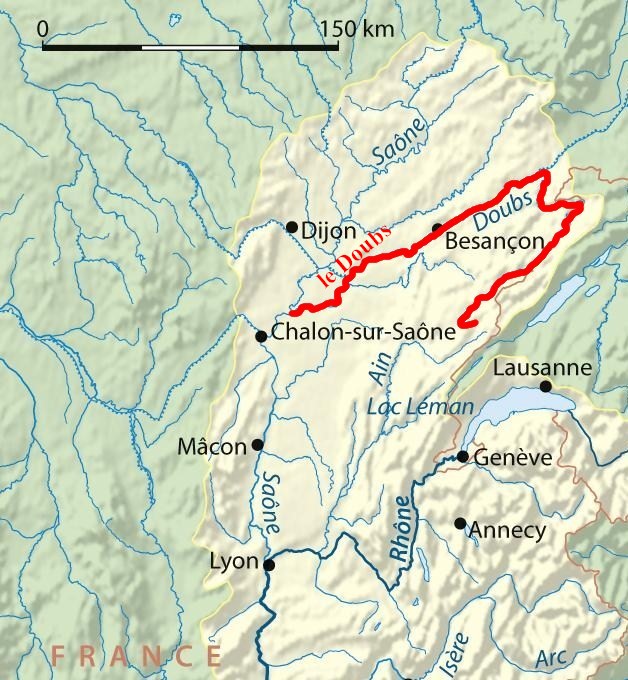

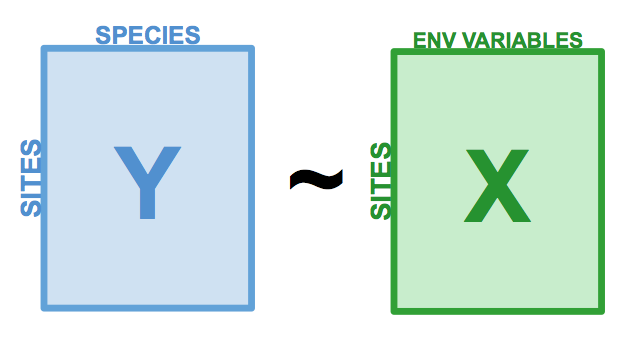

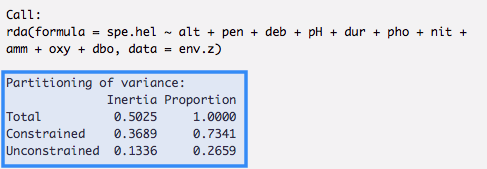

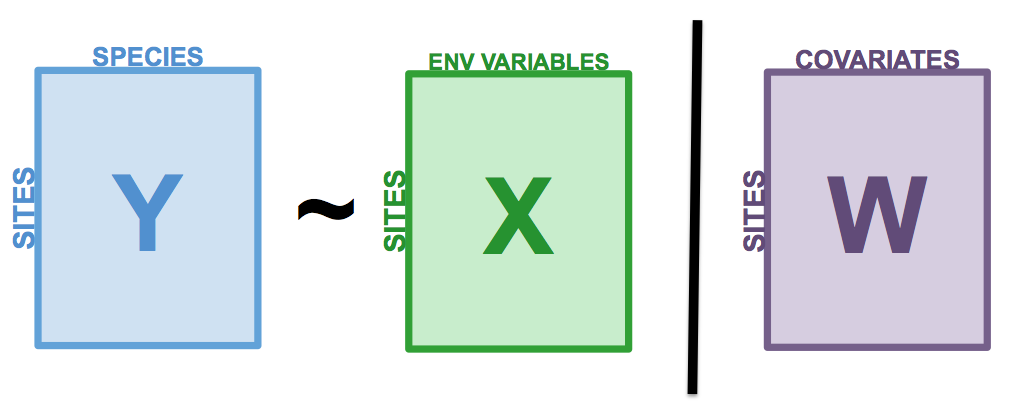

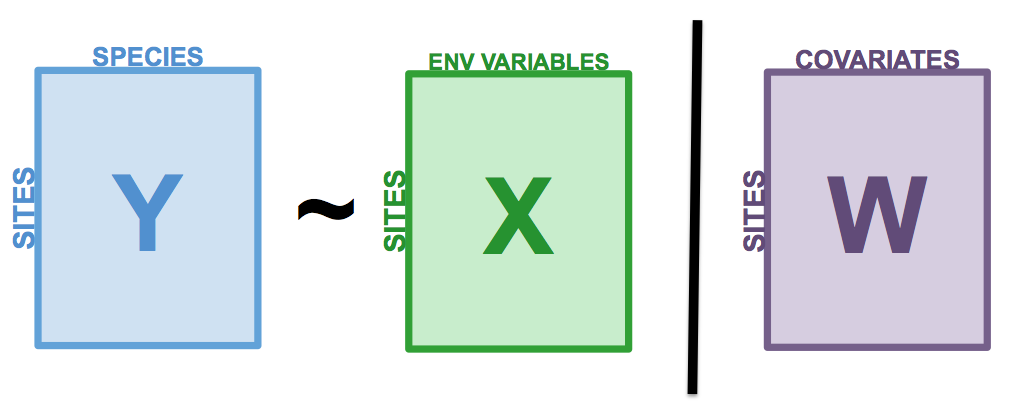

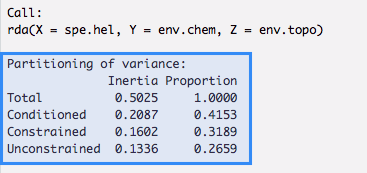

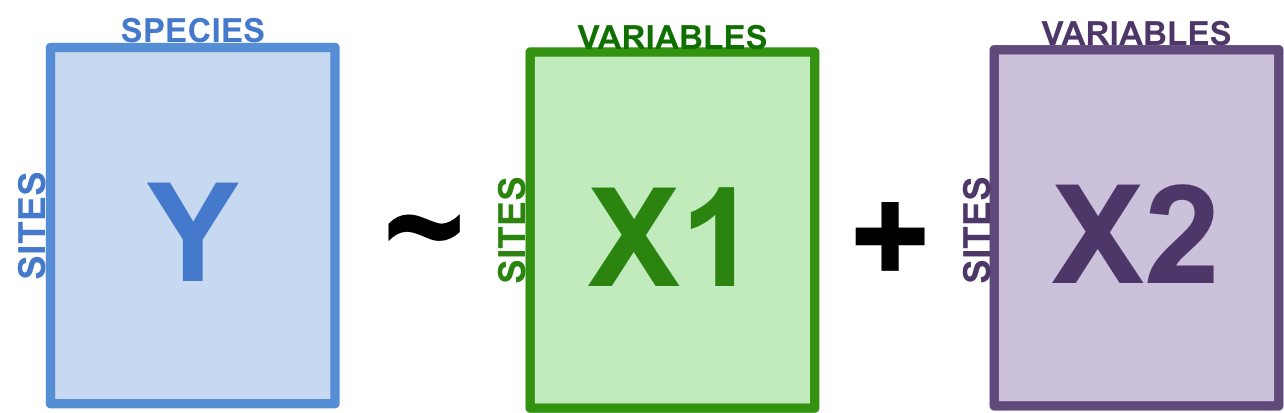

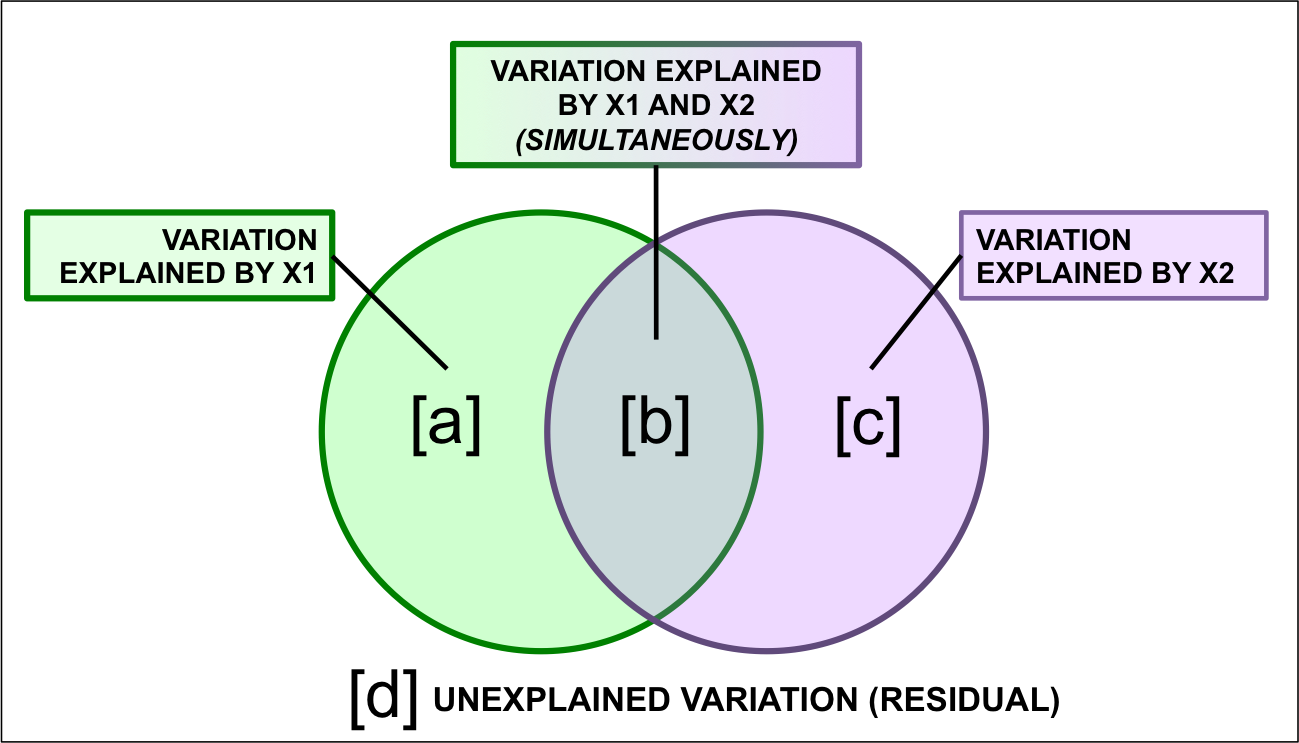

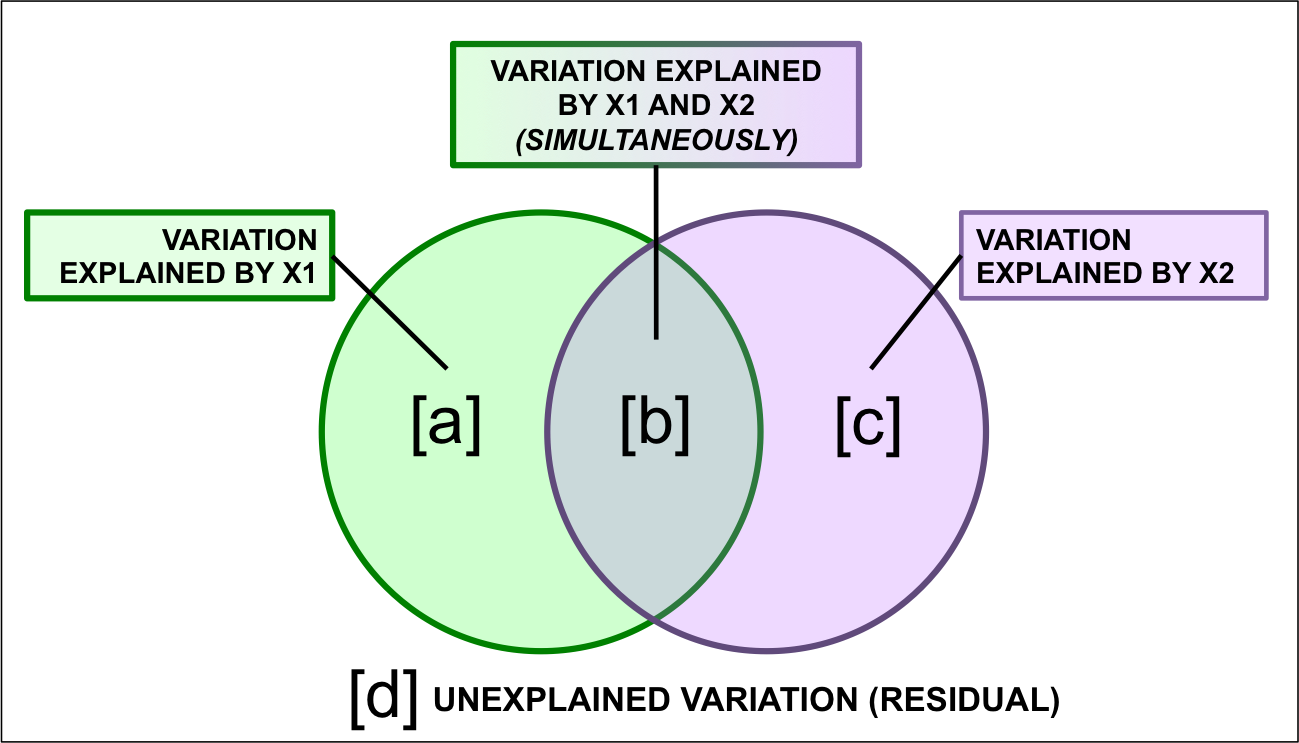

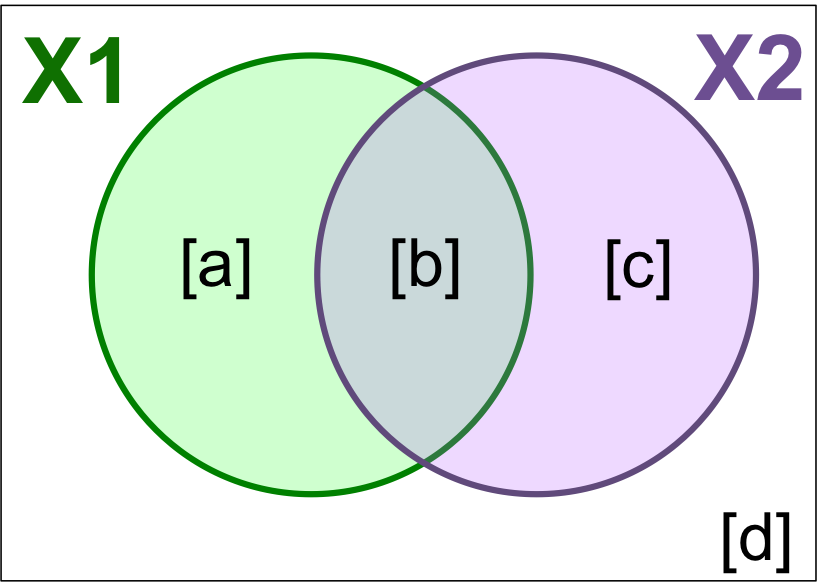

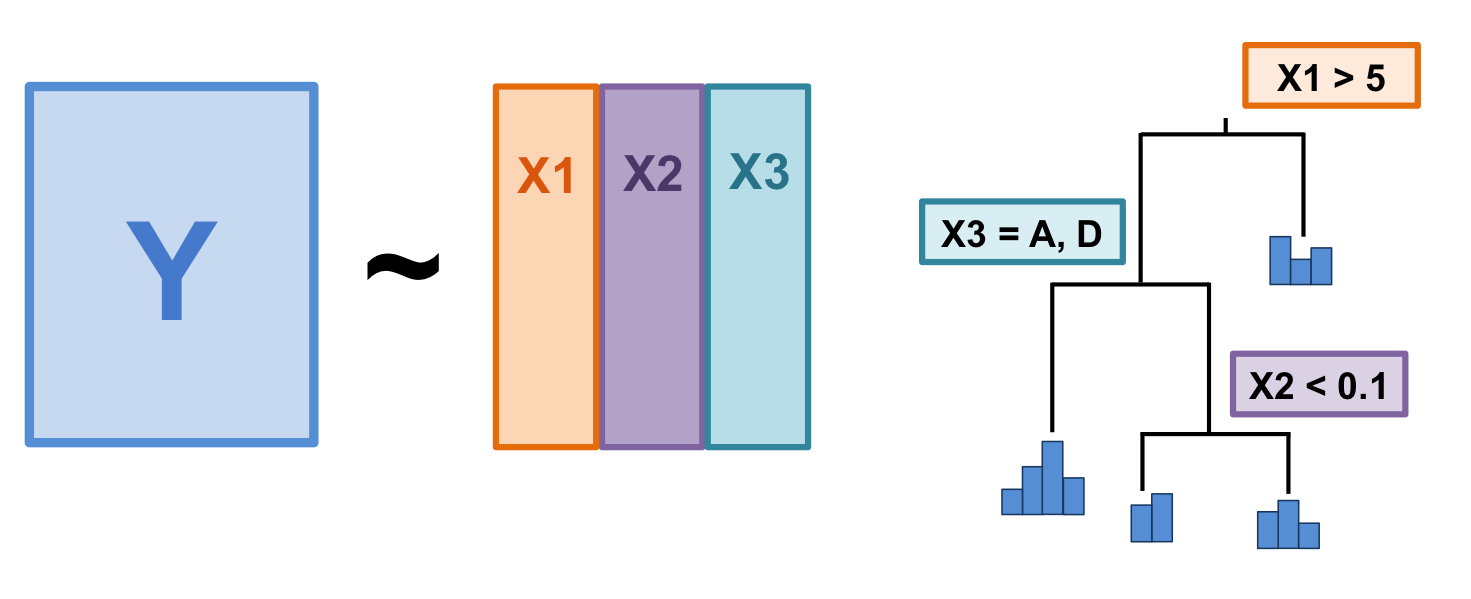

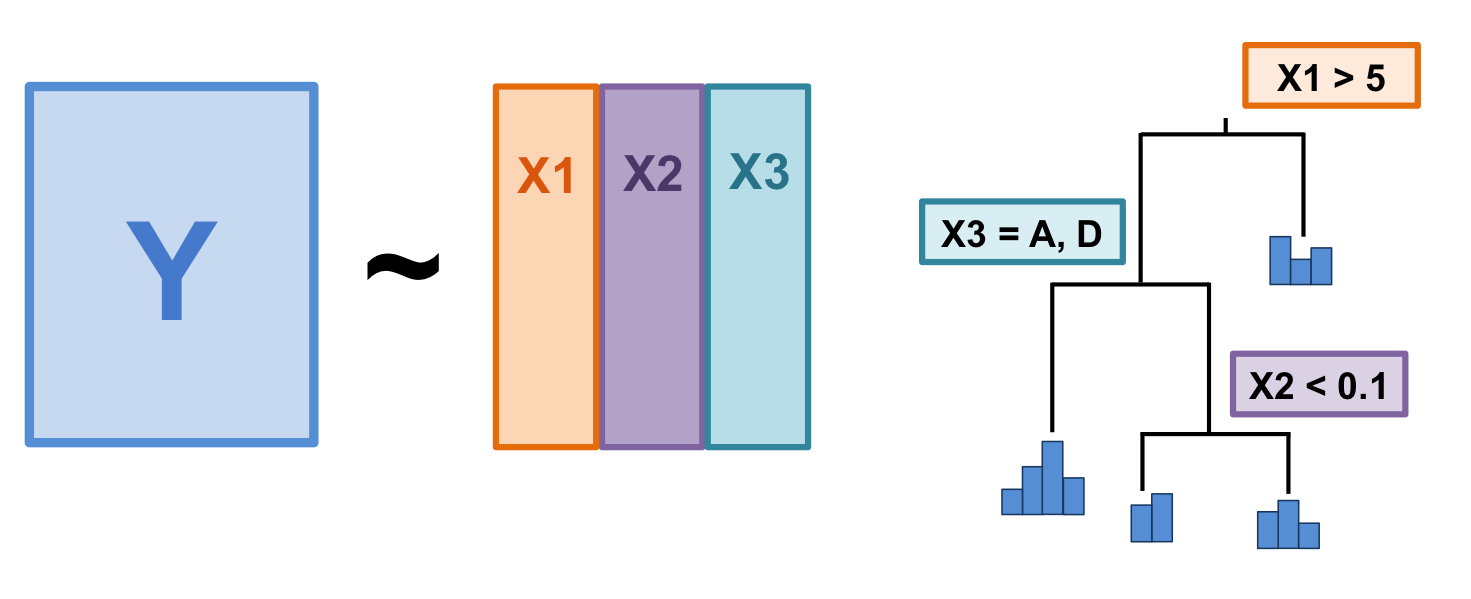

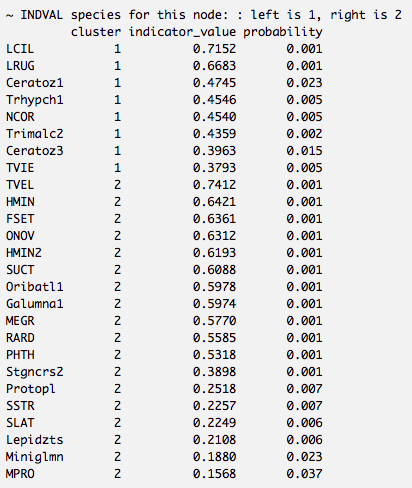

class: center, middle, inverse, title-slide # Workshop 10: Advanced multivariate analyses ## QCBS R Workshop Series ### Québec Centre for Biodiversity Science --- class: inverse, center, middle # About this workshop [](https://github.com/QCBSRworkshops/workshop10) [](https://wiki.qcbs.ca/r_workshop10) [](https://qcbsrworkshops.github.io/workshop10/workshop10-en/workshop10-en.html) [](https://qcbsrworkshops.github.io/workshop10/workshop10-en/workshop10-en.pdf) [](https://qcbsrworkshops.github.io/workshop10/workshop10-en/workshop10-en.R) --- # Required packages * [Hmisc](https://cran.r-project.org/package=Hmisc) * [labdsv](https://cran.r-project.org/package=labdsv) * [MASS](https://cran.r-project.org/package=MASS) * [vegan](https://cran.r-project.org/package=vegan) <br> ```R install.packages(c('Hmisc', 'labdsv', 'MASS', 'vegan')) ``` --- # Learning objectives ##### Use R to perform unconstrained ordinations --- class: inverse, center, middle ## Introduction --- ## Introduction This workshop is an extension of Workshop 9, which covered the basics of unconstrained analyses: * Distance metrics and transformations * Hierarchical clustering * Unconstrained ordinations (PCA, PCoA, CA, nmDS) These identify patterns in community composition data or in descriptors, .alert[**without**] exploring how environmental variables could be driving these patterns. --- # Introduction In this workshop, we will focus instead on .alert[**constrained**] analyses: * Redundancy analysis (RDA) * Partial redundancy analysis * Variation partitioning * Multivariate regression tree (MRT) * Linear discriminant analysis (LDA) These analyses allow us to .alert[**describe**] and .alert[**predict**] relationships between community composition data and environmental variables. (This means we can .alert[**test hypotheses**]!) --- # Download today's script and data All data and code can be found at [qcbs.ca/wiki/r_workshop10](http://qcbs.ca/wiki/r_workshop10) For this workshop, you will need: * R script * Data: * DoubsEnv data * DoubsSpe data * DoubsSpa data * Test data for linear discriminant analyses --- # Required packages Please make sure you have downloaded, installed, and loaded these packages: * vegan .small[(for multivariate analyses)] * rdaTest package .alert[(from wiki)] * mvpart package .alert[(from wiki)] * MVPARTwrap package .alert[(from wiki)] * labdsv .small[(to identify indicator species in the MRT)] * MASS .small[(for LDA)] --- # Follow along! As always, we recommend that you: * create your own script (or add comments to the provided script) * avoid copy-pasting or running the code directly from the script * remember to set the working directory to the folder in which your files are stored --- class: inverse, center, middle # Data exploration & preparation --- # Today's data: #### Doubs River Fish Dataset (Verneaux 1973) .pull-left[Description of fish communities along the Doubs River: * 27 species * 30 sites * 11 environmental variables  ] .pull-right[ ] --- # Load the Doubs River dataset .alert[Make sure the datasets are in your working directory!] Load the species data (*doubsspe.csv* ): ```r # Make sure the files are in your working directory! spe <- read.csv("data/doubsspe.csv", row.names = 1) spe <- spe[-8,] # remove site with no data ``` Load the environmental data (*doubsenv.csv* ): ```r env <- read.csv("data/doubsenv.csv", row.names = 1) env <- env[-8,] # remove site with no data ``` .alert[Note]: Only execute once! --- # Exploring the fish community dataset Let's briefly explore the fish community dataset: ```r names(spe) # names of objects (species) # [1] "CHA" "TRU" "VAI" "LOC" "OMB" "BLA" "HOT" "TOX" "VAN" "CHE" "BAR" "SPI" # [13] "GOU" "BRO" "PER" "BOU" "PSO" "ROT" "CAR" "TAN" "BCO" "PCH" "GRE" "GAR" # [25] "BBO" "ABL" "ANG" dim(spe) # dataset dimensions # [1] 29 27 ``` Let's take a closer look at the objects (species): ```r head(spe) # look at first 5 rows str(spe) # structure of objects in dataset summary(spe) # summary statistics for all objects (min, mean, max, etc.) ``` --- # Distribution of species frequencies Let's take a quick look at how the community is structured. ```r # Count number of species frequencies in each abundance class ab <- table(unlist(spe)) # Plot distribution of species frequencies barplot(ab, las = 1, # make axis labels perpendicular to axis xlab = "Abundance class", ylab = "Frequency", # label axes col = grey(5:0/5)) # 5-colour gradient for the bars ``` <img src="workshop10-en_files/figure-html/unnamed-chunk-5-1.png" width="432" style="display: block; margin: auto;" /> .alert[Notice]: There are many 0s! --- # Distribution of species frequencies How many 0s are in the dataset? ```r sum(spe == 0) # [1] 408 ``` <br> What proportion of the dataset does that represent? ```r sum(spe==0)/(nrow(spe)*ncol(spe)) # [1] 0.5210728 ``` --- # Transforming the community data .alert[**Over 50%**] of our dataset consists of 0s, which is common in community datasets. <br> However, we don't want these common absences to **artificially increase** the similarity between sites. * To avoid this, we can .alert[transform] the community data. <br> Let's use the .alert[Hellinger] transformation: ```r # The decostand() function in the vegan package makes this easy for us: library(vegan) spe.hel <- decostand(spe, method = "hellinger") ``` --- # Exploring the environmental dataset Let's briefly explore the environmental dataset: ```r names(env) # names of objects (environmental variables) # [1] "das" "alt" "pen" "deb" "pH" "dur" "pho" "nit" "amm" "oxy" "dbo" dim(env) # dataset dimensions # [1] 29 11 head(env) # look at first 5 rows # das alt pen deb pH dur pho nit amm oxy dbo # 1 0.3 934 48.0 0.84 7.9 45 0.01 0.20 0.00 12.2 2.7 # 2 2.2 932 3.0 1.00 8.0 40 0.02 0.20 0.10 10.3 1.9 # 3 10.2 914 3.7 1.80 8.3 52 0.05 0.22 0.05 10.5 3.5 # 4 18.5 854 3.2 2.53 8.0 72 0.10 0.21 0.00 11.0 1.3 # 5 21.5 849 2.3 2.64 8.1 84 0.38 0.52 0.20 8.0 6.2 # 6 32.4 846 3.2 2.86 7.9 60 0.20 0.15 0.00 10.2 5.3 ``` For a closer look at the objects (environmental variables): ```r str(env) # structure of objects in dataset summary(env) # summary statistics for all objects (min, mean, max, etc.) ``` --- # Collinearity ```r # We can visually look for correlations between variables: pairs(env) ``` <img src="workshop10-en_files/figure-html/unnamed-chunk-11-1.png" width="648" style="display: block; margin: auto;" /> .alert[Note:] Some variables look correlated... .small[(das vs. alt, das vs. deb, das vs. dur, das vs. nit, oxy vs. dbo, etc.)] --- # Standardizing the environmental variables You cannot compare the effects of variables with different units. <br> Before moving on to further analyses, .alert[standardizing] your environmental variables is therefore crucial. ```r # Scale and center variables env.z <- decostand(env, method = "standardize") # Variables are now centered around a mean of 0: round(apply(env.z, 2, mean), 1) # das alt pen deb pH dur pho nit amm oxy dbo # 0 0 0 0 0 0 0 0 0 0 0 # and scaled to have a standard deviation of 1 apply(env.z, 2, sd) # das alt pen deb pH dur pho nit amm oxy dbo # 1 1 1 1 1 1 1 1 1 1 1 ``` --- class: inverse, center, middle # Canonical analyses --- # Canonical analyses Canonical analyses allow us to: - identify relationships between a response matrix and explanatory matrix/matrices - test hypotheses about these relationships - make predictions --- class: inverse, center, middle # Canonical analyses ## Redundancy analysis (RDA) --- # Redundancy analysis (RDA) RDA is constrained ordination. - RDA is a direct extension of multiple regression. - RDA models the effect of an explanatory matrix on a response matrix .comment[.small[(instead of a single response variable)].] .center[ ] Variables can be quantitative, qualitative, or binary (0/1). - .alert[transform] and/or .alert[standardize] them prior to running an RDA. --- # Running an RDA in R Prepare the data ```r # We'll use our standardized environmental data # But we will remove 'das', which was correlated with many other variables: env.z <- subset(env.z, select = -das) ``` -- Run the RDA ```r # Model the effect of all environmental variables on fish community composition spe.rda <- rda(spe.hel ~ ., data = env.z) ``` -- Extract key results of the RDA ```r summary(spe.rda, display = NULL) ``` --- # RDA output in R .center[ ] * **Constrained Proportion**: variance of Y explained by X .alert[(73.41%)] * **Unconstained Proportion**: unexplained variance in Y .alert[(26.59%)] How would you report these results? * .comment[The included environmental variables explain .alert[73.41%] of the variation in fish community composition across sites.] --- # Selecting variables Using .alert[forward selection], we can select the explanatory variables that are statistically "important". <br> .comment[**Which variables significantly contribute to our model's explanatory power?**] -- ```r # Forward selection of variables: fwd.sel <- ordiR2step(rda(spe.hel ~ 1, data = env.z), # lower model limit (simple!) scope = formula(spe.rda), # upper model limit (the "full" model) direction = "forward", R2scope = TRUE, # can't surpass the "full" model's R2 pstep = 1000, trace = FALSE) # change to TRUE to see the selection process! ``` .small[Here, we are essentially adding one variable at a time, and retaining it if it significantly increases the model's adjusted R2.] --- # Selecting variables - Which variables are retained by the forward selection? ```r # Check the new model with forward-selected variables fwd.sel$call # rda(formula = spe.hel ~ alt + oxy + dbo, data = env.z) ``` -- - What is the adjusted R2 of the RDA with the selected variables? ```r # Write our new model spe.rda.signif <- rda(spe.hel ~ alt + oxy + dbo, data = env.z) # check the adjusted R2 RsquareAdj(spe.rda.signif) # $r.squared # [1] 0.5894243 # # $adj.r.squared # [1] 0.5401552 ``` --- # Significance testing Use .alert[anova.cca()] to test the significance of your RDA. ```r anova.cca(spe.rda.signif, step = 1000) # Permutation test for rda under reduced model # Permutation: free # Number of permutations: 999 # # Model: rda(formula = spe.hel ~ alt + oxy + dbo, data = env.z) # Df Variance F Pr(>F) # Model 3 0.29619 11.963 0.001 *** # Residual 25 0.20632 # --- # Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` You can also test the significance of each variable! ```r anova.cca(spe.rda.signif, step = 1000, by = "term") ``` --- # RDA plot One of the most powerful aspects of RDA is the **simultaneous visualization** of your response and explanatory variables .comment[(i.e. species and environmental variables)]. <br> -- <br> There are 2 scaling options: <br> | Type 1 | Type 2 | | ---------------------------------------------------|---------------------------------------------------| | distances among objects reflect their similarities | angles between variables reflect their correlation| --- # RDA plot: Type 1 .pull-left[ ```r ordiplot(spe.rda.signif, scaling = 1, type = "text") ``` <img src="workshop10-en_files/figure-html/unnamed-chunk-21-1.png" width="432" style="display: block; margin: auto;" /> ] .pull-right[ ######Scaling 1 shows similarities between objects in the .comment[response matrix]. .small[ * Sites (numbers) that are .alert[closer together] have more similar communities. * Species that are .alert[closer together] occupy more sites in common. ] ] --- # RDA plot: Type 2 .pull-left[ ```r ordiplot(spe.rda.signif, scaling = 2, type = "text") ``` <img src="workshop10-en_files/figure-html/unnamed-chunk-22-1.png" width="432" style="display: block; margin: auto;" /> ] .pull-right[ ######Scaling 2 shows the effects of .comment[explanatory variables]. .small[ * .alert[Longer] arrows mean this variable strongly drives the variation in the community matrix. * Arrows pointing in .alert[opposite directions] have a negative relationship * Arrows pointing in the .alert[same direction] have a positive relationship ] ] --- # Customizing RDA plots Both plot() and ordiplot() make quick and simple ordination plots, but you can customize your plots by manually setting the aesthetics of points, text, and arrows. <img src="workshop10-en_files/figure-html/unnamed-chunk-23-1.png" width="432" style="display: block; margin: auto;" /> See the wiki page for more details! --- # Challenge 1 ![:cube]() Run an RDA to model the effects of environmental variables on mite species abundances. <br> .small[ First, load the mite data: ```r # Load mite species abundance data data("mite") # Load environmental data data("mite.env") ``` Recall some useful functions: ```r decostand() rda() ordiR2step() anova.cca() ordiplot() ``` ] --- # Challenge 1: Solution Step 1: Prepare the data ```r # Hellinger transform the community data mite.spe.hel <- decostand(mite, method = "hellinger") # Standardize quantiative environmental data mite.env$SubsDens <- decostand(mite.env$SubsDens, method = "standardize") mite.env$WatrCont <- decostand(mite.env$WatrCont, method = "standardize") ``` --- # Challenge 1: Solution Step 2: Select environmental variables ```r # Initial RDA with ALL of the environmental data mite.spe.rda <- rda(mite.spe.hel ~ ., data = mite.env) # Forward selection of environmental variables fwd.sel <- ordiR2step(rda(mite.spe.hel ~ 1, data = mite.env), scope = formula(mite.spe.rda), direction = "forward", R2scope = TRUE, pstep = 1000, trace = FALSE) fwd.sel$call # rda(formula = mite.spe.hel ~ WatrCont + Shrub + Substrate + Topo, # data = mite.env) ``` --- # Challenge 1: Solution Step 3: Run RDA and check adjusted R2 ```r # Re-run the RDA with the significant variables mite.spe.rda.signif <- rda(mite.spe.hel ~ WatrCont + Shrub + Substrate + Topo + SubsDens, data = mite.env) # Find the adjusted R2 of the model with the retained env variables RsquareAdj(mite.spe.rda.signif)$adj.r.squared # [1] 0.4367038 ``` --- # Challenge 1: Solution Step 4: Test model significance ```r anova.cca(mite.spe.rda.signif, step = 1000) # Permutation test for rda under reduced model # Permutation: free # Number of permutations: 999 # # Model: rda(formula = mite.spe.hel ~ WatrCont + Shrub + Substrate + Topo + SubsDens, data = mite.env) # Df Variance F Pr(>F) # Model 11 0.20759 5.863 0.001 *** # Residual 58 0.18669 # --- # Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` The selected environmental variables significantly explain .alert[43.7% (p = 0.001)] of the variation in mite species abundances. --- # Challenge 1: Solution Step 5: Plot the RDA! .pull-left[ ```r ordiplot(mite.spe.rda.signif, scaling = 1, main = "Mite RDA - Scaling 1") ``` <img src="workshop10-en_files/figure-html/unnamed-chunk-30-1.png" width="432" style="display: block; margin: auto;" /> ] .pull-right[ ```r ordiplot(mite.spe.rda.signif, scaling = 2, main = "Mite RDA - Scaling 2") ``` <img src="workshop10-en_files/figure-html/unnamed-chunk-31-1.png" width="432" style="display: block; margin: auto;" /> ] --- class: inverse, center, middle # Canonical analyses ## Partial RDA --- # Partial RDA * Special case of RDA involving covariates * Models the linear effects of matrix X on matrix Y while controling for a matrix W of .alert[covariates]. .center[ ] --- # Applications of partial RDA * Assess effects of environmental variables on community composition while .alert[accounting for variation] that isn't the focus of the study. * .alert[Isolate] effect of one or more groups of explanatory variables .center[ ] --- # Partial RDA on Doubs River data Let's assess the effect of water chemistry on fish species abundances while controling for the effect of topography. ```r # Subset environmental data into topography variables and chemistry variables env.topo <- subset(env.z, select = c(alt, pen, deb)) env.chem <- subset(env.z, select = c(pH, dur, pho, nit, amm, oxy, dbo)) # Partial RDA spe.partial.rda <- rda(spe.hel, env.chem, env.topo) ``` -- .small[ .alert[Note]: Alternative syntax for the partial RDA: ```r spe.partial.rda <- rda(spe.hel ~ pH + dur + pho + nit + amm + oxy + dbo + Condition(alt + pen + deb), data = env.z) ``` ] --- # Partial RDA output in R ```r summary(spe.partial.rda, display = NULL) ``` .center[ ] .small[ * **Conditioned Proportion**: variance of Y explained by W .alert[(41.53%)] * **Constrained Proportion**: variance of Y explained by X .alert[(31.89%)] * **Unconstained Proportion**: unexplained variance in Y .alert[(26.59%)] How would you report these results? .comment[Water chemistry explains 31.9% of the variation in fish community composition across sites, while topography explains 41.5% of this variation.] ] --- # Significance testing First, let's extract the model's adjusted R2. ```r RsquareAdj(spe.partial.rda)$adj.r.squared # [1] 0.2413464 ``` -- Then, let's test whether the model is statistically significant. ```r anova.cca(spe.partial.rda, step = 1000) # Permutation test for rda under reduced model # Permutation: free # Number of permutations: 999 # # Model: rda(X = spe.hel, Y = env.chem, Z = env.topo) # Df Variance F Pr(>F) # Model 7 0.16024 3.0842 0.001 *** # Residual 18 0.13360 # --- # Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` --- # Plot the partial RDA .small[ ```r ordiplot(spe.partial.rda, scaling = 2, main = "Doubs River partial RDA - Scaling 2") ``` <img src="workshop10-en_files/figure-html/unnamed-chunk-37-1.png" width="432" style="display: block; margin: auto;" /> -- .alert[Note]: The topography variables (covariates) aren't plotted. Why is that?] --- # Challenge 2 ![:cube]() .small[ Run a partial RDA to model the effects of environmental variables on mite species abundances, while controling for substrate variables (SubsDens, WatrCont, and Substrate). * What is the variance explained by substrate variables? * Is the model significant? * Which axes are significant? <br> Recall some useful functions: ```r rda() summary() RsquareAdj() anova.cca() # hint: see the 'by' argument in ?anova.cca ``` ] --- # Challenge 2: Solution Our species abundance and environmental datasets have already been transformed and standardized. So, we can start with the partial RDA: ```r # Compute partial RDA mite.spe.subs <- rda(mite.spe.hel ~ Shrub + Topo + Condition(SubsDens + WatrCont + Substrate), data = mite.env) # Check summary summary(mite.spe.subs, display = NULL) ``` -- Shrub and Topo explain .alert[9.8%] of the variation in mite species abundances, while substrate covariables explain .alert[42.8%] of this variation. --- # Challenge 2: Solution * What is the variance explained by substrate variables? ```r RsquareAdj(mite.spe.subs)$adj.r.squared # [1] 0.08327533 ``` -- * Is the model significant? ```r anova.cca(mite.spe.subs, step = 1000) # Permutation test for rda under reduced model # Permutation: free # Number of permutations: 999 # # Model: rda(formula = mite.spe.hel ~ Shrub + Topo + Condition(SubsDens + WatrCont + Substrate), data = mite.env) # Df Variance F Pr(>F) # Model 3 0.038683 4.006 0.001 *** # Residual 58 0.186688 # --- # Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` --- # Challenge 2: Solution * Which axes are significant? ```r anova.cca(mite.spe.subs, step = 1000, by = "axis") # Permutation test for rda under reduced model # Forward tests for axes # Permutation: free # Number of permutations: 999 # # Model: rda(formula = mite.spe.hel ~ Shrub + Topo + Condition(SubsDens + WatrCont + Substrate), data = mite.env) # Df Variance F Pr(>F) # RDA1 1 0.027236 8.4618 0.001 *** # RDA2 1 0.008254 2.5643 0.018 * # RDA3 1 0.003193 0.9919 0.439 # Residual 58 0.186688 # --- # Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` --- class: inverse, center, middle # Canonical analyses ## Variation partitioning --- # Variation partitioning Partitions the variation of response variables among 2, 3, or 4 explanatory datasets. * e.g. large-scale and small-scale * e.g. abiotic and biotic .center[] --- # Variation partitioning .center[] --- # Variation partitioning in R .small[.alert[Note]: Make sure you've loaded the *vegan* package! ```r spe.part.all <- varpart(spe.hel, env.chem, env.topo) spe.part.all$part # access results! # No. of explanatory tables: 2 # Total variation (SS): 14.07 # Variance: 0.50251 # No. of observations: 29 # # Partition table: # Df R.squared Adj.R.squared Testable # [a+b] = X1 7 0.60579 0.47439 TRUE # [b+c] = X2 3 0.41526 0.34509 TRUE # [a+b+c] = X1+X2 10 0.73414 0.58644 TRUE # Individual fractions # [a] = X1|X2 7 0.24135 TRUE # [b] 0 0.23304 FALSE # [c] = X2|X1 3 0.11205 TRUE # [d] = Residuals 0.41356 FALSE # --- # Use function 'rda' to test significance of fractions of interest ``` ] --- # Variation partitioning plot ```r plot(spe.part.all, Xnames = c("Chem", "Topo"), # name the partitions bg = c("seagreen3", "mediumpurple"), alpha = 80, # colour the circles digits = 2, # only show 2 digits cex = 1.5) ``` <img src="workshop10-en_files/figure-html/unnamed-chunk-44-1.png" width="432" style="display: block; margin: auto;" /> --- # Significance testing .center[] * The shared fraction [b] .alert[cannot] be tested for significance. * But, we can test the significance of the remaining fractions! --- # Significance testing: X1 [a+b] .center[] .small[ ```r # [a+b] Chemistry without controlling for topography anova.cca(rda(spe.hel, env.chem)) # Permutation test for rda under reduced model # Permutation: free # Number of permutations: 999 # # Model: rda(X = spe.hel, Y = env.chem) # Df Variance F Pr(>F) # Model 7 0.30442 4.6102 0.001 *** # Residual 21 0.19809 # --- # Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` ] --- # Significance testing: X2 [b+c] .center[] .small[ ```r # [b+c] Topography without controlling for chemistry anova.cca(rda(spe.hel, env.topo)) # Permutation test for rda under reduced model # Permutation: free # Number of permutations: 999 # # Model: rda(X = spe.hel, Y = env.topo) # Df Variance F Pr(>F) # Model 3 0.20867 5.918 0.001 *** # Residual 25 0.29384 # --- # Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` ] --- # Significance testing: Individual fractions .center[] .small[ ```r # [a] Chemistry alone anova.cca(rda(spe.hel, env.chem, env.topo)) # Permutation test for rda under reduced model # Permutation: free # Number of permutations: 999 # # Model: rda(X = spe.hel, Y = env.chem, Z = env.topo) # Df Variance F Pr(>F) # Model 7 0.16024 3.0842 0.001 *** # Residual 18 0.13360 # --- # Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` .alert[Note:] Recognize this? It's a partial RDA! ] --- # Significance testing: Individual fractions .center[] .small[ ```r # [c] Topography alone anova.cca(rda(spe.hel, env.topo, env.chem)) # Permutation test for rda under reduced model # Permutation: free # Number of permutations: 999 # # Model: rda(X = spe.hel, Y = env.topo, Z = env.chem) # Df Variance F Pr(>F) # Model 3 0.064495 2.8965 0.001 *** # Residual 18 0.133599 # --- # Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` ] --- # Challenge 3 ![:cube]() .small[ Partition the variation in the mite species data according to substrate variables (SubsDens, WatrCont) and significant spatial variables. * What proportion of the variation is explained by substrate variables? By space? * Which individual fractions are significant? * Plot your results! Load the spatial variables: ```r data("mite.pcnm") ``` Recall some useful functions: ```r ordiR2step() varpart() anova.cca(rda()) plot() ``` ] --- # Challenge 3: Solution Step 1: Forward selection of significant spatial variables ```r # Write full RDA model with all variables full.spat <- rda(mite.spe.hel ~ ., data = mite.pcnm) # Forward selection of spatial variables spat.sel <- ordiR2step(rda(mite.spe.hel ~ 1, data = mite.pcnm), scope = formula(full.spat), R2scope = RsquareAdj(full.spat)$adj.r.squared, direction = "forward", trace = FALSE) spat.sel$call # rda(formula = mite.spe.hel ~ V2 + V3 + V8 + V1 + V6 + V4 + V9 + # V16 + V7 + V20, data = mite.pcnm) ``` --- # Challenge 3: Solution Step 2: Create variable groups ```r # Subset environmental data to retain only substrate variables mite.subs <- subset(mite.env, select = c(SubsDens, WatrCont)) # Subset to keep only selected spatial variables mite.spat <- subset(mite.pcnm, select = names(spat.sel$terminfo$ordered)) # a faster way to access the selected variables! ``` --- # Challenge 3: Solution Step 3: Variation partitioning ```r mite.part <- varpart(mite.spe.hel, mite.subs, mite.spat) mite.part$part$indfract # access results! # Df R.squared Adj.R.squared Testable # [a] = X1|X2 2 NA 0.05901929 TRUE # [b] 0 NA 0.24765221 FALSE # [c] = X2|X1 10 NA 0.19415929 TRUE # [d] = Residuals NA NA 0.49916921 FALSE ``` * What proportion of the variation is explained by substrate variables? * .alert[5.9%] * What proportion of the variation is explained by spatial variables? * .alert[19.4%] --- # Challenge 3: Solution Step 4: Which individual fractions are significant? ```r # [a]: Substrate only anova.cca(rda(mite.spe.hel, mite.subs, mite.spat)) # p = 0.001 *** # [c]: Space only anova.cca(rda(mite.spe.hel, mite.spat, mite.subs)) # p = 0.001 *** ``` -- .comment[So, what can you say about the effects of substrate and space on mite species abundances?] --- # Challenge 3: Solution Step 5: Plot the variation partitioning results ```r plot(mite.part, digits = 2, cex = 1.5, bg = c("pink", "skyblue"), alpha = 90) # add colour! ``` <img src="workshop10-en_files/figure-html/unnamed-chunk-55-1.png" width="432" style="display: block; margin: auto;" /> --- class: inverse, center, middle # Multivariate regression tree (MRT) --- # Multivariate regression tree (MRT) .center[] MRT is a constrained clustering technique. * Splits a response matrix (Y) into clusters based on thresholds of explanatory variables (X) --- # Multivariate regression tree (MRT) .center[] An MRT consists of: * .alert[Branch]: each group formed by a split * .alert[Node]: splitting point (threshold value of an explanatory variable) * .alert[Leaf]: terminal group of sites --- # Multivariate regression tree (MRT) MRT has many advantages: * Doesn't assume a linear relationship between Y and X matrices * Results are easy to visualize (it's a tree!) * Clearly identifies importance of explanatory variables * Robust (missing values, collinearity) * Can handle raw explanatory variables --- # MRT: Tree selection When you run an MRT, 2 things happen: 1. Constrained partitioning of the data 2. .alert[Cross-validation] to identify best predictive tree <br> The "best" tree varies depending on your study goals. Usually you want a tree: * that is .comment[parsimonious] * but still has an .comment[informative] number of groups * Basically: what makes sense for your question? --- # MRT in R In what follows we will be using .alert[`mvpart` that is currently archived on CRAN]. We install it from GitHub using the package remotes: ```r remotes::install_github("cran/mvpart") library(mvpart) ``` --- # MRT in R ```r # First, remove the "distance from source" variable env <- subset(env, select = -das) # Create multivariate regression tree # library(mvpart) doubs.mrt <- mvpart(as.matrix(spe.hel) ~ ., data = env, xv = "pick", # interactively select best tree xval = nrow(spe.hel), # number of cross-validations xvmult = 100, # number of multiple cross-validations which = 4, # plot both node labels legend = FALSE, margin = 0.01, cp = 0) ``` --- # MRT in R: Tree selection <img src="workshop10-en_files/figure-html/unnamed-chunk-57-1.png" width="396" style="display: block; margin: auto;" /> .small[ * Green points: Relative error * Blue points: Cross-validated relative error (CVRE) * Red dot: Which tree has the smallest CVRE * Orange dot: Smallest tree within one standard error of the CVRE * Lime green bars: # of times each tree size was chosen ] --- # MRT in R: Tree selection .small[ * Pick the "best" tree by clicking on a blue dot that corresponds to your chosen tree size! * We don't have an *a priori* expectation about how to partition this data, so we'll select the .comment[smallest tree within 1 standard error of the overall best-fit tree] (i.e. the orange dot). ] --- # MRT in R: Tree plot <img src="workshop10-en_files/figure-html/unnamed-chunk-59-1.png" width="396" style="display: block; margin: auto;" /> .small[ * The species matrix is partitioned according to an .alert[altitude threshold (361.5)] * Barplots: species abundances in the sites included in each group * Residual error = 0.563, which means the model's R2 is .alert[43.7%] ] --- # MRT in R: Comparing trees We can also compare solutions, to help us chose the best tree. For example, let's look at a 10-group solution! <img src="workshop10-en_files/figure-html/unnamed-chunk-60-1.png" width="864" style="display: block; margin: auto;" /> .small[ * This is much .alert[harder to interpret] (so many groups!) * Higher explanatory power, .alert[BUT] predictive power (CV Error = 0.671) is basically the same as the previous solution (CV Error = 0.673). ] --- # MRT in R: Comparing trees Let's look at a solution with fewer (4) groups! .tiny[ <img src="workshop10-en_files/figure-html/unnamed-chunk-61-1.png" width="576" style="display: block; margin: auto;" /> ] .small[ * This is easier to interpret! * Higher explanatory power .alert[(lower Error)] than our original solution * .alert[Higher predictive power] than both previous solutions (CV Error) ] --- # MRT in R: Complexity parameter To find out how much variance is explained by each node in the tree, we need to look at the complexity parameter (CP). ```r doubs.mrt$cptable # CP nsplit rel error xerror xstd # 1 0.4369561 0 1.0000000 1.0768059 0.07491763 # 2 0.1044982 1 0.5630439 0.6760624 0.09510184 ``` * CP @ nsplit 0 = R2 of the whole tree * CP at subsequent nodes = R2 of each node (see full summary to see which node corresponds to which variable threshold) --- # MRT in R: Summary output We can access more information about the tree (such as which node corresponds to which variable threshold): .tiny[ ```r summary(doubs.mrt) # Call: # mvpart(form = as.matrix(spe.hel) ~ ., data = env, xv = "1se", # xval = nrow(spe.hel), xvmult = 100, xvse = 1, margin = 0.01, # which = 4, legend = FALSE, prn = FALSE, cp = 0) # n= 29 # # CP nsplit rel error xerror xstd # 1 0.4369561 0 1.0000000 1.0768059 0.07491763 # 2 0.1044982 1 0.5630439 0.6760624 0.09510184 # # Node number 1: 29 observations, complexity param=0.4369561 # Means=0.07299,0.2472,0.2581,0.2721,0.07133,0.06813,0.06897,0.07664,0.1488,0.2331,0.113,0.07879,0.1724,0.1366,0.1103,0.08216,0.08751,0.07113,0.07312,0.1345,0.06307,0.04423,0.1015,0.1862,0.07713,0.1623,0.07283, Summed MSE=0.4851823 # left son=2 (15 obs) right son=3 (14 obs) # Primary splits: # das < 192.2 to the left, improve=0.4369561, (0 missing) # alt < 361.5 to the right, improve=0.4369561, (0 missing) # deb < 23.65 to the left, improve=0.4369561, (0 missing) # amm < 0.06 to the left, improve=0.3529830, (0 missing) # nit < 1.415 to the left, improve=0.3513335, (0 missing) # # Node number 2: 15 observations # Means=0.1208,0.4463,0.4194,0.4035,0.1104,0.09023,0,0.02108,0.1256,0.2164,0.04392,0.01054,0.107,0.09779,0.06853,0,0.01054,0.01617,0.01054,0.09489,0,0,0,0.08629,0,0,0, Summed MSE=0.3194207 # # Node number 3: 14 observations # Means=0.02179,0.03391,0.08514,0.1313,0.02945,0.04444,0.1429,0.1362,0.1736,0.2509,0.1871,0.1519,0.2425,0.1781,0.1551,0.1702,0.17,0.13,0.1402,0.177,0.1306,0.09163,0.2103,0.2932,0.1598,0.3362,0.1509, Summed MSE=0.2236343 ``` ] --- # MRT in R: Discriminant species You might also want to know which species are contributing most to the explained variance at each split (i.e. .alert[discriminant species]), or which sites are included within each leaf (group). <br> To do this, we have the .comment[MVPARTwrap] package. .alert[MVPARTwrap is archived, we use the package remotes to install it from GitHub:] ```r remotes::install_github("cran/MVPARTwrap") library(MVPARTwrap) ``` --- # MRT in R: Discriminant species ```r # Generate a nicer and more informative output doubs.mrt.wrap <- MRT(doubs.mrt, percent = 10, species = colnames(spe.hel)) # Access the full output: summary(doubs.mrt.wrap) ``` --- # MRT in R: Discriminant species To see each species' contribution to explained variance at each node: .small[ ```r summary(doubs.mrt.wrap) # Length Class Mode # nodes 1 -none- numeric # pourct 27 -none- numeric # R2 27 -none- numeric # obj 13 rpart list # percent 1 -none- numeric # MOYs 54 -none- numeric # RWHERE 1 -none- list # LWHERE 1 -none- list # TABLE1 84 -none- numeric # LABELS 1 -none- logical # mat_labels 3 -none- character ``` ] --- # MRT in R: Indicator species You might also be interested in finding out which species are significant .alert[indicator species] for each grouping of sites. .xsmall[ ```r library(labdsv) # Calculate indicator values (indval) for each species doubs.mrt.indval <- indval(spe.hel, doubs.mrt$where) # Extract the significant indicator species (and which node they represent) doubs.mrt.indval$maxcls[which(doubs.mrt.indval$pval <= 0.05)] # TRU VAI LOC HOT TOX BAR SPI GOU BRO PER BOU PSO ROT CAR BCO PCH GRE GAR BBO ABL # 1 1 1 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 # ANG # 2 # Extract their indicator values doubs.mrt.indval$indcls[which(doubs.mrt.indval$pval <= 0.05)] # TRU VAI LOC HOT TOX BAR SPI GOU # 0.8674301 0.7758443 0.7042392 0.8571429 0.6185282 0.6363569 0.7347359 0.6442950 # BRO PER BOU PSO ROT CAR BCO PCH # 0.5533235 0.5449488 0.7857143 0.8070918 0.6352865 0.7307582 0.6428571 0.5000000 # GRE GAR BBO ABL ANG # 0.8571429 0.7726181 0.7142857 1.0000000 0.7857143 ``` ] TRU has the highest indicator value (0.867) overall, and is an indicator species for the first (alt >= 361.5) leaf of the tree. --- # Challenge 4 ![:cube]() .small[ Create a multivariate regression tree for the mite data. * Select the smallest tree within 1 SE of the CVRE. * What is the proportion of variance (R2) explained by this tree? * How many leaves does it have? * What are the top 3 discriminant species? ] <br> .small[ Remember to load the mite data: ```r data("mite") data("mite.env") ``` Recall some useful functions: ```r ?mvpart() # hint: pay attention to the 'xv' argument! ?MRT() summary() ``` ] --- # Challenge 4: Solution Step 1: Create the multivariate regression tree ```r mite.mrt <- mvpart(as.matrix(mite.spe.hel) ~ ., data = mite.env, xv = "1se", # choose smallest tree within 1 SE xval = nrow(mite.spe.hel), xvmult = 100, which = 4, legend = FALSE, margin = 0.01, cp = 0, prn = FALSE) ``` <img src="workshop10-en_files/figure-html/unnamed-chunk-68-1.png" width="324" style="display: block; margin: auto;" /> --- # Challenge 4: Solution <img src="workshop10-en_files/figure-html/unnamed-chunk-69-1.png" width="360" style="display: block; margin: auto;" /> .small[ * What is the proportion of variance (R2) explained by this tree? * 1 - Error = 0.252, so the tree explains .alert[25.2%] of the variance in the species matrix. * How many leaves does it have? * 2 leaves ] --- # Challenge 4: Solution What are the top 3 discriminant species .alert[for node 1]? ```r # Generate nicer MRT output mite.mrt.wrap <- MRT(mite.mrt, percent = 10, species = colnames(mite.spe.hel)) # Look at discriminant species table from MRT output summary(mite.mrt.wrap) ``` --- # Challenge 4: Solution What are the top 3 discriminant species .alert[for node 1]? * LCIL, LRUG, Ceratoz1 .center[] --- class: inverse, center, middle # Linear discriminant analysis (LDA) --- # Linear discriminant analysis (LDA) * Determine how well your descriptor variables explain an *a priori* grouping of your response variable * Make predictions about how to classify new data * e.g. classifying whether a fish comes from a lake or ocean population, based on morphology .center[] --- # LDA in R: Doubs fish dataset We know that environmental variables generally change with latitude. <br> -- .comment[If we classify our Doubs River sites according to latitude, how well do environmental variables explain these groupings?] * We can use an LDA to answer this question. --- # LDA in R: Doubs fish dataset Let's begin by loading spatial coordinates for the Doubs sites: ```r # load spatial data for Doubs sites spa <- read.csv("data/doubsspa.csv", row.names = 1) spa$site <- 1:nrow(spa) # add site numbers spa <- spa[-8,] # remove site 8 ``` -- We can then assign sites to 3 latitude groups: ```r # group sites based on latitude spa$group <- NA # create "group" column spa$group[which(spa$y < 82)] <- 1 spa$group[which(spa$y > 82 & spa$y < 156)] <- 2 spa$group[which(spa$y > 156)] <- 3 ``` --- # LDA in R: Latitude groups Let's quickly plot the latitude groupings to see if they make sense: ```r plot(spa$x, spa$y, col = spa$group, pch = 16, cex = 1.5) ``` <img src="workshop10-en_files/figure-html/unnamed-chunk-73-1.png" width="396" style="display: block; margin: auto;" /> --- # LDA in R .alert[Note]: Usually we would check the multivariate homogeneity of within-group variances before proceeding (see Borcard et al. 2011). For the purposes of this workshop, we will move straight to doing the LDA: ```r # load required library library(MASS) # run the LDA grouping sites into latitude groups based on env data LDA <- lda(env, spa$group) ``` --- # LDA in R: Grouping accuracy .small[We can then determine how sites were grouped, and whether this grouping is accurate. ```r # Classification of the objects based on the LDA spe.class <- predict(LDA)$class # Posterior probabilities that the objects belong to those groups spe.post <- predict(LDA)$posterior # Table of prior vs. predicted classifications (spe.table <- table(spa$group, spe.class)) # spe.class # 1 2 3 # 1 7 0 0 # 2 0 6 0 # 3 0 0 16 # Proportion of corrected classification diag(prop.table(spe.table, 1)) # 1 2 3 # 1 1 1 ``` All sites were correctly classified into the latitude groups based on environmental variables.] --- # LDA in R: Predictions We can now use this relationship to .comment[classify new sites into latitude groups]. -- Let's .alert[predict the grouping] of 5 new sites using our LDA results: .small[ ```r # Load the new site data classify.me <- read.csv("data/classifyme.csv", header = TRUE) # classify.me <- classify.me[,-1] # remove das variable # Predict grouping of new sites predict.group <- predict(LDA, newdata = classify.me) # View site classification predict.group$class # [1] 1 1 1 3 3 # Levels: 1 2 3 ``` ] --- # Challenge 5 ![:cube]() .small[Create 4 latitude groups in the .comment[mite.xy] dataset. Then, run an LDA to classify mite sites into latitude groupings based on environmental variables (.comment[SubsDens] and .comment[WatrCont]). * What proportion of sites was correctly classified in group1? in group2? <br> Load .comment[mite.xy] data: ```r data(mite.xy) ``` Recall some useful functions: ```r lda() predict() table() diag() ``` ] --- # Challenge 5: Solution Step 1: Create 4 latitude groups .small[ ```r # assign numbers to sites mite.xy$site <- 1:nrow(mite.xy) # find latitudinal range for each group (max(mite.xy[,2])-min(mite.xy[,2]))/4 # [1] 2.4 # group sites into 4 latitude groups # group sites based on latitude mite.xy$group <- NA # create "group" column mite.xy$group[which(mite.xy$y < 2.5)] <- 1 mite.xy$group[which(mite.xy$y >= 2.5 & mite.xy$y < 4.9)] <- 2 mite.xy$group[which(mite.xy$y >= 4.9 & mite.xy$y < 7.3)] <- 3 mite.xy$group[which(mite.xy$y >= 7.3)] <- 4 ``` ] Step 2: Run the LDA .small[ ```r LDA.mite <- lda(mite.env[,1:2], mite.xy$group) ``` ] --- # Challenge 5: Solution Step 3: Check whether the groupings are correct .small[ ```r # classification of the objects based on LDA mite.class <- predict(LDA.mite)$class # table of prior versus predicted classifications (mite.table <- table(mite.xy$group, mite.class)) # mite.class # 1 2 3 4 # 1 9 4 2 0 # 2 2 11 4 0 # 3 1 2 14 2 # 4 0 0 3 16 # proportion of correct classification diag(prop.table(mite.table, 1)) # 1 2 3 4 # 0.6000000 0.6470588 0.7368421 0.8421053 ``` What proportion of sites was correctly classified in group1? in group2? * .alert[60%] were correctly classified into group1, and .alert[64.7%] were classified into group2.] --- class: inverse, center, bottom # Thank you for attending this workshop!