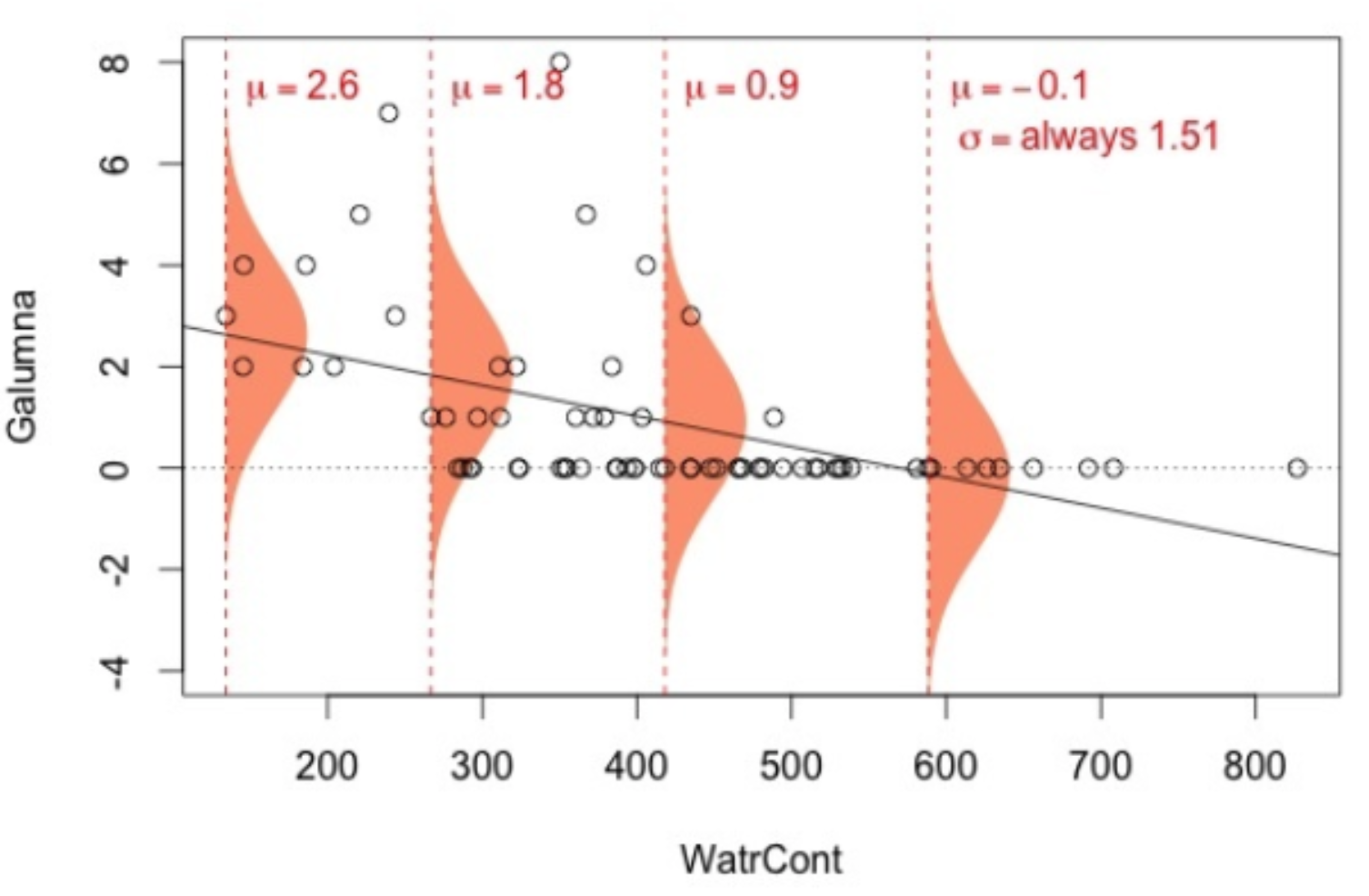

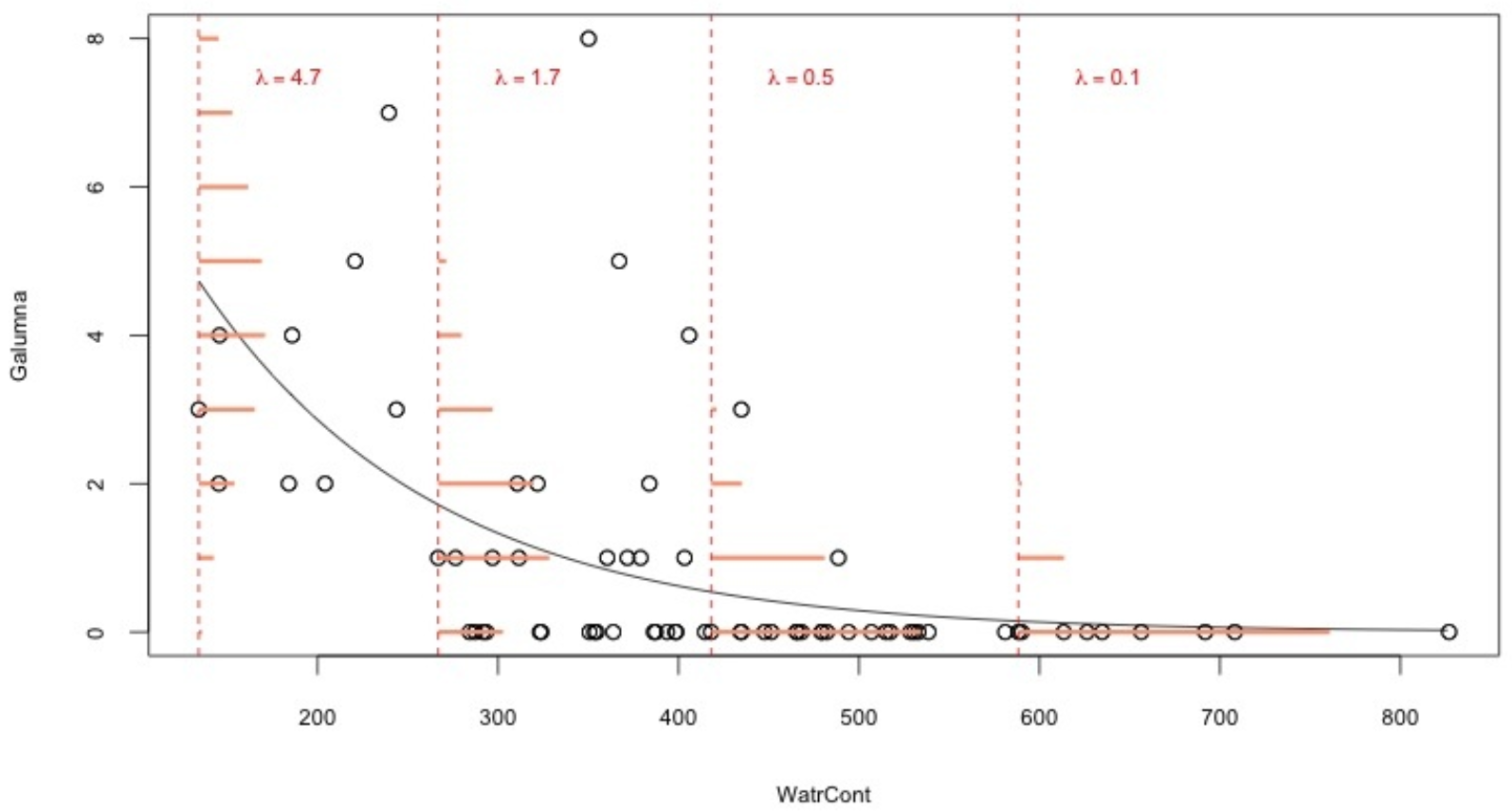

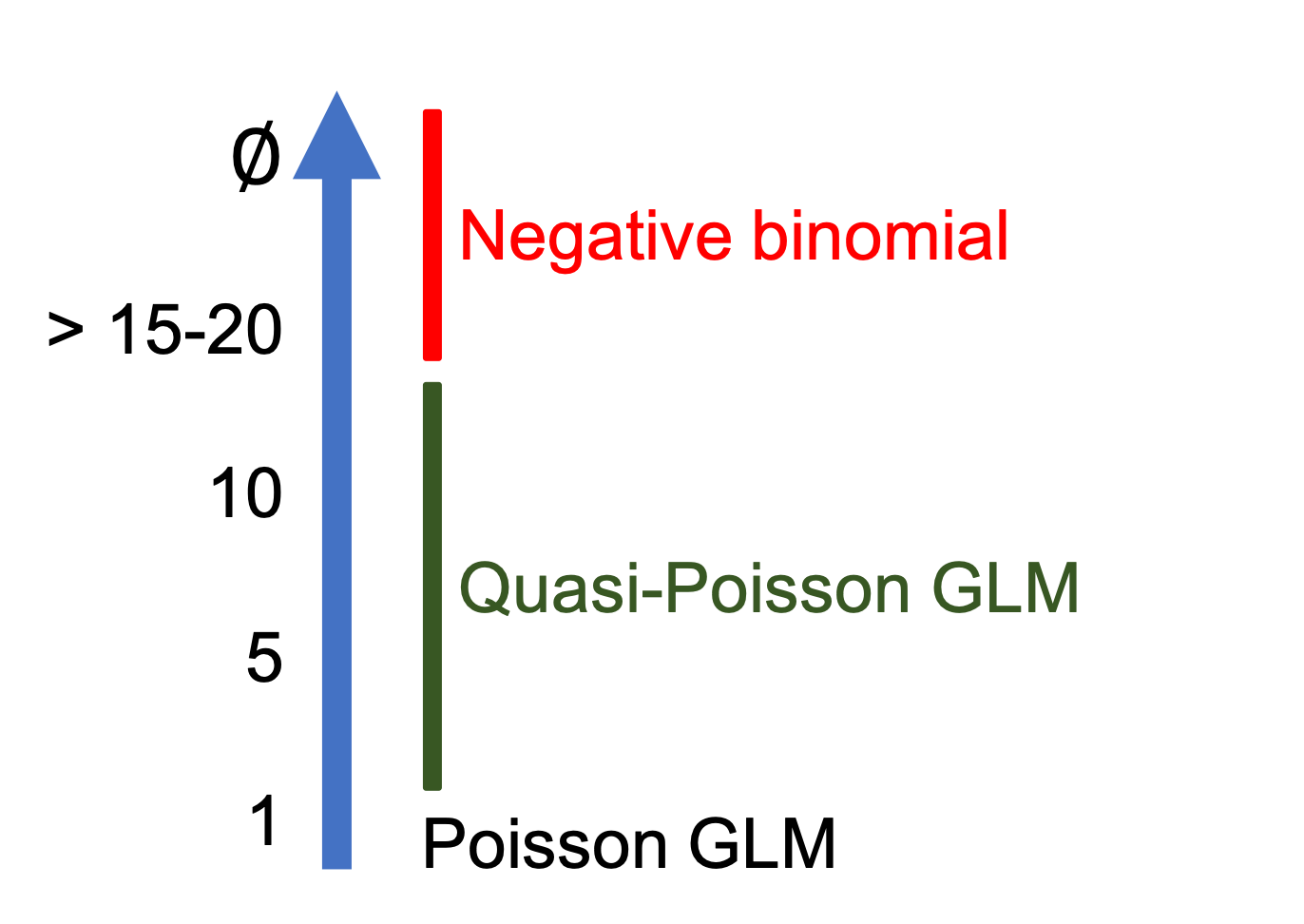

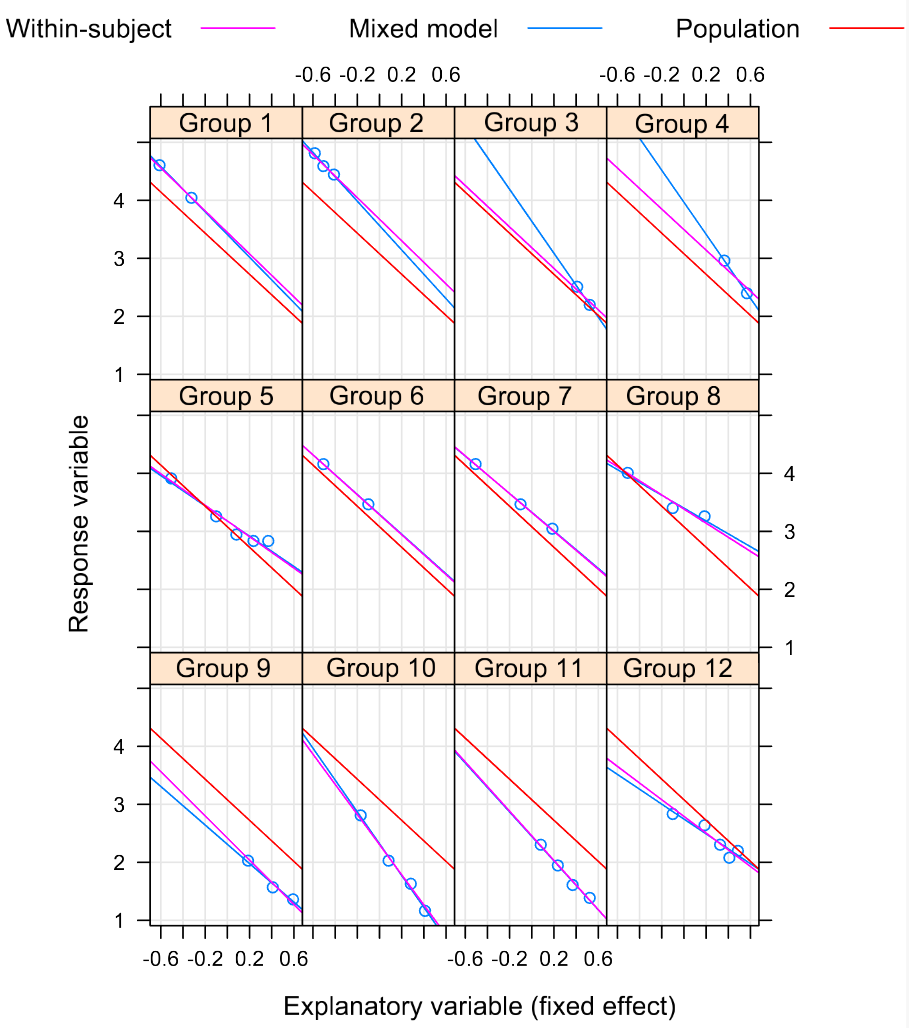

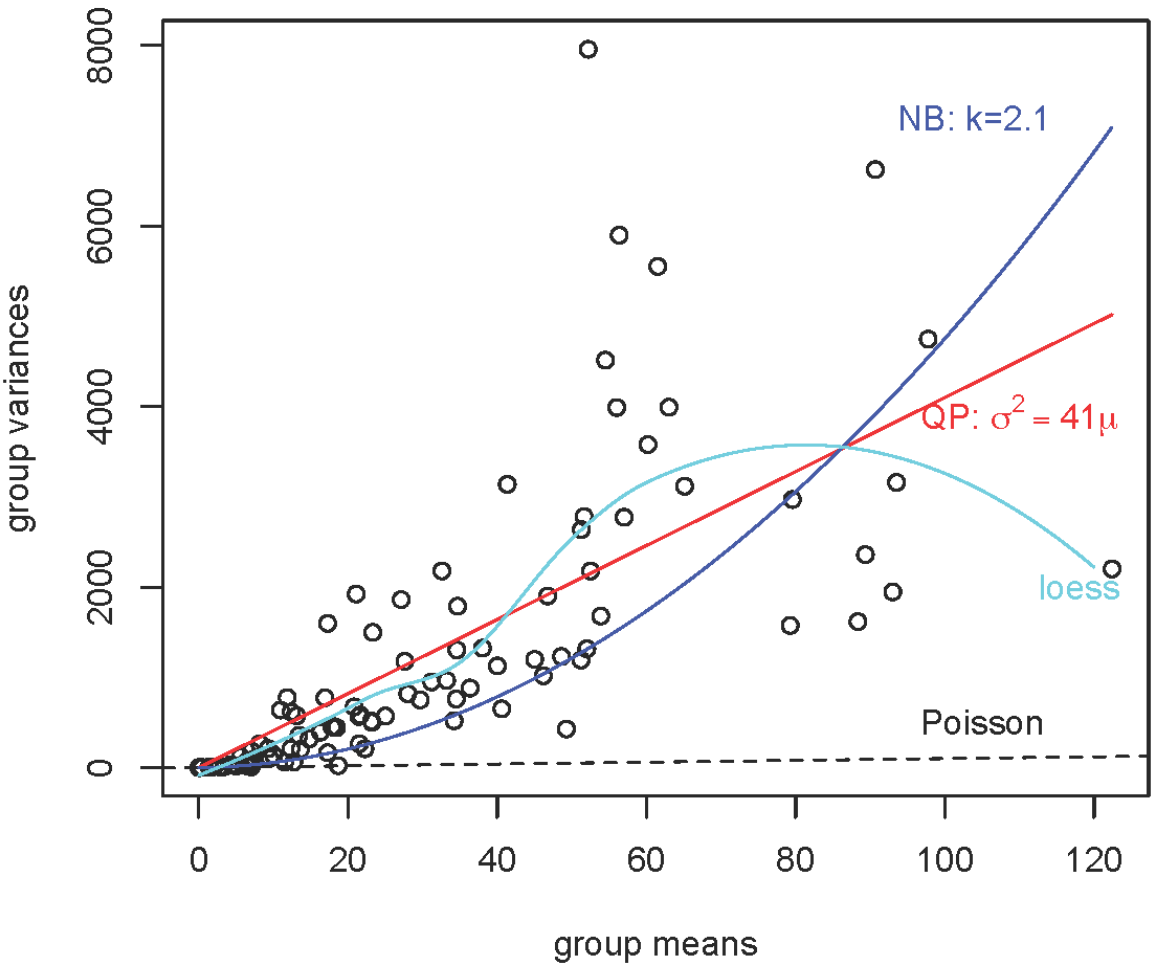

class: center, middle, inverse, title-slide # Workshop 7: Generalized linear (mixed) models ## QCBS R Workshop Series ### Québec Centre for Biodiversity Science --- ## Outline 1. Why be normal? .small[(*Your data is ok; it's the model that's wrong*)] 2. GLM with binary data 3. GLM with count data 4. GLMMs --- class: inverse, center, middle # Why be normal? ## Your data is ok; ## it's the model that's wrong --- ## Limitations of linear (mixed) models Load dataset and fit a linear model (`lm()`): ```r # make sure you're in the right working directory mites <- read.csv('mites.csv') head(mites) str(mites) ``` The dataset that you just loaded is a subset of the 'Oribatid mite dataset' .small[ > 70 moss and mite samples > 5 environmental measurements and abundance of the mite *Galumna sp.* ] **Goal**: Model the abundance (`abund`), occurrence (`pa`), and proportion (`prop`) of Galumna as a function of the 5 environmental variables. --- ## Exploring relationships Can we see any relationship(s) between Galumna and the 5 environmental variables? --- ## Exploring relationships .small[Can we see any relationship(s) between Galumna and the 5 environmental variables?] .pull-left2[ ```r plot(mites) ``` <img src="workshop07-en_files/figure-html/unnamed-chunk-4-1.png" width="432" style="display: block; margin: auto;" /> ] .pull-right2[ <br><br><br><br><br> `Galumna` vs `WatrCont`?! ] --- ## Exploring relationships A negative relationship between `Galumna` and water `content`? ```r par(mfrow = c(1, 3), cex = 1.4) plot(Galumna ~ WatrCont, data = mites, xlab = 'Water content', ylab='Abundance') boxplot(WatrCont ~ pa, data = mites, xlab='Presence/Absence', ylab = 'Water content') plot(prop ~ WatrCont, data = mites, xlab = 'Water content', ylab='Proportion') ``` <img src="workshop07-en_files/figure-html/unnamed-chunk-5-1.png" width="864" style="display: block; margin: auto;" /> --- ## Testing linearity Fit linear models to test whether `abund`, `pa`, and/or `prop` varies as a function of water content. -- ```r lm.abund <- lm(Galumna ~ WatrCont, data = mites) ## summary(lm.abund) lm.pa <- lm(pa ~ WatrCont, data = mites) ## summary(lm.pa) lm.prop <- lm(prop ~ WatrCont, data = mites) ## summary(lm.prop) ``` -- .pull-left[ ```r summary(lm.abund)$coefficients[, 4] # (Intercept) WatrCont # 3.981563e-08 1.206117e-05 summary(lm.abund)$coefficients[, 4] # (Intercept) WatrCont # 3.981563e-08 1.206117e-05 summary(lm.abund)$coefficients[, 4] # (Intercept) WatrCont # 3.981563e-08 1.206117e-05 ``` ] .pull-right[ Significant relationship in all models! .alert[But...] ] --- ## Testing linearity Significant relationship in all models! .alert[Wait a minute...] .pull-left[ ```r plot(Galumna ~ WatrCont, data = mites) abline(lm.abund) ``` <img src="workshop07-en_files/figure-html/unnamed-chunk-8-1.png" width="432" style="display: block; margin: auto;" /> ] .pull-right[ ```r par(mfrow = c(2, 2), cex = 1.4) plot(lm.abund) ``` <img src="workshop07-en_files/figure-html/unnamed-chunk-9-1.png" width="432" style="display: block; margin: auto;" /> ] --- ## Testing linearity Even worse for other models (Proportion `prop`): .pull-left[ ```r plot(prop ~ WatrCont, data = mites) abline(lm.prop) ``` <img src="workshop07-en_files/figure-html/unnamed-chunk-10-1.png" width="432" style="display: block; margin: auto;" /> ] .pull-right[ ```r par(mfrow = c(2, 2), cex = 1.4) plot(lm.prop) ``` <img src="workshop07-en_files/figure-html/unnamed-chunk-11-1.png" width="432" style="display: block; margin: auto;" /> ] --- ## Testing linearity Even worse for other models (Presence/Absence `pa`): .pull-left[ ```r plot(pa ~ WatrCont, data = mites) abline(lm.pa) ``` <img src="workshop07-en_files/figure-html/unnamed-chunk-12-1.png" width="432" style="display: block; margin: auto;" /> ] .pull-right[ ```r par(mfrow = c(2, 2), cex = 1.4) plot(lm.pa) ``` <img src="workshop07-en_files/figure-html/unnamed-chunk-13-1.png" width="432" style="display: block; margin: auto;" /> ] --- ## Model assumptions Common in Ecology that assumptions of homogeneity of variance and normality are not met. - Main reason why we need GLMs! .comment[Let's revisit the assumptions of lm...] --- ## Model assumptions Equation of lm: `\(y = \beta_0 + \beta_1x_i + \varepsilon\)` where: `\(y_i\)` = predicted value of response variable `\(\beta_0\)` = intercept `\(\beta_1\)` = slope `\(x_i\)` = explanatory variable `\(\varepsilon_i\)` = model residuals drawn from a normal distribution with a varying mean but a constant variance** .comment[.alert[**Key point!] Residuals (the distance between each observation and the regression line) can be predicted by drawing random values from a normal distribution.] --- ## Normally distributed residuals Recall: Normal distributions have two parameters, `\(\mu\)` (mean) and `\(\sigma\)` (variance): <br> .pull-left[ Varing `\(\mu\)`, `\(\sigma = 5\)` <img src="workshop07-en_files/figure-html/unnamed-chunk-14-1.png" width="432" style="display: block; margin: auto;" /> ] .pull-right[ `\(\mu = 25\)`, varing `\(\sigma\)` <img src="workshop07-en_files/figure-html/unnamed-chunk-15-1.png" width="432" style="display: block; margin: auto;" /> ] --- ## Normally distributed residuals Another way to write the lm equation is: `\(y_i \sim N(\mu = \beta_0 + \beta_1 X_i, \sigma^2)\)` <br> Which literally means that `\(y_i\)` is drawn from a normal distribution with parameters `\(\mu\)` (which depends on `\(x_i\)`) and `\(\sigma\)` (which has the same value for all `\(Y\)`s) <br> .comment[Lets predict Galumna abund as a function of water content using the `lm` we fitted earlier...] --- ## Model prediction We need regression coefficients ( `\(\beta\)`) and `\(\sigma\)`: ```r coef(lm.abund) # (Intercept) WatrCont # 3.439348672 -0.006044788 summary(lm.abund)$sigma # [1] 1.513531 ``` What are the parameters of the normal distribution used to model `\(y\)` when water content = 300? `\(y_i \sim N(\mu = \beta_0 + \beta_1 X_i, \sigma^2)\)` -- `\(\mu = 3.44 + (-0.006 x 300) = 1.63\)` `\(\sigma = 1.51\)` --- ## Model prediction - At `\(x = 300\)`, residuals should follow a normal distribution with `\(\mu = 1.63\)` and `\(\sigma^2 = 1.51\)`. - At `\(x = 400\)`, we get `\(\mu = 1.02\)` and `\(\sigma^2 = 1.51\)`, etc. <br> Graphically, this is our model: -- .pull-left[ .center[  ]] -- .pull-right[ **Problems**: - `\(\sigma^2\)` is not homogeneous, yet `lm()` forces a constant `\(\sigma^2\)` - Predicted values should be integers ] --- ## Biological data & distributions Statisticians have described a multitude of distributions that correspond to different types of data A distribution provides the probability of observing each possible outcome of an experiment or survey (e.g. `\(abund = 8\)` Galumna) Distributions can be **discrete** (only includes integers or **continuous** (includes fractions) All distributions have **parameters** that dictate the shape of the distribution (e.g. `\(\mu\)` and `\(\sigma^2\)` for the normal) --- ## Biological data & distributions Galumna abund follows a discrete distribution (can only take integer values). A useful distribution to model abundance data is the “Poisson” distribution: - a discrete distribution with a single parameter, `\(\lambda\)` (lambda), which defines both the mean and the variance of the distribution: <img src="workshop07-en_files/figure-html/unnamed-chunk-17-1.png" width="1080" style="display: block; margin: auto;" /> --- ## Biological data & distributions Galumna seems to follow a Poisson distribution with a low value of `\(\lambda\)`: .pull-left[ ```r hist(mites$Galumna) ``` <img src="workshop07-en_files/figure-html/unnamed-chunk-18-1.png" width="432" style="display: block; margin: auto;" /> ] .pull-right[ ```r mean(mites$Galumna) # [1] 0.9571429 ``` ] --- ## Biological data & distributions Presence-absence takes yet another form: - only `0`s and `1`s - Poisson distribution would not be appropriate to model this variable ```r hist(mites$pa) ``` <img src="workshop07-en_files/figure-html/unnamed-chunk-20-1.png" width="432" style="display: block; margin: auto;" /> --- ## Biological data & distributions **“Bernoulli” distribution**: - Only two possible outcomes in its range: success (`1`) or failure (`0`) - One parameter, `\(p\)`, the probability of success <br> <img src="workshop07-en_files/figure-html/unnamed-chunk-21-1.png" width="864" style="display: block; margin: auto;" /> We can use the Bernouilli distribution to calculate the probability Galumna present (`1`) vs. absent (`0`) --- ## Biological data & distributions **Binomial distribution**: When there are multiple trials (each with a success/failure), the Bernoulli distribution expands into the binomial - Additional parameter, n, for number of trials - Predicts the probability of observing a given proportion of successes, p, out of a known total number of trials, `\(n\)` <img src="workshop07-en_files/figure-html/unnamed-chunk-22-1.png" width="1080" style="display: block; margin: auto;" /> --- ## Biological data & distributions **Binomial distribution**: used to model data where the number of successes are integers and where the number of trials, n, is known. **Main difference with Poisson distribution**: the binomial has an upper limit to its range, corresponding to `n`. Consequently, it is right-skewed at low p values but left-skewed at high `p` values <img src="workshop07-en_files/figure-html/unnamed-chunk-23-1.png" width="720" style="display: block; margin: auto;" /> --- ## Biological data & distributions Getting back to our problem... can switch the distribution of error terms (εi) from normal to Poisson: `$$y_i \sim Poisson(\lambda = \beta_0 + \beta_1 x_i)$$` Problems solved! 1. `\(\lambda\)` varies with `\(x\)` (water content) which means residual variance will also vary with `\(x\)`, which means that we just relaxed the homogeneity of variance assumption! 2. Predicted values will now be integers instead of fractions 3. The model will never predict negative values (Poisson is strictly positive) --- ## Biological data & distributions This is **almost** a Poisson GLM, which looks like this: .center[] Probabilities (in orange) are now integers, and both the variance and the mean of the distribution decline as `\(\lambda\)` decreases with increasing water content. --- class: inverse, center, middle # GLM with binary data --- ## Binary variables Common response variable in ecological datasets is the binary variable: we observe a phenomenon X or its “absence” - Presence/Absence of a species - Presence/Absence of a disease - Success/Failure to observe behaviour - Survival/Death of organisms Wish to determine if `\(P/A \sim Environment\)` .comment[*] .comment[Called a logistic regression or logit model] --- ## Binary variables In `R`, binary variables are coded with `1` and `0`: <br> .pull-left[ .right[ ``` # Site Presence # 1 A 1 # 2 B 0 # 3 C 1 # 4 D 1 # 5 E 0 # 6 F 1 ``` ]] .pull-right[ <br> 1 = Presence <br> 0 = Absence ] --- ## Binary variables Clearly not normally distributed! <br> <img src="workshop07-en_files/figure-html/unnamed-chunk-26-1.png" width="504" style="display: block; margin: auto;" /> --- ## Binary variables Expected values can be out of the `[0,1]` range with `lm()`: <br> <img src="workshop07-en_files/figure-html/unnamed-chunk-27-1.png" width="540" style="display: block; margin: auto;" /> --- ## Probability distribution The Bernoulli distribution is well suited for binary response variables <br> .pull-left[.right[ `\(E(Y) = p\)` <br> `\(Var(Y) = p \times (1 - p)\)` ]] .pull-right[  **Mean of distribution** .small[Probability `\(p\)` of observing an outcome]  **Variance of distribution** .small[Variance decreases as `\(p\)` is close to `0` or `1`] ] --- ## Logistic regression The `glm()` function! <br> `logit.reg <- glm(formula, data, family)` <br> To move away from traditional linear models, need to specify two things (`family`): 1. probability distribution **AND** 2. a link function --- ## The Link Function For a simple linear model of a normally distributed continuous response variable, the equation for the expected values is: <br> `$$\mu = x\beta$$` where - `\(\mu\)` is the expected value of the response variable - `\(x\)` is the model matrix (*i.e.* your data) - `\(\beta\)` is the vector of estimated parameters (*i.e.* the intercept & slope) <br> `\(x\beta\)` is called the **linear predictor** --- ## The Link Function `\(\mu = x\beta\)` is only true for normally distributed data If this is not the case, must use a transformation on the expected values `\(\mu\)` `$$g(\mu) = x\beta$$` where `\(g(\mu)\)` is the link function <br> This allows us to relax the normality assumption --- ## The Link Function For binary data, the link function is called the **logit**: <br> `$$g(\mu) = log\frac{\mu}{1-\mu}$$` `\(\mu =\)` expected values (probability that `\(Y = 1\)`) <br> - Get the odds ( `\(\frac{\mu}{1-\mu}\)`) - log-transform them --- ## The Link Function `$$g(\mu) = log\frac{\mu}{1-\mu}$$` - Get the odds ( `\(\frac{\mu}{1-\mu}\)`) - log-transform them <br> The odds puts our expected values on a `0` to `+Inf` scale The log transformation puts our expected values on a `-Inf` to `+Inf`  The expected values can now be **linearly** related to the linear predictor --- ## Exercise 1 Build a logistic regression model using the mites data ```r #setwd('...') mites <- read.csv("mites.csv", header = TRUE) str(mites) ``` ``` # 'data.frame': 70 obs. of 9 variables: # $ Galumna : int 8 3 1 1 2 1 1 1 2 5 ... # $ pa : int 1 1 1 1 1 1 1 1 1 1 ... # $ totalabund: int 140 268 186 286 199 209 162 126 123 166 ... # $ prop : num 0.05714 0.01119 0.00538 0.0035 0.01005 ... # $ SubsDens : num 39.2 55 46.1 48.2 23.6 ... # $ WatrCont : num 350 435 372 360 204 ... # $ Substrate : Factor w/ 7 levels "Barepeat","Interface",..: 4 3 2 4 4 4 4 2 3 4 ... # $ Shrub : Factor w/ 3 levels "Few","Many","None": 1 1 1 1 1 1 1 2 2 2 ... # $ Topo : Factor w/ 2 levels "Blanket","Hummock": 2 2 2 2 2 2 2 1 1 2 ... ``` --- ## Exercise 1 Build a model of the presence of Galumna sp. as a function of water content and topography ```r logit.reg <- glm(pa ~ WatrCont + Topo, data=mites, family = binomial(link = "logit")) ``` ```r summary(logit.reg) ``` --- ## Exercise 1 .small[ ```r summary(logit.reg) # # Call: # glm(formula = pa ~ WatrCont + Topo, family = binomial(link = "logit"), # data = mites) # # Deviance Residuals: # Min 1Q Median 3Q Max # -2.0387 -0.5589 -0.1594 0.4112 2.0252 # # Coefficients: # Estimate Std. Error z value Pr(>|z|) # (Intercept) 4.464402 1.670622 2.672 0.007533 ** # WatrCont -0.015813 0.004535 -3.487 0.000489 *** # TopoHummock 2.090757 0.735348 2.843 0.004466 ** # --- # Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 # # (Dispersion parameter for binomial family taken to be 1) # # Null deviance: 91.246 on 69 degrees of freedom # Residual deviance: 48.762 on 67 degrees of freedom # AIC: 54.762 # # Number of Fisher Scoring iterations: 6 ``` ] --- ## Challenge 1 ![:cube]() Using the 'bacteria' dataset, model the presence of *H. influenzae* as a function of treatment and week of test. Start with a full model and reduce it to the most parsimonious model. ```r #install.packages("MASS") library(MASS) data(bacteria) str(bacteria) # 'data.frame': 220 obs. of 6 variables: # $ y : Factor w/ 2 levels "n","y": 2 2 2 2 2 2 1 2 2 2 ... # $ ap : Factor w/ 2 levels "a","p": 2 2 2 2 1 1 1 1 1 1 ... # $ hilo: Factor w/ 2 levels "hi","lo": 1 1 1 1 1 1 1 1 2 2 ... # $ week: int 0 2 4 11 0 2 6 11 0 2 ... # $ ID : Factor w/ 50 levels "X01","X02","X03",..: 1 1 1 1 2 2 2 2 3 3 ... # $ trt : Factor w/ 3 levels "placebo","drug",..: 1 1 1 1 3 3 3 3 2 2 ... ``` --- ## Solution ![:cube]() ```r model.bact1 <- glm(y ~ trt * week, data = bacteria, family = binomial) ``` ```r model.bact2 <- glm(y ~ trt + week, data = bacteria, family = binomial) ``` ```r model.bact3 <- glm(y ~ week, data = bacteria, family = binomial) ``` ```r anova(model.bact1, model.bact2, model.bact3, test = "LRT") # Analysis of Deviance Table # # Model 1: y ~ trt * week # Model 2: y ~ trt + week # Model 3: y ~ week # Resid. Df Resid. Dev Df Deviance Pr(>Chi) # 1 214 203.12 # 2 216 203.81 -2 -0.6854 0.70984 # 3 218 210.91 -2 -7.1026 0.02869 * # --- # Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` --- ## Interpreting the output Let's go back to the summary of our `logit.reg` model to see the coefficients: ```r summary(logit.reg)$coefficients # Estimate Std. Error z value Pr(>|z|) # (Intercept) 4.46440199 1.670622482 2.672299 0.0075333598 # WatrCont -0.01581255 0.004535069 -3.486728 0.0004889684 # TopoHummock 2.09075654 0.735348234 2.843220 0.0044660283 ``` The output indicates that both water content and topography are significant .comment[But how do we interpret the slope coefficients?] --- ## Interpreting the output Remember we used a logit transformation on the expected values! To properly interpret the regression parameters, we have to use a 'reverse' function: <br> The natural exponential function to obtain the odds: `\(e^x\)` The inverse logit function to obtain the probabilities: `$$logit^{-1} = \frac{1}{1 + \frac{1}{e^x}}$$` --- ## Interpreting the output On the odds scale for water content: ```r exp(logit.reg$coefficient[2]) # WatrCont # 0.9843118 ``` On the probability scale for water content: ```r 1 / (1 + 1/exp(logit.reg$coefficient[2])) # WatrCont # 0.4960469 ``` --- ## Predictive Power and goodness of fit Get the pseudo-R², the analogue of the `\(R^2\)` for models fitted by maximum likelihood: `$$\text{pseudo-R}^2 = \frac{\text{null deviance - residual deviance}}{\text{null deviance}}$$` <br> `\(\text{pseudo-R}^2 = \text{variance explained by the model}\)` --- ## Predictive Power and goodness of fit Comparing deviance of your model (residual deviance) to the deviance of a null model (null deviance) The **null model** is a model without explanatory variables ```R null.model <- glm(Response.variable ~ 1, family = binomial) ``` --- ## Predictive Power and goodness of fit In R, we can extract the residual and null deviances directly from the glm object: ```r objects(logit.reg) # [1] "aic" "boundary" "call" # [4] "coefficients" "contrasts" "control" # [7] "converged" "data" "deviance" # [10] "df.null" "df.residual" "effects" # [13] "family" "fitted.values" "formula" # [16] "iter" "linear.predictors" "method" # [19] "model" "null.deviance" "offset" # [22] "prior.weights" "qr" "R" # [25] "rank" "residuals" "terms" # [28] "weights" "xlevels" "y" ``` ```r pseudoR2 <- (logit.reg$null.deviance - logit.reg$deviance) / logit.reg$null.deviance pseudoR2 # [1] 0.4655937 ``` .comment[Hence, the model explains 46.6% of the variability in the data] --- ## Predictive Power and goodness of fit New statistic - **coefficient of discrimination (D)** evaluates the predictive power of logistic regression - Measure of how well logistic regression classifies an outcome as a success or a failure To assess goodness of fit, diagnostic plots are not useful, instead must use **Hosmer-Lemeshow test**: - Compare observed and expected number of outcomes - Similar to a Chi square test --- ## Exercise 2 In R these tests are available in the `binomTools` package Compute the coefficient of discrimination D ```r fit <- binomTools::Rsq(object = logit.reg) fit # # R-square measures and the coefficient of discrimination, 'D': # # R2mod R2res R2cor D # 0.5205221 0.5024101 0.5025676 0.5114661 # # Number of binomial observations: 70 # Number of binary observation: 70 # Average group size: 1 ``` --- #### Exercise 2: Perform a Hosmer-Lemeshow test .comment[*] .small[ ```r binomTools::HLtest(binomTools::Rsq(model.bact2)) # # Hosmer and Lemeshow's goodness-of-fit test for logistic regression models # with method = deciles # Observed counts: # 1 2 3 4 5 6 7 8 9 10 # 8 10 7 4 4 2 1 4 1 2 # 16 13 18 16 22 26 14 14 19 19 # # Expected counts: # 1 2 3 4 5 6 7 8 9 10 # 9.7 6.8 5.8 4.4 5 4.1 2 2 1.8 1.5 # 14.3 16.2 19.2 15.6 21 23.9 13 16 18.2 19.5 # # Pearson residuals: # 1 2 3 4 5 6 7 8 9 10 # -0.5 1.2 0.5 -0.2 -0.4 -1.0 -0.7 1.4 -0.6 0.4 # 0.4 -0.8 -0.3 0.1 0.2 0.4 0.3 -0.5 0.2 -0.1 # # Chi-square statistic: 7.812347 with 8 df # P-value: 0.4520122 # # Warning: Test may not be appropriate due to low expected frequencies ``` ] .comment[A non significant value indicates an adequate fit!] --- ## Challenge 1 ![:cube]() 1. Using the model created with bacteria dataset, assess goodness of fit and predictive power. 2. Think how predictive power could be improved for this model. --- ## Solution ![:cube]() 1: ```r null.d <- model.bact2$null.deviance resid.d <- model.bact2$deviance bact.pseudoR2 <- (null.d -resid.d) / null.d HLtest(Rsq(model.bact2) ``` 2: Adding informative explanatory variables could increase the explanatory power of the model --- ## Proportion data and GLM Sometimes, proportion data is more similar to logistic regression than you think... If we measure the number of occurrences and we know the total sample size, it is not count data! Suppose we measure disease prevalence in ten deer populations on 10 deer individuals per population: .pull-left[ `$$\frac{x\,\, \text{infected deer}}{10\,\,\text{deer}}$$` ] .pull-right[  always bound between `0` and `1`! ] --- ## Exercise 3 In R, we have to specify the number of times something happened and the number of times something did not happen: ```r prop.reg <- glm(cbind(Galumna, totalabund - Galumna) ~ Topo + WatrCont, data = mites, family = binomial) ``` ```r summary(prop.reg) ``` --- ## Exercise 3 .small[ ```r summary(prop.reg) # # Call: # glm(formula = cbind(Galumna, totalabund - Galumna) ~ Topo + WatrCont, # family = binomial, data = mites) # # Deviance Residuals: # Min 1Q Median 3Q Max # -1.4808 -0.9699 -0.6327 -0.1798 4.1688 # # Coefficients: # Estimate Std. Error z value Pr(>|z|) # (Intercept) -3.288925 0.422109 -7.792 6.61e-15 *** # TopoHummock 0.578332 0.274928 2.104 0.0354 * # WatrCont -0.005886 0.001086 -5.420 5.97e-08 *** # --- # Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 # # (Dispersion parameter for binomial family taken to be 1) # # Null deviance: 140.702 on 69 degrees of freedom # Residual deviance: 85.905 on 67 degrees of freedom # AIC: 158.66 # # Number of Fisher Scoring iterations: 5 ``` ] --- ## Exercise 3 We can also code the model directly with proportions : ```r prop.reg2 <- glm(prop ~ Topo + WatrCont, data = mites, family = binomial, weights = totalabund) ``` --- class: inverse, center, middle # GLM with count data --- ## Modeling count data .large[What is count data?] Import the `faramea.csv` into R ```r faramea <- read.csv('faramea.csv', header = TRUE) ``` The number of trees of the species *Faramea occidentalis* was assessed in 43 quadrats in Barro Colorado Island (Panama). For each quadrat, environmental characteristics were also recorded such as elevation or precipitation. Let's investigate the histogram of the number of *Faramea occidentalis* --- ## Modeling count data .large[What is count data?] <img src="workshop07-en_files/figure-html/unnamed-chunk-51-1.png" width="432" style="display: block; margin: auto;" /> Count data are characterized by: - positive values: you do not count -7 individuals - integer values: you do not count 7.56 individuals - exhibits larger variance for large values --- ## Modeling count data .large[How to model count data?] Does elevation influence the abundance of *F. occidentalis*? <img src="workshop07-en_files/figure-html/unnamed-chunk-52-1.png" width="432" style="display: block; margin: auto;" /> the **Poisson distribution** seems to be the perfect choice to model this data, hence **Poisson GLMs** are usually the good way to start modeling count data. --- ## The Poisson distribution The Poisson distribution specifies the probability of a discrete random variable Y and is given by: `$$f(y, \,\mu)\, =\, Pr(Y = y)\, =\, \frac{\mu^y \times e^{-\mu}}{y!}$$` `$$E(Y)\, =\, Var(Y)\, =\, \mu$$` **Properties**: - `\(\mu\)` is the parameter of the Poisson distribution - specifies the probably only for integer values - probability for negative values is null ( `\(P(Y<0) = 0\)`) - mean = variance (allows for heterogeneity) --- ## Poisson GLM behind the scenes A Poisson GLM will model the value of µ as a function of different explanatory variables <br> .center[**Three steps**] **Step 1.** We assume Yi follows a Poisson distribution with mean and variance `\(\mu_i\)` `$$Y_i = Poisson(\mu_i)$$` `$$E(Y_i) = Var(Y_i) = \mu_i$$` `$$f(y_i, \, \mu_i) = \frac{\mu^{y_i}_i \times e^{-\mu_i}}{y!}$$` `\(\mu_i\)` corresponds to the expected number of individuals --- ## Poisson GLM behind the scenes **Step 2.** We specify the systematic part of the model just as in a linear model Interpreting the output `$$\underbrace{\alpha}_\text{One intercept} + \underbrace{\beta}_\text{slope of 'Elevation'} \times \text{Elevation}_i$$` **Step 3.** The link between the mean of `\(Y_i\)` and the systematic part is a logarithmic `$$log(\mu_i) = \alpha + \beta \times \text{Elevation}_i$$` .center[or] `$$\mu_i = e^{ \alpha + \beta \times \text{Elevation}_i}$$` --- ## Fitting a Poisson GLM in R The function `glm()` allows you to specify a Poisson GLM ```r glm.poisson = glm(Faramea.occidentalis~Elevation, data=faramea, family=poisson) ``` `family` argument specify the distribution and link function <br> As with `lm()` you can access the outputs of the model using the function `summary()` ```r summary(glm.poisson) ``` --- ## Model summary .pull-left2[ .small[ ```r summary(glm.poisson) # # Call: # glm(formula = Faramea.occidentalis ~ Elevation, family = poisson, # data = faramea) # # Deviance Residuals: # Min 1Q Median 3Q Max # -3.3319 -2.7509 -1.5451 0.1139 11.3995 # # Coefficients: # Estimate Std. Error z value Pr(>|z|) # (Intercept) 1.7687001 0.1099136 16.092 < 2e-16 *** # Elevation -0.0027375 0.0006436 -4.253 2.11e-05 *** # --- # Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 # # (Dispersion parameter for poisson family taken to be 1) # # Null deviance: 414.81 on 42 degrees of freedom # Residual deviance: 388.12 on 41 degrees of freedom # AIC: 462.01 # # Number of Fisher Scoring iterations: 10 ``` ]] .pull-right2[ Estimates: Intercept = `\(\alpha\)` Elevation = `\(\beta\)` ] -- .pull-right2[ <br> What about `Null deviance` and `Residual deviance`?! ] --- ## Parameter estimates In our model the unknown parameters are the intercept ( `\(\alpha\)`) and the slope of elevation ( `\(\beta\)`) `$$log(\mu_i) = 1.769 - 0.0027 \times \text{Elevation}_i$$` .center[or] `$$\mu_i = e^{1.769 - 0.0027 \times \text{Elevation}_i}$$` --- ## The deviance Remember that to estimate the unknown parameters maximum likelihood estimation is used The residual deviance is defined as twice the difference between the log likelihood of a model that provides a perfect fit and the log likelihood of our model `$$\text{Res dev} = 2 \, log(L(y;\,y)) - 2 \, log(L(y;\, \mu))$$` In a Poisson GLM, the residual deviance should equal the residual degrees of freedoms .center[.alert[388.12 >> 41]] --- ## Overdispersion When the residual deviance is higher than the residual degrees of freedom we say that the model is **overdispersed** `$$\phi \, = \, \frac{\text{Residual deviance}}{\text{Residual degrees of freedom}}$$` Occurs when the variance in the data is even higher than the mean, hence the Poisson distribution is not the best choice (many zeros, many very high values, missing covariates, etc) .center[.large[**Solutions**]] .pull-left[ 1: Correct for overdispersion using **quasi-Poisson GLM** ] .pull-right[ 2: Choose another distribution: **the negative binomial** ] --- ## Quasi-Poisson GLM The variance of the distribution will account for **overdispersion**: `$$E(Y_i) = \mu_i$$` `$$Var(Y_I) = \phi \times \mu_i$$` the **systematic part** and the **link function** remain the same `\(phi\)` is the dispersion parameter. It will be estimated prior to estimate parameters. Correcting for overdispersion will not affect parameter estimates but will affect their **significance**. Indeed, the standard errors of the parameters are multiplied by `\(\sqrt{\phi}\)` .alert[Some marginally significant p-values may no longer hold!] --- ## Fitting a quasi-Poisson GLM in R Create a new GLM using the 'quasipoisson' family or update the previous one ```r glm.quasipoisson = glm(Faramea.occidentalis ~ Elevation, data = faramea, family=quasipoisson) glm.quasipoisson = update(glm.poisson, family = quasipoisson) ``` --- ## Fitting a quasi-Poisson GLM in R .pull-left2[ .small[ ```r summary(glm.quasipoisson) # # Call: # glm(formula = Faramea.occidentalis ~ Elevation, family = quasipoisson, # data = faramea) # # Deviance Residuals: # Min 1Q Median 3Q Max # -3.3319 -2.7509 -1.5451 0.1139 11.3995 # # Coefficients: # Estimate Std. Error t value Pr(>|t|) # (Intercept) 1.768700 0.439233 4.027 0.000238 *** # Elevation -0.002738 0.002572 -1.064 0.293391 # --- # Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 # # (Dispersion parameter for quasipoisson family taken to be 15.96936) # # Null deviance: 414.81 on 42 degrees of freedom # Residual deviance: 388.12 on 41 degrees of freedom # AIC: NA # # Number of Fisher Scoring iterations: 10 ``` ]] .pull-right2[ **Same estimates but** .small[The standard errors of the parameters are multiplied by] `$$\sqrt{\phi} = 4$$` `0.0006436 * 4 = 0.00257` <- `\(\phi\)` <br> <- .small[AIC is not defined] ] --- ## Fitting a quasi-Poisson GLM in R Try also deviance analysis to test for the effect of Elevation ```r null.model <- glm(Faramea.occidentalis ~ 1, data = faramea, family = quasipoisson) anova(null.model, glm.quasipoisson, test = "Chisq") # Analysis of Deviance Table # # Model 1: Faramea.occidentalis ~ 1 # Model 2: Faramea.occidentalis ~ Elevation # Resid. Df Resid. Dev Df Deviance Pr(>Chi) # 1 42 414.81 # 2 41 388.12 1 26.686 0.1961 ``` --- ## Dispersion parameter .center[] --- ## Negative binomial GLM Negative binomial GLMs are favor when overdispersion is high - It has **two parameters** `\(\mu\)` and `\(k\)`. `\(k\)` controls for the dispersion parameter (smaller `\(k\)` indicates higher dispersion) - It corresponds to a combination of two distributions (**Poisson** and **gamma**) - It assumes that the `\(Y_i\)` are Poisson distributed with the mean `\(\mu\)` assumed to follow a gamma distribution! `$$E(Y_i) = \mu_i$$` `$$Var(Y_i) = \mu_i + \frac{\mu^2_i}{k}$$` --- ## Fitting a negative binomial in R NB is not in the `glm()` function so you need to install and charge the `MASS` package ```r install.packages('MASS') ``` ```r glm.negbin = glm.nb(Faramea.occidentalis ~ Elevation, data = faramea) ``` ```r summary(glm.negbin) ``` --- .pull-left2[ .small[ ``` # # Call: # glm.nb(formula = Faramea.occidentalis ~ Elevation, data = faramea, # init.theta = 0.2593107955, link = log) # # Deviance Residuals: # Min 1Q Median 3Q Max # -1.36748 -1.17564 -0.51338 -0.05226 2.25716 # # Coefficients: # Estimate Std. Error z value Pr(>|z|) # (Intercept) 2.369226 0.473841 5.00 5.73e-07 *** # Elevation -0.007038 0.002496 -2.82 0.00481 ** # --- # Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 # # (Dispersion parameter for Negative Binomial(0.2593) family taken to be 1) # # Null deviance: 41.974 on 42 degrees of freedom # Residual deviance: 36.343 on 41 degrees of freedom # AIC: 182.51 # # Number of Fisher Scoring iterations: 1 # # # Theta: 0.2593 # Std. Err.: 0.0755 # # 2 x log-likelihood: -176.5090 ``` ]] .pull-right2[ <br><br><br><br><br><br><br><br><br><br><br><br><br> `theta` `\(= k\)` ] --- ## Plotting the final GLM model **Step 1** Plot the data and the use estimates of the parameters to draw model line `$$\mu_i = e^{2.369 - 0.007 \times Elevation_i}$$` Use `summary()` to get the parameters ```r summary(glm.negbin)$coefficients[1, 1] summary(glm.negbin)$coefficients[2, 1] ``` **Step 2** Use the standard errors to build the confidence envelope ```r summary(glm.negbin)$coefficients[1, 2] summary(glm.negbin)$coefficients[2, 2] ``` `$$\text{Upper limit} = e^{[\alpha - 1.96 \times SE_{\alpha}] + [\beta - 1.96 \times SE_{\beta}] \times \text{Elevation}_i}$$` `$$\text{Upper limit} = e^{[\alpha + 1.96 \times SE_{\alpha}] + [\beta + 1.96 \times SE_{\beta}] \times \text{Elevation}_i}$$` --- .small[ ```r pp <- predict(glm.negbin, newdata = data.frame(Elevation = 1:800), se.fit = TRUE) linkinv <- family(glm.negbin)$linkinv ## inverse-link function pframe$pred0 <- pp$fit pframe$pred <- linkinv(pp$fit) sc <- abs(qnorm((1-0.95)/2)) ## Normal approx. to likelihood pframe <- transform(pframe, lwr = linkinv(pred0-sc*pp$se.fit), upr = linkinv(pred0+sc*pp$se.fit)) plot(faramea$Elevation, faramea$Faramea.occidentalis, ylab = 'Number of F. occidentalis', xlab = 'Elevation(m)') lines(pframe$pred, lwd = 2) lines(pframe$upr, col = 2, lty = 3, lwd = 2) lines(pframe$lwr, col = 2, lty = 3, lwd = 2) ``` ] <img src="workshop07-en_files/figure-html/unnamed-chunk-62-1.png" width="432" style="display: block; margin: auto;" /> --- ## Challenge 3 ![:cube]() Use the `mites` dataset! Model the abundance of the species Galumna as a function of the substrate characteristics (water content `WatrCont` and density `SubsDens`) - Do you need to account for overdispersion? - Which covariates have a significant effect? - Select the best model! ```r mites <- read.csv("mites.csv", header = TRUE) ``` --- ## Challenge 3: tips ![:cube]() Drop each term in turn and compare the full model with a nested model using the command: ```r drop1(MyGLM, test = "Chi") ``` Specify manually a nested model, call it for example MyGLM2, and use the command: ```r anova(MyGLM, MyGLM2, test = "Chi") ``` --- ## Challenge 3: solution ![:cube]() .small[ ```r # Poisson GLM glm.p = glm(Galumna~WatrCont+SubsDens, data=mites, family=poisson) # quasi-Poisson GLM glm.qp = update(glm.p,family=quasipoisson) # model selection drop1(glm.qp, test = "Chi") # Single term deletions # # Model: # Galumna ~ WatrCont + SubsDens # Df Deviance scaled dev. Pr(>Chi) # <none> 101.49 # WatrCont 1 168.10 31.711 1.789e-08 *** # SubsDens 1 108.05 3.125 0.07708 . # --- # Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ``` ```r # or glm.qp2 = glm(Galumna~WatrCont, data=mites, family=quasipoisson) anova(glm.qp2, glm.qp, test="Chisq") ``` ] --- ## Challenge 3: solution ![:cube]() <br> .center[ <img src="workshop07-en_files/figure-html/unnamed-chunk-66-1.png" width="432" style="display: block; margin: auto;" /> ] --- ## Other distributions - **Logit transformation of the data** often used with `lm(m)` for percentages or proportions when the binomial distribution is not appropriate. When not selections from fixed quantities (e.g. percent cover, school grades, etc). - **Log-normal distribution in glm**, avoids having to log-transform the data. - **Gamma distribution**. Similar to log-normal, more versatile. - **Tweedie distribution**. Versatile family of distributions. Useful for data with a mix of zeros and positive values (not necessarily counts). - **Zero-inflated Poisson or zero-inflated negative binomial**. When the data comprise an excess number of zeros, that arise from a different process than the process that generates the counts. --- class: inverse, center, middle # GLMMs --- ## Review: Linear Mixed Models **Review of LMM Workshop**: - Structure in the dataset or correlation among observations can result in **lack of independence among observations** sampled from same sites or time points - Account for this by including r**andom effect terms** **Random effects**: - Parameter is a sample from the population, i.e. the subjects you happen to be working with - Explains the variance of the response variable **Fixed effects**: - Parameter is reproducible, i.e. would be the same across studies - Explain the mean of the response variable --- ## Review: Linear Mixed Models .pull-left[ **Shrinkage estimates** - Random effects are often called **shrinkage estimates** because they represent a weighted average of the data and the overall fit (fixed effect) - The random effect shrinkage toward the overall fit (fixed effect) is more severe if the within-group variability is large compared to the among-group variability ] .pull-right[ <br>  ] --- ## Generalized Linear Mixed Models Extension of GLMs to account for additional structure in dataset Follows similar steps introduced in LMM Workshop 1. LMMs incorporate random effects 2. GLMs handle non-normal data (letting errors take on different distribution families - e.g. Poisson or negative binomial) --- ## How to run a GLMM in R Import the `Arabidopsis` dataset `banta_totalfruits.csv` into R. ```r dat.tf <- read.csv("banta_totalfruits.csv") ``` ```r # popu factor with a level for each population # gen factor with a level for each genotype # nutrient factor with levels for low (value = 1) or high (value = 8) # amd factor with levels for no damage or simulated herbivory # total.fruits integer indicating the number of fruits per plant ``` The effect of nutrient availability and herbivory (**fixed effects**) on the fruit production of the mouse-ear cress (Arabidopsis thaliana) was evaluated by measuring 625 plants across 9 different populations, each comprised of 2 to 3 different genotypes (**random effects**) --- ## Choosing error distribution The response variable is count data which suggests to that a **Poisson distribution** should be used (i.e. the variance is equal to the mean) <img src="workshop07-en_files/figure-html/unnamed-chunk-68-1.png" width="432" style="display: block; margin: auto;" /> However, as we will soon see, the variance increases with the mean much more rapidly than expected under the Poisson distribution --- ## Exploring variance To illustrate heterogeneity in variance we will first create boxplots of the response variable versus different environmental factors Let's create new variables that represents every combination of **nutrient** x **clipping** x **random factor** ```r dat.tf <- within(dat.tf, { # genotype x nutrient x clipping gna <- interaction(gen,nutrient,amd) gna <- reorder(gna, total.fruits, mean) # population x nutrient x clipping pna <- interaction(popu,nutrient,amd) pna <- reorder(pna, total.fruits, mean) }) ``` --- ## Exploring variance .small[ ```r # Boxplot of total fruits vs genotype x nutrient x clipping interaction library(ggplot2) ggplot(data = dat.tf, aes(factor(x = gna),y = log(total.fruits + 1))) + geom_boxplot(colour = "skyblue2", outlier.shape = 21, outlier.colour = "skyblue2") + theme_bw() + theme(axis.text.x=element_blank()) + stat_summary(fun.y=mean, geom="point", colour = "red") ``` <img src="workshop07-en_files/figure-html/unnamed-chunk-70-1.png" width="576" style="display: block; margin: auto;" /> ] .comment[Similarly, the boxplot of total fruits vs population x nutrient x clipping interaction shows a large amount of heterogeneity among populations.] --- ## Choosing error distribution As we just saw, there is a large amount of heterogeneity among group variances even when the response variable is transformed If we plot the **group variances vs group means** (example with genotype x nutrient x clipping grouping shown here), we can appreciate that the Poisson family is the least appropriate distribution (i.e. variances increase much faster than the means) .small[.pull-left[  ] .pull-right[ <font color="blue">NB = negative binomial</font> <br> <font color="red">QP = quasi-Poisson</font> <br> <font color="LightBlue">loess = Locally weighted regression smoothing</font> ]] --- ## Poisson GLMM Given the mean-variance relationship, we will most likely need a model with overdispersion - but let's start with a Poisson model: To run a GLMM in R we simply need to use the `glmer()` function of the lme4 package ```r library(lme4) mp1 <- glmer(total.fruits ~ nutrient*amd + rack + status + (1|popu)+ (1|gen), data = dat.tf, family = "poisson") ``` **Random effects**: `(1|popu)` contains a random intercept shared by measures that have the same value for `popu` --- ## Overdispersion check We can check for overdispersion using the `overdisp_fun()` function (Bolker *et al*. 2011) which divides the Pearson residuals by the residual degrees of freedom and tests whether ratio is greater than unity ```r # Download the glmm_funs.R code from the wiki page and source it to run the function source(file="data/glmm_funs.R") # Overdispersion? overdisp_fun(mp1) # chisq ratio p logp # 15755.86833 25.57771 0.00000 -6578.47027 ``` - Ratio is significantly `\(>>\)` 1 - As expected, we need to try a different distribution where the variance increase more rapidly than the mean --- ## Negative binomial GLMM .small[(Poisson-gamma)] Recall that the negative binomial (or Poisson-gamma) distribution meets the assumption that the **variance is proportional to the square of the mean** ```r mnb1 <- glmer.nb(total.fruits ~ nutrient*amd + rack + status + (1|popu)+ (1|gen), data=dat.tf, control=glmerControl(optimizer="bobyqa")) # Control argument specifies the way we optimize the parameter values ``` .pull-left[ ```r # Overdispersion? overdisp_fun(mnb1) ``` ] .pull-right[ .small[.alert[Ratio is now much closer to 1 although p-value is still less than 0.05]] ] --- ## Poisson-lognor mal GLMM - Another option is the **Poisson-lognormal** distribution. - This can be achieved simply by placing an observation-level random effect in the formula. .small[ ```r mpl1 <- glmer(total.fruits ~ nutrient*amd + rack + status + (1|X) + (1|popu)+ (1|gen), data=dat.tf, family="poisson", control = glmerControl(optimizer = "bobyqa")) ``` `(1|X)` deals with overdisp. by adding **observation-level random effects** ```r overdisp_fun(mpl1) # chisq ratio p logp # 1.775363e+02 2.886768e-01 1.000000e+00 -3.755952e-73 ``` .alert[Ratio now meets our criterion] ] --- .small[ **Visualization the model parameters**: A graphical representation of the model parameters can be obtained using the `coefplot2()` function from the `coefplot2` package: .pull-left[ ```r library(coefplot2) # Variance terms coefplot2(mpl1, ptype = "vcov", intercept = TRUE) ``` <img src="workshop07-en_files/figure-html/unnamed-chunk-76-1.png" width="432" style="display: block; margin: auto;" /> ] .pull-right[ ```r # Fixed effects coefplot2(mpl1, intercept = TRUE) ``` <img src="workshop07-en_files/figure-html/unnamed-chunk-77-1.png" width="432" style="display: block; margin: auto;" /> ] .alert[Note]: error bars are only shown for the fixed effects because glmer doesn't provide information on uncertainty of variance terms ] --- ## Visualization the random effects You can extract the random effect predictions using `ranef()` and plot them using a `dotplot()` from the `lattice` package Regional variability among populations: - Spanish populations (SP) larger values than Swedish (SW) or Dutch (NL) Difference among genotypes largely driven by genotype 34 ```r library(gridExtra) # dotplot code pp <- list(layout.widths=list(left.padding=0, right.padding=0), layout.heights=list(top.padding=0, bottom.padding=0)) r2 <- ranef(mpl1, condVar = TRUE) d2 <- lattice::dotplot(r2, par.settings = pp) grid.arrange(d2$gen, d2$popu, nrow = 1) ``` --- ## Visualization the random effects <br> <img src="workshop07-en_files/figure-html/unnamed-chunk-78-1.png" width="648" style="display: block; margin: auto;" /> --- ## Model selection The same methods can be used with a glmm or lmm to choose between models with various random intercepts and/or random slopes and to choose fixed effects to keep in final model. - an **information theoretic approach** (e.g., AICc - Workshop 5) - a **frequentist approach** (where the significance of each term is evaluated using `anova()` and the likelihood ratio test; LRT) --- ## Model selection We first code potential models and compare them using AICc.comment[*]: ```r mpl2 <- update(mpl1, . ~ . - rack) # model without rack mpl3 <- update(mpl1, . ~ . - status) # model without status mpl4 <- update(mpl1, . ~ . - amd:nutrient) # without amd:nutrient interaction bbmle::ICtab(mpl1, mpl2, mpl3, mpl4, type = c("AICc")) # dAICc df # mpl1 0.0 10 # mpl4 1.4 9 # mpl3 1.5 8 # mpl2 55.0 9 ``` .comment[*NB: We do not cover all possible models above, however, the interaction `amd:nutrient` can only be evaluated if both amd and nutrient (i.e., the main effects) are included in the model. ] --- ## Model selection Alternatively, we can use `drop1()` and `dfun()` functions to evaluate our fixed effects (`dfun()` converts the AIC values returned by the `drop1()` into `\(\Delta\)`AIC values) .small[ ```r dd_LRT <- drop1(mpl1,test="Chisq") (dd_AIC <- dfun(drop1(mpl1))) # Single term deletions # # Model: # total.fruits ~ nutrient * amd + rack + status + (1 | X) + (1 | # popu) + (1 | gen) # Df dAIC # <none> 0.000 # rack 1 55.083 # status 2 1.612 # nutrient:amd 1 1.444 ``` ] - Strong **rack** effect (dAIC = 55.08 if we remove this variable) - Effects of **status** and **interaction** term are weak (dAIC < 2) - Start by **removing the non-significant interaction** term to test main effects of nutrient and clipping --- ## Model selection .small[ .pull-left2[ ```r mpl2 <- update(mpl1, . ~ . - and:nutrient) # Use AIC mpl3 <- update(mpl2, . ~ . - rack) # no rack or interaction mpl4 <- update(mpl2, . ~ . - status) # no status or interaction mpl5 <- update(mpl2, . ~ . - nutrient) # no nutrient or interaction mpl6 <- update(mpl2, . ~ . - amd) # no clipping or interaction # bbmle::ICtab(mpl2, mpl3, mpl4, mpl5, mpl6, # type = c("AICc")) # Or use drop1 dd_LRT2 <- drop1(mpl2,test="Chisq") dd_AIC2 <- dfun(drop1(mpl2)) ``` ] .pull-right2[ ```r library(bbmle) ICtab(mpl2, mpl3 ,mpl4, mpl5, mpl6, type = c("AICc")) # dAICc df # mpl2 0.0 10 # mpl5 0.0 10 # mpl4 1.5 8 # mpl6 10.6 9 # mpl3 55.0 9 ``` ]] <br><br><br><br><br><br><br><br> - Both the main effects of **nutrient** and **clipping** are strong (large change in AIC of `\(135.6\)` (`mpl5`) and `\(10.2\)` (`mpl6`) if either nutrient or clipping are dropped, respectively). - Final model includes the fixed nutrient and clipping effects, rack, and observation-level random e ffects `(1|X)` to account for over-dispersion --- ## Up for a challenge? ![:cube]() Use the `inverts` dataset (larval development times (`PLD`) of 74 marine invertebrate and vertebrate species reared at different temperatures and time), answer the following questions: - What is the effect of feeding type and climate (fixed effects) on `PLD`? - Does this relationship vary among taxa (random effects)? - What is the best distribution family for this count data? - Finally, once you determined the best distribution family, re-evaluate your random and fixed effects. --- ## Additional GLMM resources **Books**: - B. Bolker (2009) Ecological Models and Data in R. Princeton University Press. - A. Zuur et al. (2009) Mixed Effects Models and Extensions in Ecology with R. Springer. **Websites**: - GLMM for ecologists (http://glmm.wikidot.com) .small[.comment[A great website on GLMM with a Q&A section!]] --- class: inverse, center, bottom # Thank you for attending this workshop!